In advertising, achieving a balance between art and science is crucial.

As direct-response advertisers, we rely on rigorous testing as the backbone of our daily operations. We continuously pit creatives against each other, fine-tune campaign bids and budgets, and meticulously analyze audience-specific results across the entire customer journey. While well-structured campaigns often provide clear answers, there are times when we need to adopt a more scientific approach. This involves formulating strong hypotheses, isolating variables, and conducting thorough A/B testing. In this article, we share our experiences with Facebook’s relatively new testing feature, creative split testing, and offer insights into when to use this powerful tool and when to skip it. Let’s begin with a primer on Facebook’s split testing feature.

Understanding Facebook Split Testing

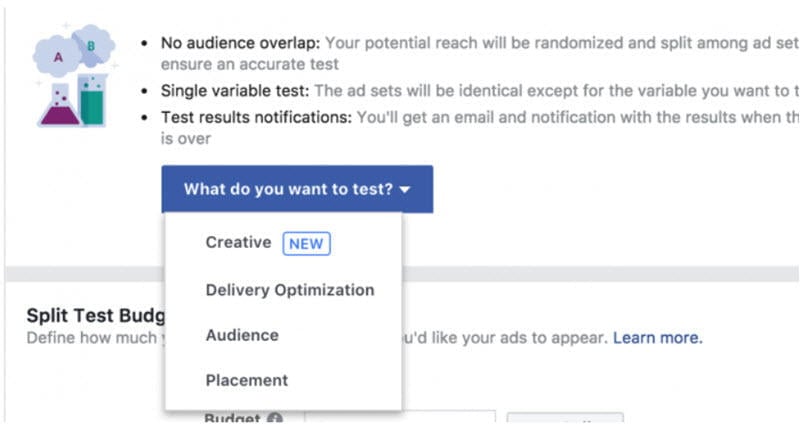

Previously accessible only through an API (which we accessed via our Facebook technology partner Smartly.io), creative split testing—Facebook’s terminology for ad A/B testing—has now transformed into a user-friendly self-service tool.

Since the self-service feature launched in November 2017, Facebook has let advertisers A/B test a range of variables, including:

- Creative assets

- Delivery Optimization strategies

- Target Audience segments

- Ad Placement options

Facebook’s A/B testing methodology involves dividing your ad set into equal parts (up to a maximum of five), ensuring no audience overlap and guaranteeing that users are exposed to only one variation throughout the test duration. This feature also ensures uniform budget allocation across all variations, a feat impossible to achieve manually. Naturally, all established A/B testing best practices still hold true:

- Isolate and test a single variable at a time for optimal results.

- Allow sufficient time for your test to achieve statistical significance and determine a clear winner. While Facebook recommends a minimum of four days, we check our results with this handy statistical significance calculator by Kissmetrics.

- A/B testing generally yields more reliable results with larger audience sizes. Aim for audiences of 1 million or more in each subset for optimal outcomes.

- Define your primary Key Performance Indicator (KPI) beforehand, whether it’s click-through rate or cost-per-install.

For a comprehensive guide on setting up Facebook ads for split testing, Adespresso has got you covered.
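To make the significance check concrete, here is a minimal sketch of a two-proportion z-test, one common way to compare the conversion or click-through rates of two variations. It is not necessarily the method the Kissmetrics calculator or Facebook uses; the function name `two_proportion_z_test` and the sample numbers are assumptions made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variations perform equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical numbers for illustration only: clicks and impressions per variation.
z, p = two_proportion_z_test(conv_a=200, n_a=10_000, conv_b=260, n_b=10_000)
significant = p < 0.05  # 0.05 is a conventional threshold, not a Facebook setting
print(f"z = {z:.2f}, p = {p:.4f}, significant: {significant}")
```

A p-value below your chosen threshold suggests the difference is unlikely to be noise, but it says nothing about whether the difference is large enough to matter for the business.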

When to Embrace Creative Split Testing

The value proposition of creative split testing is undeniable; it empowers advertisers to scientifically pinpoint the best-performing ads, audiences, or bidding strategies. However, it necessitates meticulous planning and dedicated budget allocation, potentially impacting overall efficiency. We constantly evaluate the trade-offs between A/B testing benefits and costs, and we have identified specific scenarios where A/B testing is highly recommended and others where it might be unnecessary. Here are some scenarios where A/B testing shines.

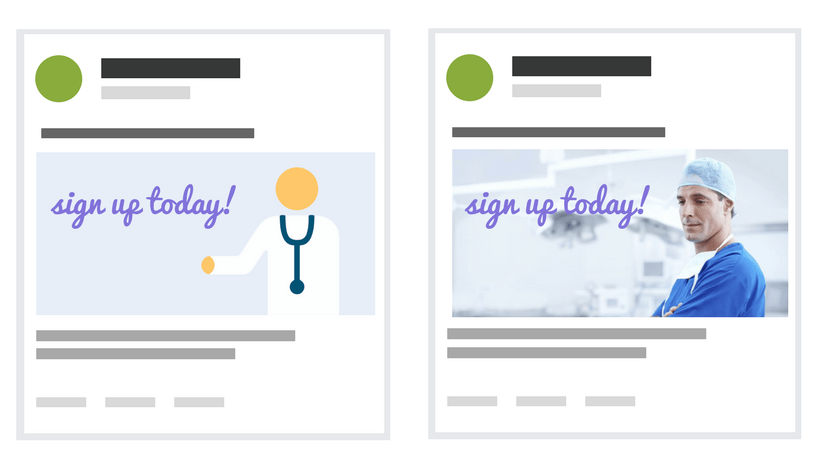

#1: Testing Imagery to Shape Future Creative Direction

The cardinal rule of split testing is straightforward: test measurable hypotheses. As an advertising agency committed to maintaining campaign structure hygiene, we take this a step further. We run A/B tests on hypotheses that significantly impact our overall strategy. When executed correctly, A/B testing provides clear and actionable insights to inform your creative strategy moving forward. Consider this example: We conducted a split test pitting an existing high-performing ad against a variation with identical messaging, concept, and copy but a different design element.

Test Specifics: In this test, our isolated variable was the design concept; all ad copy, in-image copy, calls-to-action (CTAs), and overarching concepts remained consistent. By tracking cost differences among our top-performing audience segments, we aimed to determine the most effective direction for our creative concepts.

Results: After running the test for a week to reach statistical significance, we identified a clear winner based on lower cost-per-acquisition (CPA). We then applied this insight to guide our subsequent creative asset production, ultimately achieving an average cost reduction of 60%.

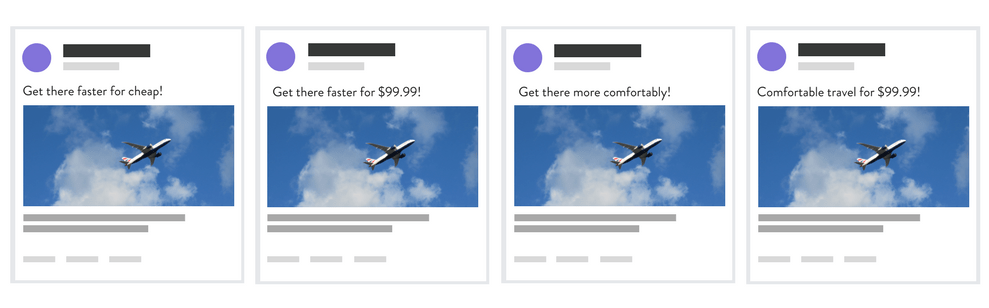

#2: Testing Messaging to Inform Company-Wide Strategy

Similar to the previous example, split-testing can help refine messaging across various channels, even influencing company-wide communication strategies. For an emerging transportation company, we conducted a split test to understand which initial messaging resonated most effectively with their core demographic.

Test Specifics: While traditional ad sets were an option, we understood the importance of high confidence levels in guiding our client’s future positioning. Conducting a controlled A/B test also provided insights into customer lifetime value, which we’ll explore later in this article.

Results: In this instance, our messaging variations didn’t meaningfully change the click-through rate (CTR). The result was statistically significant, but the winning messaging delivered only a marginal 3% improvement in CTR. These findings are still valuable because they prompt further questions. Should we focus on other aspects like imagery, CTAs, or value proposition communication? Should we tailor our messaging to different audience segments?

#3: Testing Creative Concept Variations for Specific Personas

Split testing proves invaluable when deciphering which creative elements resonate with new or challenging-to-reach audiences. Adopting an “ax first, sandpaper later” approach, you can use split testing to identify the most effective tactics first and then make incremental optimizations. For instance, for one client we wanted to determine whether persona-specific creative outperformed generic creative when targeting a new demographic group.

Test Specifics: This test pitted existing high-performing ads against new ads to validate our hypothesis that persona-specific creative would yield better results.

Results: The findings were significant considering this was an entirely untapped user segment for us, providing clear guidelines on how to approach this audience in future campaigns. The costs associated with the less effective concept were 270% higher.

#4: Determining Long-Term Value of Messaging or User Groups

While more time- and resource-intensive, split testing can also be used to create pseudo-cohorts that you keep monitoring after your initial test concludes. Here are a few examples:

- Test which messaging drives the highest bottom-of-the-funnel conversions (purchases, etc.).

- Test which Facebook placements deliver the lowest acquisition costs.

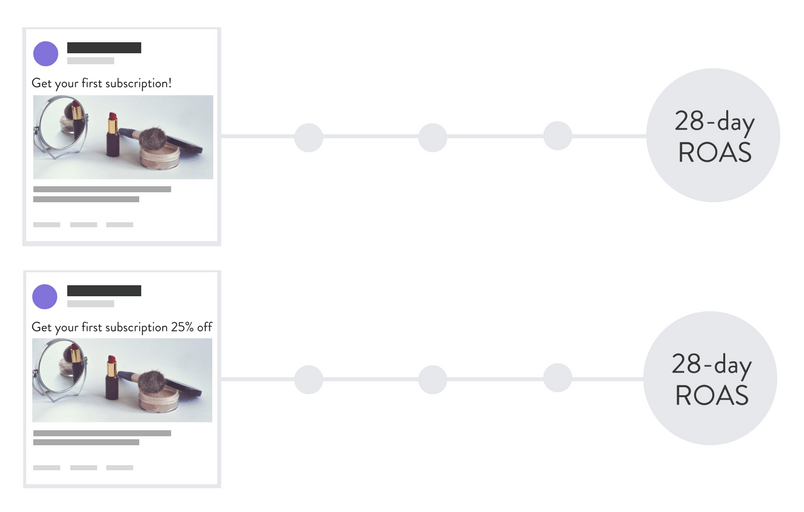

- Test which demographic (e.g., gender, age, interests, education) exhibits the highest lifetime value.

We implemented this strategy for a subscription-based consumer goods startup to identify the upfront ad messaging that generated the highest return on ad spend (ROAS) after 28 days.

Test Specifics: For this particular test, our objective was to track the ROAS of groups that received an offer compared to those that didn’t.

Results: After running the test long enough to obtain statistically significant cost data, we continued monitoring our two groups. Our analysis revealed that after 28 days, although conversion rates remained virtually identical, one group achieved a 27% higher ROAS.
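As a rough illustration of the cohort comparison described above, the sketch below computes ROAS for two groups and the relative lift between them. The helper names (`roas`, `relative_lift`), the group labels, and all spend and revenue figures are hypothetical and are not the client’s data.

```python
def roas(revenue, ad_spend):
    """Return on ad spend: revenue attributed to a group divided by its ad spend."""
    if ad_spend <= 0:
        raise ValueError("ad_spend must be positive")
    return revenue / ad_spend


def relative_lift(test_value, control_value):
    """Relative difference of test_value over control_value, as a fraction."""
    return (test_value - control_value) / control_value


# Hypothetical 28-day totals for two split-test groups (illustration only).
group_a = {"spend": 5_000.0, "revenue_28d": 12_000.0}
group_b = {"spend": 5_000.0, "revenue_28d": 10_000.0}

roas_a = roas(group_a["revenue_28d"], group_a["spend"])
roas_b = roas(group_b["revenue_28d"], group_b["spend"])
lift = relative_lift(roas_a, roas_b)
print(f"Group A ROAS {roas_a:.2f}, Group B ROAS {roas_b:.2f}, lift {lift:.0%}")
```

Because the split test gives both groups the same budget, a gap in ROAS with near-identical conversion rates points to a difference in revenue per customer rather than in acquisition volume.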

When to Reconsider Facebook Split Testing

Now, let’s face reality: we don’t always have the luxury of setting up highly scientific, tightly controlled A/B tests. In many cases, our structured campaigns and ad sets perform adequately, and alternative tailor-made solutions exist for specific scenarios. Here are some situations where you might want to rethink running an A/B test.

#1: Testing Multiple Creative Variables Simultaneously

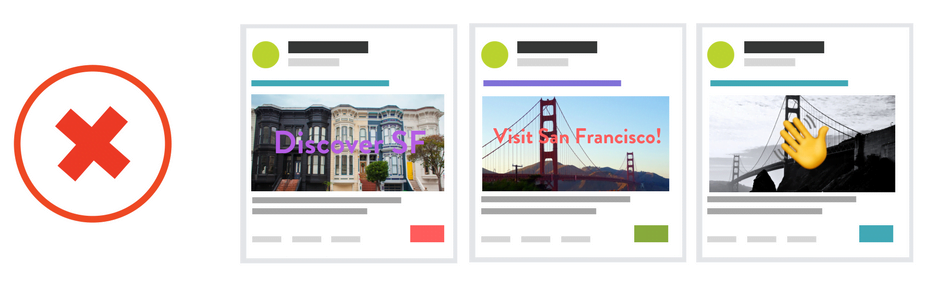

This violates a fundamental principle of A/B testing: test only one variable at a time.

Even if you were to test 20 variations of a single variable, achieving statistical significance would be incredibly challenging, because every added variation splits the same budget and audience into smaller groups. Your best bet is to run standard ad sets or, if you’re feeling adventurous, experiment with dynamic creative optimization (DCO). Although more of a black box, DCO can be more efficient at generating multiple learnings simultaneously. While it might not directly inform your broader strategy, it’s an effective use of resources if you need to test various ad copy variations, images, CTAs, and more.
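To see why many variations make significance hard to reach, here is a rough sketch using a textbook sample-size approximation for comparing two proportions, with a Bonferroni correction for comparing each challenger against a control. The function `sample_size_per_variation`, the 2% baseline rate, and the 10% relative lift are assumptions chosen for illustration, not figures from our tests or from Facebook.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variation(base_rate, relative_lift, n_variations,
                              alpha=0.05, power=0.8):
    """Approximate users needed in each variation to detect the given lift."""
    # Bonferroni correction: each challenger is compared against the control.
    comparisons = max(n_variations - 1, 1)
    adjusted_alpha = alpha / comparisons
    z_alpha = NormalDist().inv_cdf(1 - adjusted_alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    p1 = base_rate
    p2 = base_rate * (1 + relative_lift)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical scenario: 2% baseline rate, 10% relative lift to detect.
for k in (2, 5, 20):
    per_variation = sample_size_per_variation(0.02, 0.10, k)
    print(f"{k} variations: {per_variation} users each, {k * per_variation} in total")
```

Under these assumptions, both the audience needed per variation and the total audience grow as variations are added, which is one reason a 20-way test is rarely practical.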

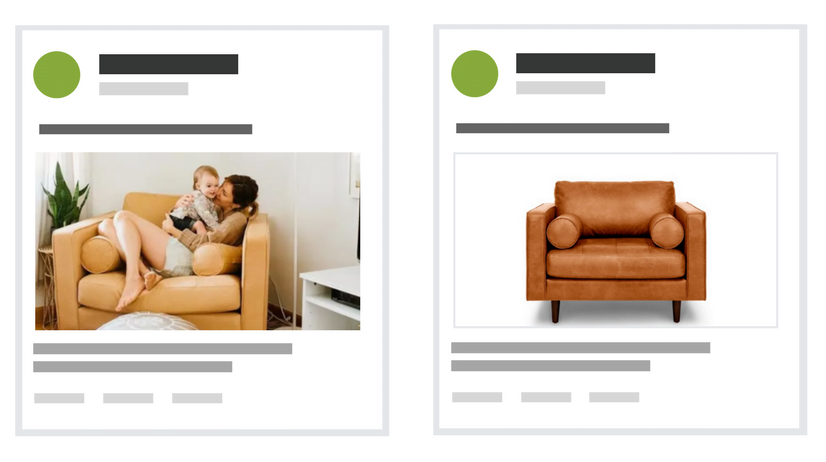

#2: Testing Minor Creative Tweaks with Limited Business Value

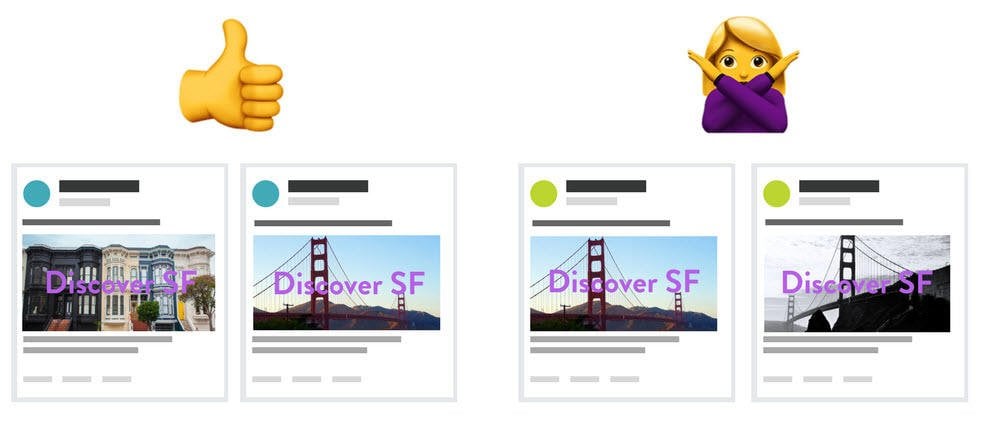

As emphasized earlier, A/B testing is most effective when you need definitive answers—when you have a specific, measurable hypothesis to prove or disprove. We advise against wasting time A/B testing superficial ad variations like individual words, image headlines, or background colors. Instead, focus on testing ads with significant conceptual differences.

The test on the left is better suited for an A/B test, as one image portrays more stereotypical San Francisco imagery, while the other presents a more “local” feel. The example on the right (testing color versus black and white photography) might offer some value but holds less significance for your overall business.

Ultimately, Facebook split testing remains the gold standard for running scientifically rigorous A/B tests. However, it demands careful planning and resource allocation. As a general rule, you should trust Facebook’s algorithm to deliver your highest-converting ads. Unless you require irrefutable answers, standard ad sets should suffice.

In any case, we encourage you to carefully weigh the pros and cons of setting up, running, and analyzing a Facebook creative split test upfront. We hope this article has guided you towards a well-informed decision.