A significant technological advancement, Virtual Reality (VR), has emerged. Experiencing VR for the first time evokes a much stronger emotional response than using a smartphone for the first time ever did. The first iPhone was released only 12 years ago, and VR as a concept has existed for far longer. However, the technology required to bring VR to everyday consumers has only recently become available.

Facebook’s Oculus Quest is a VR gaming platform designed for consumers. Its key feature is that it needs no PC: it offers a wireless, mobile VR experience. Handing someone a VR headset in a coffee shop to share a 3D model is about as awkward as googling something mid-conversation, but the payoff of the shared experience is far more engaging.

VR will revolutionize how we work, shop, consume content, and more.

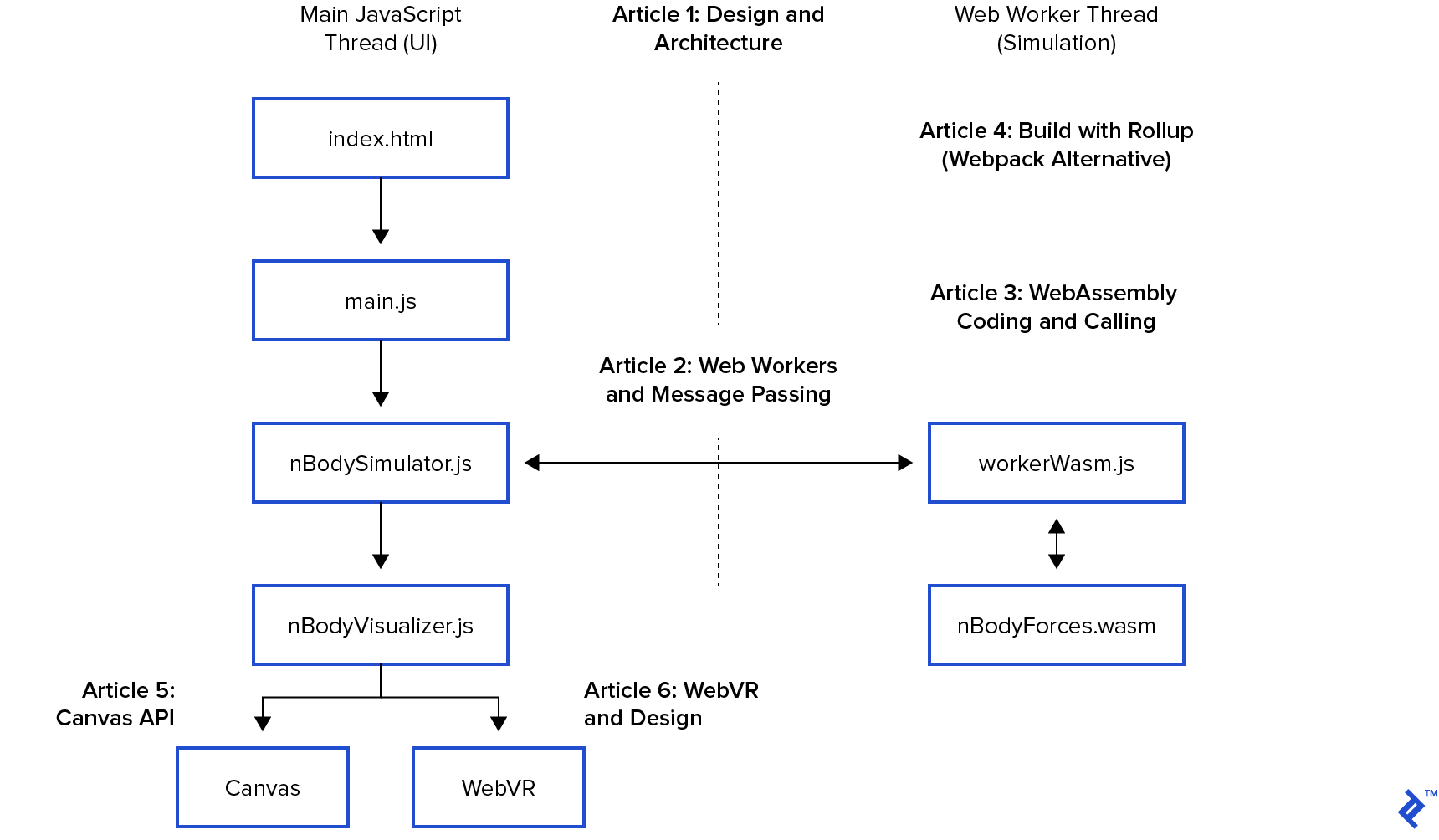

This series will delve into current browser technologies, including WebVR and browser edge computing, that power VR experiences. The first post will provide an overview of these technologies and an architecture for our simulation.

Subsequent articles will highlight some of the unique challenges and opportunities presented in code. I have developed a Canvas and WebVR demo and made the code available on GitHub to aid in exploring this technology.

The Implications of VR for UX

As a Toptal Developer, I guide businesses in taking projects from conception to beta testing with users. So, how does VR apply to business web applications?

Entertainment content will be the driving force behind VR adoption, similar to the trend observed with mobile devices. Nevertheless, once VR becomes as ubiquitous as mobile, “VR-first design” will become the standard user expectation, much like “mobile-first” design is today.

“Mobile-first” was a game-changer, “Offline-first” is a current shift, and “VR-first” is on the horizon. As a designer or developer, this is an exciting time to be in the field. VR represents a completely different design approach, which we will explore in the last article of this series. You cannot be considered a VR designer if you lack the ability to grip.

While VR originated during the Personal Computing (PC) revolution, it is now emerging as the next stage of the mobile revolution. Facebook’s Oculus Quest builds on ideas from Google Cardboard, combining Qualcomm’s Snapdragon 835 System-on-Chip (SoC), headset tracking using mobile cameras, and the Android operating system, all designed to fit comfortably on your face.

The $400 Oculus Quest offers incredible experiences that can be shared with friends. A new $1,000 iPhone no longer generates the same level of excitement. It seems humanity is fully embracing VR.

VR Applications Across Industries

VR is beginning to impact various industries and computing fields. Beyond the heavily publicized content consumption and gaming sectors, VR is gradually transforming industries such as architecture and healthcare.

- Architecture and Real Estate are industries where the value of a physical reality comes at a very high cost compared to digital alternatives. It makes intuitive sense for architects and real estate agents to utilize VR to provide clients with immersive virtual tours, showcasing the potential experience. VR allows for a “beta test” of a $200 million stadium or a virtual walkthrough conducted remotely over the phone.

- Learning and Education can benefit significantly from VR, which can provide experiences that would be impossible to replicate with traditional images or videos.

- Automotive companies are leveraging VR across various areas, from design and safety to training and marketing.

- At Stanford’s Lucile Packard Children’s Hospital, Healthcare professionals have been utilizing VR to plan intricate heart surgeries. VR provides a means to understand a patient’s anatomy in detail before making any incisions. Additionally, VR is being explored as a potential alternative to pharmaceuticals for pain management.

- Retail, Marketing, and Hospitality industries are already offering virtual tours of products and locations using VR. As retailers recognize the immense potential of VR to enhance the shopping experience, innovative players in the retail sector could potentially accelerate the decline of physical brick-and-mortar stores.

As technology continues to advance, we can anticipate increased VR adoption across various sectors. The key questions now revolve around the pace of this shift and the industries that will be most significantly impacted.

VR’s Implications for the Web and Edge Computing

“Edge Computing” involves shifting computing processes from centralized application server clusters and bringing them closer to the end user. This approach has gained significant attention because hosting providers are eager to offer low-latency servers in every city.

Google’s Stadia service is a prime example of B2C edge computing. It runs demanding CPU/GPU-intensive gaming workloads on Google’s servers and then streams the game to the user’s device, much like Netflix streams video. This lets even a basic Chromebook that can stream Netflix play games that typically require a powerful gaming computer. It also opens up new architecture possibilities for tightly integrated, immersive multiplayer games.

An example of B2B edge computing is Nvidia’s GRID, which uses Nvidia GPUs to provide virtual remote workstations to inexpensive, Netflix-class devices.

Question: Why not extend edge computing beyond data centers and into web browsers?

A practical application for browser edge computing is an “animation render farm” consisting of interconnected computers. These farms are used to render complex 3D movies by dividing the rendering process, which could take an entire day, into smaller tasks that thousands of computers can process simultaneously in just minutes.

Technologies such as Electron and NW.js brought web programming to desktop applications. Newer browser technologies, like Progressive Web Apps (PWAs), are bringing the web application distribution model (SaaS is largely about distribution) back to desktop computing. Distributed computing projects such as SETI@Home, Folding@Home (protein-folding research), and various render farms have traditionally required volunteers to download and install dedicated software. With browser edge computing, people could instead contribute to these computational efforts by simply visiting a website.

Question: Is WebVR a viable and sustainable technology, or will VR content be confined to “app stores” and closed ecosystems?

As a Toptal freelancer and technologist, I feel obligated to stay informed. To address my own inquiries, I developed a technical prototype. The findings were very encouraging, and I decided to share them in this blog series.

Spoiler alert: Yes, WebVR is a viable technology. And yes, browser edge computing can use the same APIs to access the processing power required to enable WebVR.

A Proof of Concept - Let’s Dive In!

We’ll develop an astrophysics simulation of the n-body problem to illustrate these concepts.

Astrophysicists use equations to calculate the gravitational force between two objects. However, no general closed-form equations exist for systems of three or more bodies, which unfortunately describes every known system in the universe. Such is the nature of science!

While the n-body problem has no general analytical solution (equations), it does have a computational solution (algorithms) with a time complexity of O(n²). O(n²) scales poorly, but it gets us what we want, and cases like this are part of why Big O notation was conceived in the first place.

Figure 2: “Is it going up and to the right? Well, I’m no engineer, but the performance looks good to me!”

If you’re refreshing your knowledge of Big O skills, remember that Big O notation measures how the performance of an algorithm “scales” in relation to the size of the input data.

In our case, the input data is represented by all the bodies in our simulation. Adding a new body introduces a new two-body gravity calculation for every existing body in the set.

The inner loop runs fewer than n iterations, since each pair of bodies only needs to be computed once, but its length still grows linearly with n rather than logarithmically. That leaves n(n-1)/2 pairs in total, so the overall algorithm is O(n²). That’s just the way it is, no bonus points for a better complexity.
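The pairwise calculation described above can be sketched as follows. This is a minimal illustration, not the project's actual code: the function name `pairwiseForces` and the body shape `{ mass, x, y }` are assumptions, and it uses Newton's law of gravitation in two dimensions.

```javascript
// Gravitational constant in SI units (m^3 kg^-1 s^-2).
const G = 6.674e-11;

// Hypothetical helper: bodies are { mass, x, y }.
// Returns one { fx, fy } per body plus the number of pairs visited.
function pairwiseForces(bodies) {
  const forces = bodies.map(() => ({ fx: 0, fy: 0 }));
  let pairCount = 0;

  for (let i = 0; i < bodies.length; i++) {        // outer loop: n iterations
    for (let j = i + 1; j < bodies.length; j++) {  // inner loop: each pair once
      pairCount++;
      const dx = bodies[j].x - bodies[i].x;
      const dy = bodies[j].y - bodies[i].y;
      const distSq = dx * dx + dy * dy;
      if (distSq === 0) continue; // coincident bodies: avoid dividing by zero

      const dist = Math.sqrt(distSq);
      // Newton's law of gravitation: F = G * m1 * m2 / r^2
      const f = (G * bodies[i].mass * bodies[j].mass) / distSq;
      const fx = (f * dx) / dist;
      const fy = (f * dy) / dist;

      // Equal and opposite forces on the two bodies.
      forces[i].fx += fx;
      forces[i].fy += fy;
      forces[j].fx -= fx;
      forces[j].fy -= fy;
    }
  }
  return { forces, pairCount };
}
```

For n bodies the loops visit n(n-1)/2 pairs, so going from 3 to 4 bodies raises the pair count from 3 to 6.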

The n-body solution also leads us to the realm of physics/game engines and an exploration of the technologies underpinning WebVR.

Once the simulation is complete, we will create a 2D visualization for our prototype.

Lastly, we will replace the Canvas visualization with a WebVR version.

If you’re eager to get started, feel free to jump directly to the project’s code.

Web Workers, WebAssembly, AssemblyScript, Canvas, Rollup, WebVR, Aframe

Prepare for an exciting and informative journey through a collection of new technologies readily available in your modern mobile browsers (excluding Safari, unfortunately):

- Web Workers will be used to offload the simulation to a separate CPU thread, thus improving both perceived and actual performance.

- We’ll use WebAssembly to run the O(n²) algorithm in that dedicated thread as highly optimized code compiled from a language such as C, C++, Rust, or AssemblyScript.

- Canvas will be our tool for creating a 2D visualization of our simulation.

- As a lightweight alternative to Webpack, we’ll employ Rollup and Gulp.

- Finally, we’ll use WebVR and Aframe to develop a Virtual Reality experience accessible on your smartphone.

A High-Level Architecture Before We Start Coding

Let’s begin with a Canvas-based implementation since you’re probably at work right now.

Initially, we’ll utilize existing browser APIs to leverage the necessary computing resources for creating a CPU-intensive simulation without sacrificing user experience.

Following that, we’ll visualize this simulation directly in the browser using Canvas, and eventually, we’ll replace our Canvas visualization with WebVR powered by Aframe.

API Design and Simulation Loop

Our n-body simulation predicts the positions of celestial objects from the gravitational forces between them. An equation gives the exact force between two objects, but computing the motion of three or more objects requires dividing the simulation into small time steps and iterating through them. For a smooth visual experience we target 30 frames per second (a common video frame rate), which leaves approximately 33 milliseconds per frame.
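To make the idea of small time steps concrete, here is a minimal sketch of advancing one body by a single step. The integrator (semi-implicit Euler), the function name `stepBody`, and the body fields are assumptions chosen for illustration; the article does not state which integration scheme the demo uses.

```javascript
const FRAMES_PER_SECOND = 30;
const FRAME_MS = 1000 / FRAMES_PER_SECOND; // about 33.3 ms per frame

// Hypothetical helper: advance one body by one time step `dt` under `force`.
// Semi-implicit Euler: update the velocity first, then the position.
// Body shape { mass, x, y, vx, vy } and force shape { fx, fy } are assumed.
function stepBody(body, force, dt) {
  if (body.mass > 0) {
    body.vx += (force.fx / body.mass) * dt; // a = F / m
    body.vy += (force.fy / body.mass) * dt;
  }
  // Zero-mass bodies skip the acceleration and keep their current velocity.
  body.x += body.vx * dt;
  body.y += body.vy * dt;
  return body;
}
```

Smaller steps track the true trajectory more closely but cost more computation per simulated second, which is the trade-off behind the per-frame time budget.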

To help you understand the structure, here’s a brief overview of the code:

- The browser sends a GET request for index.html.

- This triggers the execution of main.js. Rollup, our chosen alternative to Webpack, handles the import statements.

- An instance of nBodySimulator() is created.

- This simulator exposes an external API:

  - sim.addVisualization()

  - sim.addBody()

  - sim.start()

In the demo, two asteroids have zero mass, so gravity does not affect them. They exist to keep the 2D visualization zoomed out to at least 30x30. The last piece of setup code is a “mayhem” button which, when clicked, adds 10 small inner planets for some captivating, spinny action!
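The setup described above might look roughly like the sketch below. Only the names nBodySimulator, addVisualization, addBody, and start come from the article; the stand-in class body, the `body()` factory, the masses and coordinates, and the `createDemo` and `addMayhem` helpers are all hypothetical, included so the example runs on its own.

```javascript
// Stand-in for the simulator so this sketch is self-contained.
// Only the three method names are taken from the article.
class nBodySimulator {
  constructor() {
    this.bodies = [];
    this.visualizations = [];
    this.running = false;
  }
  addVisualization(vis) { this.visualizations.push(vis); }
  addBody(body) { this.bodies.push(body); }
  start() { this.running = true; } // the real start() would begin the loop
}

// Hypothetical body factory: name, mass, position, velocity.
const body = (name, mass, x, y, vx = 0, vy = 0) => ({ name, mass, x, y, vx, vy });

function createDemo(visualization) {
  const sim = new nBodySimulator();
  sim.addVisualization(visualization);
  sim.addBody(body('star', 100, 0, 0));
  // Zero-mass asteroids at opposite corners: they feel no force, so they
  // stay put and keep the view zoomed out to at least 30x30.
  sim.addBody(body('asteroid-1', 0, -15, -15));
  sim.addBody(body('asteroid-2', 0, 15, 15));
  sim.start();
  return sim;
}

// "Mayhem" handler: adds `count` small inner planets at random positions.
function addMayhem(sim, count = 10) {
  for (let i = 0; i < count; i++) {
    const x = Math.random() * 10 - 5;
    const y = Math.random() * 10 - 5;
    sim.addBody(body(`planet-${i}`, 0.1, x, y));
  }
}
```

In a browser, `addMayhem` would typically be attached to the button with `addEventListener('click', ...)`.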

The next part is our “simulation loop.” It recalculates and repaints every 33 milliseconds. If you’re enjoying this, you could also call it a “game loop.” We’ll use setTimeout() to implement our loop, as it’s the simplest approach that can achieve our goal. An alternative could be requestAnimationFrame().

sim.start() initiates the simulation by invoking the sim.step() function every 33 milliseconds, aiming for a frame rate of approximately 30 frames per second.
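A setTimeout-based loop of this kind can be sketched as below. This is an assumption-laden illustration rather than the demo's code: `createLoop`, its `schedule` parameter, and the `stop()` method are hypothetical names, and the scheduler is injectable only so the loop can be driven by hand.

```javascript
const STEP_MS = 33; // roughly 30 frames per second

// Hypothetical loop wrapper: calls step() immediately, then again every
// STEP_MS until stop() is called. `schedule` defaults to setTimeout.
function createLoop(step, schedule = setTimeout) {
  let running = false;
  let generation = 0; // invalidates timers left over from before a stop()

  function tick(gen) {
    if (gen !== generation) return; // stale timer from a stopped loop
    step();
    schedule(() => tick(gen), STEP_MS); // re-arm after each step
  }

  return {
    start() {
      if (running) return; // ignore repeated start() calls
      running = true;
      tick(generation);
    },
    stop() {
      running = false;
      generation++;
    },
  };
}
```

Because each timer is armed only after step() returns, a slow step delays the next frame instead of stacking calls. requestAnimationFrame would instead tie the loop to the display's refresh.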

We’re moving beyond the design phase and into implementation. We will implement the physics calculations in WebAssembly and execute them within a separate Web Worker thread.

The nBodySimulator encapsulates this implementation complexity, dividing it into several distinct parts:

- calculateForces() is a function that returns a promise to compute the forces to be applied.

  - These calculations primarily involve floating-point operations and are handled by WebAssembly.

  - With a time complexity of O(n²), these calculations represent our performance bottleneck.

  - To mitigate this, we utilize a Web Worker to offload these computations to a separate thread, improving both perceived and actual performance.

- trimDebris() removes any debris that is no longer of interest (and would cause our visualization to zoom out unnecessarily). This function has a time complexity of O(n).

- applyForces() applies the computed forces to the bodies, with a time complexity of O(n).

  - If we’ve skipped calculateForces() because the worker thread is already busy, we reuse previously calculated forces. While this prioritizes perceived performance by reducing jitter, it does come at the expense of some accuracy.

  - This approach ensures that the main UI thread can continue rendering using the old forces even if the force calculation exceeds the allotted 33 milliseconds.

- visualize() iterates through the array of bodies, passing each one to each visualizer for rendering. This function has a time complexity of O(n).
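The four parts above could fit together in a single step roughly as follows. This is a simplified sketch under stated assumptions: the class name `StepSketch`, the injected `requestForces` function (standing in for the Web Worker and WebAssembly round trip), the `paint` method, the body `id` field, and the constants are all hypothetical.

```javascript
const DT = 0.033;         // simulated seconds per step (assumed)
const MAX_DISTANCE = 100; // bodies farther from the origin are dropped (assumed)

class StepSketch {
  constructor(requestForces) {
    this.requestForces = requestForces; // (bodies) => Promise<Map<id, {fx, fy}>>
    this.bodies = [];
    this.visualizations = [];
    this.forces = new Map(); // most recent forces, keyed by body id
    this.workerBusy = false;
  }

  step() {
    this.calculateForces(); // skipped while the worker is still busy
    this.trimDebris();
    this.applyForces();     // uses the newest forces available, possibly stale
    this.visualize();
  }

  calculateForces() {
    if (this.workerBusy) return; // keep rendering with the old forces
    this.workerBusy = true;
    const snapshot = this.bodies.map((b) => ({ ...b })); // copy to hand off
    this.requestForces(snapshot)
      .then((forces) => { this.forces = forces; })
      .catch((err) => console.error('force calculation failed', err))
      .finally(() => { this.workerBusy = false; });
  }

  trimDebris() { // O(n)
    this.bodies = this.bodies.filter((b) => Math.hypot(b.x, b.y) <= MAX_DISTANCE);
  }

  applyForces() { // O(n)
    for (const b of this.bodies) {
      const f = this.forces.get(b.id);
      if (f && b.mass > 0) {
        b.vx += (f.fx / b.mass) * DT;
        b.vy += (f.fy / b.mass) * DT;
      }
      b.x += b.vx * DT;
      b.y += b.vy * DT;
    }
  }

  visualize() { // O(n) per visualizer
    for (const b of this.bodies) {
      for (const vis of this.visualizations) vis.paint(b);
    }
  }
}
```

Keying the forces by body id, rather than by array index, keeps stale results usable after trimDebris() has removed bodies.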

And all of this happens within a mere 33 milliseconds! Could this design be further enhanced? Absolutely! If you’re intrigued or have suggestions, please share them in the comments below. For a more sophisticated and modern design and implementation, you can explore the open-source Matter.js.

Time for Launch!

I had a fantastic time working on this project, and I’m thrilled to finally share it. Let’s continue this exciting journey!

- Introduction - this page

- Web Workers - harnessing the power of multiple threads

- WebAssembly - enabling browser-based computation without JavaScript

- Rollup and Gulp - exploring an alternative to Webpack

- Canvas - drawing with the Canvas API and the “simulation loop”

- WebVR - transitioning from our Canvas visualizer to WebVR and Aframe

Entertainment will drive content in Virtual Reality, just as it did on mobile. But once VR is as commonplace as mobile is today, it will become the expected experience for consumer and productivity applications alike.

We’ve never had more power to create impactful human experiences. As designers and developers, we are living in extraordinary times. Let’s move beyond web pages and start building entire worlds.

Our adventure begins with the unassuming Web Worker. Stay tuned for the next installment of our WebVR series.