With a global usage rate of 58.4%, social media platforms provide a constant stream of opinions, thoughts, and conversations. This data offers valuable insights into the topics that resonate most with users.

For marketing professionals, analyzing social media can be crucial to understanding and capitalizing on consumer behavior. Two widely used and effective methods are:

- Topic modeling, which seeks to answer, “What are users discussing?”

- Sentiment analysis, which aims to answer, “How positive or negative are user sentiments towards a particular topic?”

This article utilizes Python for social media data analysis, demonstrating how to extract valuable market insights, identify actionable feedback, and pinpoint the product features most important to customers.

Case Study: Analyzing Smartwatch Discussions on Reddit

To showcase the effectiveness of social media analysis, we’ll delve into a product analysis of various smartwatches using Reddit data and Python. Python, a powerful language for data science, offers numerous libraries that simplify the implementation of the machine learning (ML) and natural language processing (NLP) models used in this analysis.

Reddit was chosen as the data source (over Twitter, Facebook, or Instagram) because it is the second most trusted social media platform for news and information, according to the American Press Institute. In addition, Reddit’s subforum structure, with dedicated “subreddits” where users discuss and review specific products, makes it well suited to product-focused analysis.

We’ll begin by employing sentiment analysis to compare user opinions on popular smartwatch brands, identifying the most favorably viewed products. Subsequently, we’ll utilize topic modeling to zero in on specific smartwatch features that are frequently discussed by users. While our example is specific, the methodology can be applied to any product or service.

Preparing Our Reddit Data Sample

The dataset for this analysis consists of the title, the main text, and all comments from the latest 100 posts within the r/smartwatch subreddit. It captures the 100 most recent product discussions, encompassing user experiences, product recommendations, and discussions of pros and cons.

To gather this information from Reddit, we’ll use PRAW, the Python Reddit API Wrapper. Begin by setting up a client ID and secret token on Reddit, following the instructions in Reddit’s OAuth2 guide. Then, follow the official PRAW tutorials on downloading post comments and getting post URLs.
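The collection step might look roughly like the sketch below. It is an illustration rather than the code used for this article: the function name collect_posts and the dictionary layout are assumptions, and the credentials in the comments are placeholders. The PRAW calls it relies on (subreddit(...).new(), comments.replace_more(), and comments.list()) are part of PRAW's documented interface.

```python
def collect_posts(reddit, subreddit_name="smartwatch", limit=100):
    """Return one dict per post with its title, body, and flattened comments.

    `reddit` is expected to be an authenticated praw.Reddit instance.
    """
    rows = []
    for submission in reddit.subreddit(subreddit_name).new(limit=limit):
        # Comment trees contain "load more comments" stubs; limit=0 removes
        # those stubs instead of fetching them with extra API requests.
        submission.comments.replace_more(limit=0)
        comments = [comment.body for comment in submission.comments.list()]
        rows.append(
            {
                "title": submission.title,
                "body": submission.selftext,
                "comments": comments,
            }
        )
    return rows


# With real credentials (placeholders shown), the client could be created as:
#   import praw
#   reddit = praw.Reddit(
#       client_id="YOUR_CLIENT_ID",
#       client_secret="YOUR_CLIENT_SECRET",
#       user_agent="smartwatch-analysis by u/your_username",
#   )
#   posts = collect_posts(reddit)
```

Passing the client in as an argument keeps the function easy to test with a stand-in object and keeps credentials out of the analysis code.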

Sentiment Analysis: Unveiling Top-Performing Products

To identify market leaders, we can analyze the positive and negative sentiments expressed by users about specific brands by applying sentiment analysis to our text data. Sentiment analysis models are NLP tools designed to classify text as positive or negative based on the words and phrases used. There are various models, ranging from simple positive/negative word counters to sophisticated deep neural networks.

For our analysis, we will use VADER (Valence Aware Dictionary and sEntiment Reasoner), chosen for its effectiveness on short social media texts. VADER scores text using a sentiment lexicon combined with rule-based heuristics.

To analyze the data, use a Python ML notebook of your choice (such as Jupyter). Install VADER using pip.

We’ll start by adding three new columns to our dataset: compound sentiment scores for the post title, post body, and comments. To do this, we’ll iterate through each text segment and apply VADER’s polarity_scores method. This method takes a string as input and returns a dictionary containing four scores: positive (pos), negative (neg), neutral (neu), and compound.

Our focus will be on the compound score, a single normalized value that summarizes the overall sentiment of the text. It ranges from -1 (most negative) to 1 (most positive), which lets us represent the sentiment of a text with one easily interpretable number.

The next step is to categorize the texts by product and brand, which lets us associate sentiment scores with specific smartwatches. We begin by defining a list of product lines to analyze and then check each text segment for mentions of these products.

It’s possible that some texts mention multiple products (e.g., a comment comparing two smartwatches). In such cases, we can either discard those texts or employ NLP techniques to split them (assigning parts of the text to each product).

For clarity and simplicity, our analysis will discard texts mentioning multiple products.
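The tagging and discard steps described above can be sketched with a few regular expressions. The brand list mirrors the brands compared in this article, but the patterns themselves are illustrative assumptions; a real list would likely include model names as well.

```python
import re

# Illustrative patterns, matched case-insensitively on word boundaries.
BRAND_PATTERNS = {
    "Samsung": r"\bsamsung\b",
    "Apple": r"\bapple\b",
    "Xiaomi": r"\bxiaomi\b",
    "Huawei": r"\bhuawei\b",
    "Amazfit": r"\bamazfit\b",
    "OnePlus": r"\bone\s?plus\b",  # also matches the spelling "one plus"
}
COMPILED = {
    brand: re.compile(pattern, re.IGNORECASE)
    for brand, pattern in BRAND_PATTERNS.items()
}


def brands_mentioned(text):
    """Return every brand whose pattern occurs in the text."""
    return [brand for brand, pattern in COMPILED.items() if pattern.search(text)]


def single_brand(text):
    """Return the brand if exactly one is mentioned, otherwise None."""
    found = brands_mentioned(text)
    return found[0] if len(found) == 1 else None


print(single_brand("My OnePlus watch charges really fast"))
print(single_brand("Apple or Samsung, which one should I buy?"))
```

Texts for which single_brand returns None (no brand, or more than one) would simply be dropped from the per-brand comparison.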

Unveiling Insights from Sentiment Analysis

We can now analyze the data and determine the average sentiment associated with each smartwatch brand, based on user feedback.
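With one row per text segment, a brand label, and a compound score, the averaging step reduces to a group-by. The sketch below uses made-up numbers purely to show the mechanics; they are not the results of this analysis, and the column names are assumptions.

```python
import pandas as pd

# Hypothetical long-format data: one row per text that mentions a single brand.
texts = pd.DataFrame(
    {
        "brand": ["Samsung", "Samsung", "Apple", "OnePlus"],
        "compound": [0.6, 0.2, 0.5, 0.8],
    }
)

# Mean compound score per brand, highest first.
avg_sentiment = (
    texts.groupby("brand")["compound"].mean().sort_values(ascending=False)
)
print(avg_sentiment)
```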

The analysis yields the following insights:

| Smartwatch | Samsung | Apple | Xiaomi | Huawei | Amazfit | OnePlus |
|---|---|---|---|---|---|---|
| Sentiment Compound Score (Avg.) | 0.4939 | 0.5349 | 0.6462 | 0.4304 | 0.3978 | 0.8413 |

These findings provide valuable market intelligence. For instance, our data suggests that users have a more positive sentiment towards the OnePlus smartwatch compared to others.

However, businesses should look beyond average sentiment scores and investigate the underlying factors influencing them. What are the specific features that users love or dislike about each brand? To answer these questions, we can employ topic modeling to delve deeper into our analysis and generate actionable feedback on products and services.

Topic Modeling: Uncovering Key Product Attributes

Topic modeling, a branch of NLP, uses ML models to mathematically identify the themes present in a body of text. This discussion will focus on traditional NLP topic modeling techniques, although recent advancements have been made with transformer-based approaches such as BERTopic.

Various topic modeling algorithms exist, including non-negative matrix factorization (NMF), sparse principal components analysis (sparse PCA), and latent Dirichlet allocation (LDA). These ML models take a matrix as input and reduce its dimensionality. The structure of the input matrix is as follows:

- Each column corresponds to a word.

- Each row represents a text segment.

- Each cell represents the frequency of a word in a specific text.

These unsupervised models can all be used for topic decomposition. NMF is widely used in social media analysis due to its interpretability and will be our choice for this example. NMF generates an output matrix with the following structure:

- Each column represents a topic.

- Each row represents a text segment.

- Each cell indicates the extent to which a text discusses a specific topic.
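In matrix terms, the decomposition described above can be written as follows. The symbols are notation introduced here for illustration: $X$ is the input matrix with $n$ text segments and $m$ words, $W$ is the output matrix with $k$ topics, and $H$ holds the weight of each word in each topic.

$$
X_{n \times m} \;\approx\; W_{n \times k} \, H_{k \times m}, \qquad W \ge 0,\; H \ge 0
$$

NMF searches for non-negative $W$ and $H$ that make the reconstruction error small, commonly measured with the Frobenius norm $\lVert X - WH \rVert_F$. Because every entry is non-negative, each text is expressed as an additive mix of topics, which is a large part of why the results are easy to interpret.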

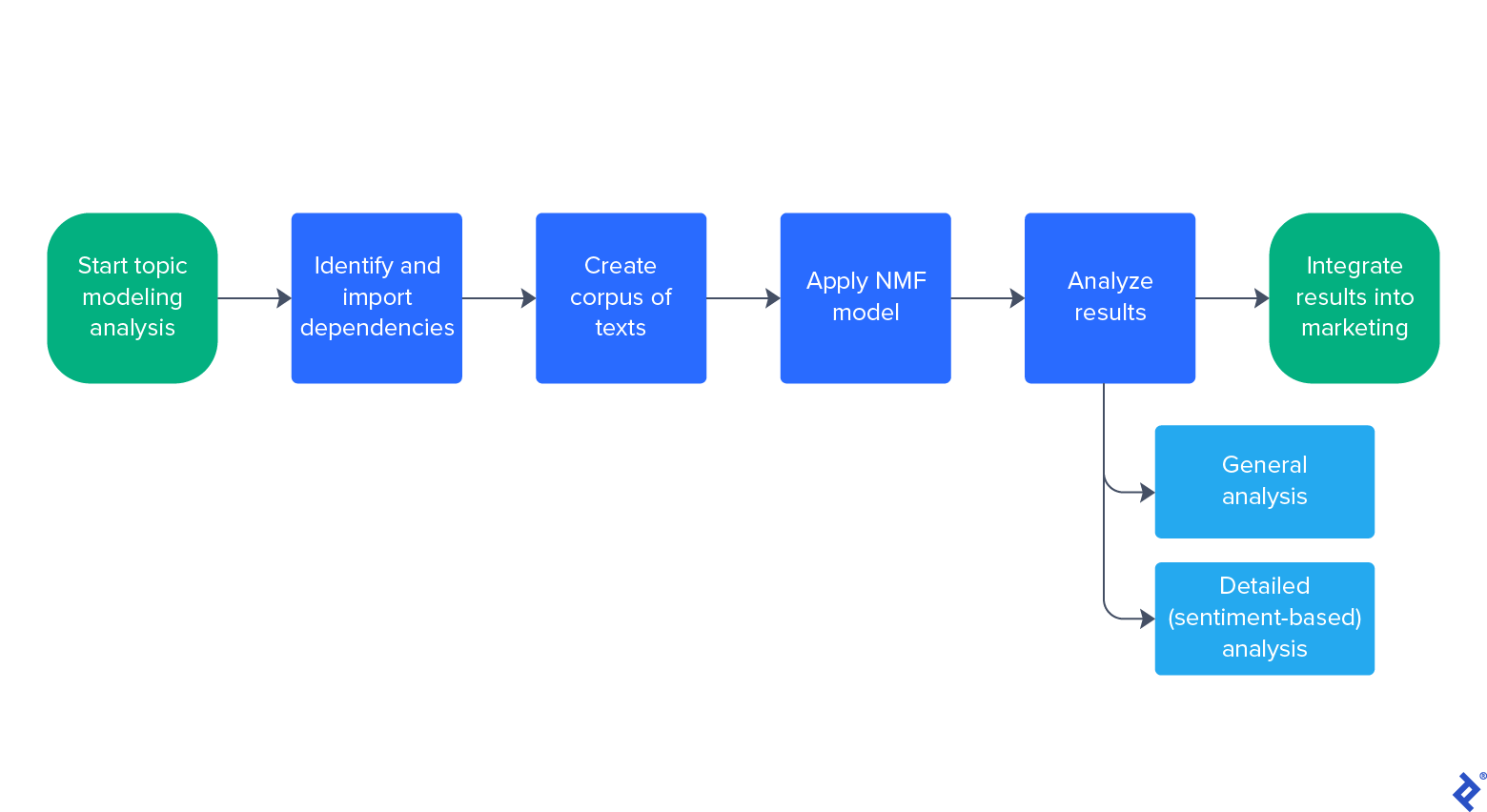

Our workflow follows this process: we’ll first apply the NMF model to identify general topics of interest and then narrow our focus to positive and negative topics.

Uncovering General Topics of Interest

Let’s examine the topics related to the OnePlus smartwatch, given its high compound sentiment score. We’ll start by importing the necessary packages for NMF functionality and a list of common stop words to exclude from our text.

Next, we’ll create a list containing the text corpus for analysis. We’ll use the CountVectorizer and TfidfTransformer classes from scikit-learn’s ML library to generate our input matrix.

(Note that handling n-grams—variations in spelling and usage like “one plus”—is discussed in my previous article on topic modeling.)

We’re now ready to apply the NMF model and uncover the latent topics within our data. Like other dimensionality reduction techniques, NMF requires the number of topics (the reduced dimension) as a parameter. For simplicity, we’ll use 10 topics, but you can experiment with different values; heuristics such as the silhouette coefficient or the elbow method can help you choose. We’ll also set a random state for reproducibility.
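The fitting step could be sketched as below, using scikit-learn's NMF class. The helper name top_words_per_topic and its parameters are assumptions made for this example; it expects a non-negative text-by-word matrix and the matching list of words, such as those produced by the vectorization step.

```python
import numpy as np
from sklearn.decomposition import NMF


def top_words_per_topic(matrix, feature_names, n_topics=10, n_words=10, seed=0):
    """Fit NMF and return (important_words, doc_topic).

    important_words is a list with one entry per topic, each a list of the
    topic's highest-weighted words in descending order of weight.
    """
    model = NMF(n_components=n_topics, random_state=seed, max_iter=500)
    doc_topic = model.fit_transform(matrix)  # shape: (n_texts, n_topics)
    topic_word = model.components_  # shape: (n_topics, n_words_in_vocab)

    names = np.asarray(feature_names)
    important_words = [
        names[np.argsort(weights)[::-1][:n_words]].tolist()
        for weights in topic_word
    ]
    return important_words, doc_topic
```

Called as important_words, doc_topic = top_words_per_topic(tfidf, feature_names), it returns the word lists discussed next, along with the text-by-topic matrix in case you want to see which texts load most heavily on a given topic.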

The important_words variable now holds lists of words. Each list represents a topic, with words ordered by their importance within the topic. These lists often include both meaningful and less relevant (“garbage”) topics. This is common in topic modeling as it’s challenging for algorithms to perfectly cluster all texts into a limited number of topics.

Inspecting the important_words output reveals meaningful topics around words like “budget” or “charge,” suggesting features users consider important when discussing OnePlus smartwatches.

Given the high compound sentiment score for OnePlus, we might assume this is due to its affordability or battery life compared to competitors. However, at this stage, we lack information about whether users view these factors positively or negatively. A more detailed analysis is needed for concrete answers.

Diving into Positive and Negative Topics

Our refined analysis applies the same concepts as before, separately examining positive and negative texts. This will reveal the factors driving positive and negative sentiments towards a product.

We’ll demonstrate this using the Samsung smartwatch. The same pipeline will be used, but with different text corpora:

- A list of positive texts with a compound score greater than 0.8 will be created.

- A list of negative texts with a compound score less than 0 will be created.

These thresholds are chosen to capture roughly the 20% most positive and the 20% most negative texts, which helps keep the results of our smartwatch sentiment analysis robust.
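Building the two corpora is a simple filter on the compound score. The sketch below assumes a DataFrame with hypothetical text and compound columns, and the sample rows are invented. The quantile call at the end is one way to check where comparable cutoffs would fall in your own data.

```python
import pandas as pd

POSITIVE_THRESHOLD = 0.8  # keep texts scoring strictly above this
NEGATIVE_THRESHOLD = 0.0  # keep texts scoring strictly below this


def split_by_sentiment(df, text_col="text", score_col="compound"):
    """Return (positive_texts, negative_texts) as two lists of strings."""
    positive = df.loc[df[score_col] > POSITIVE_THRESHOLD, text_col].tolist()
    negative = df.loc[df[score_col] < NEGATIVE_THRESHOLD, text_col].tolist()
    return positive, negative


# Hypothetical scored texts for a single brand.
samsung = pd.DataFrame(
    {
        "text": ["Love the GPS", "It is fine", "Calorie count is way off"],
        "compound": [0.9, 0.5, -0.3],
    }
)

positive_texts, negative_texts = split_by_sentiment(samsung)
print(positive_texts, negative_texts)

# Inspect the 20th and 80th percentiles to sanity-check the thresholds.
print(samsung["compound"].quantile([0.2, 0.8]))
```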

We can now apply the same topic modeling technique used for general topics to uncover positive and negative themes. The results provide more specific marketing insights: For example, our model’s negative corpus output reveals a topic related to the accuracy of calorie tracking, while the positive output highlights navigation/GPS and health indicators like heart rate and blood oxygen levels. This gives us actionable feedback on aspects users appreciate and areas for potential improvement.

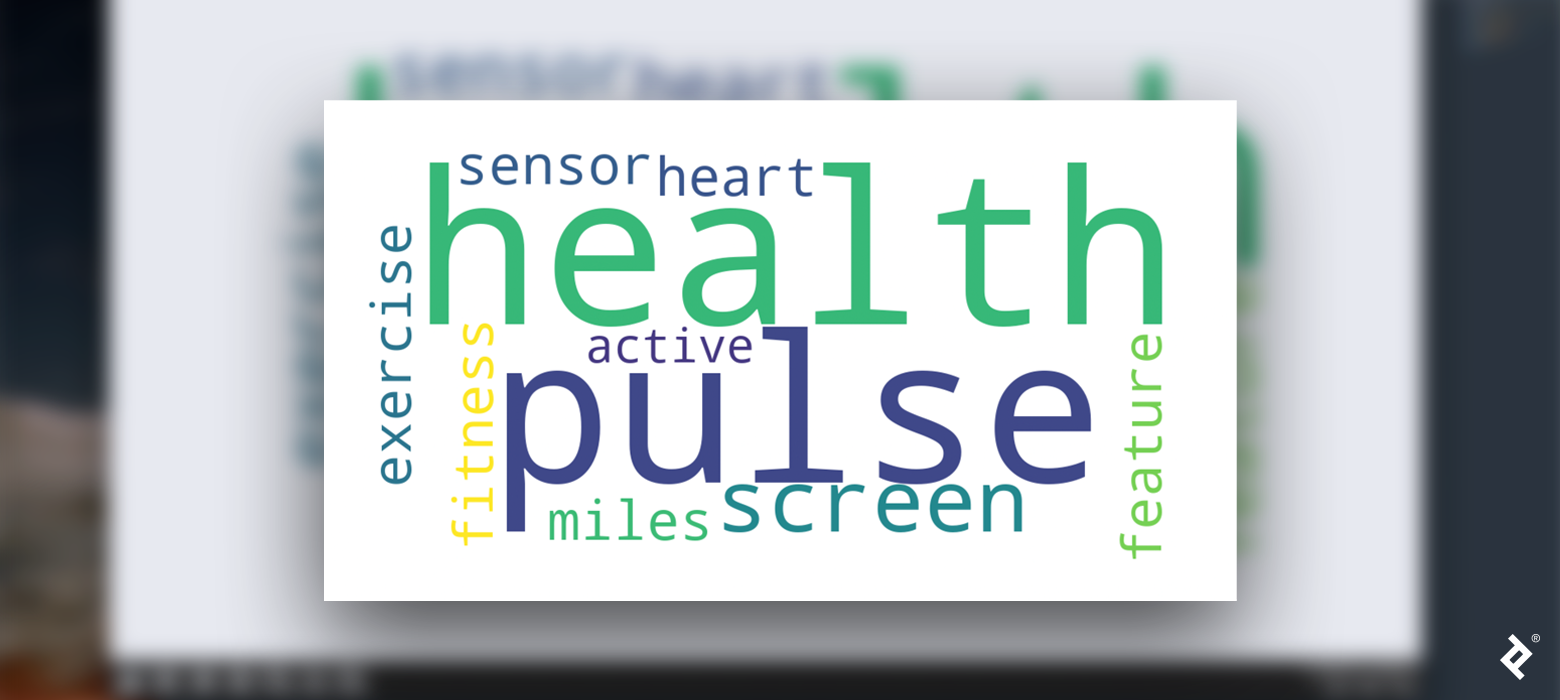

To enhance the presentation of your findings, consider creating a word cloud or a similar visualization to highlight the key topics identified in this tutorial.

Unleashing the Power of Social Media Analysis

Through this analysis, we’ve gained insights into user perceptions of a target product and its competitors, identified the aspects users love about top brands, and pinpointed potential areas for product improvement. Analyzing public social media data empowers businesses to make data-driven decisions, prioritize initiatives effectively, and enhance overall user satisfaction. Incorporating social media analysis into your product development cycle can lead to more effective marketing campaigns and better product design—because listening to your audience is paramount.

The editorial team of the Toptal Engineering Blog extends its gratitude to Daniel Rubio for reviewing the code samples and other technical content presented in this article.