Part 1: Tokenizer

This series will guide you through the step-by-step development of a new scripting language.

The first question that might pop into your head is: “Do we really need another programming language?”

Do We Really Need a New Programming Language?

Let’s take a detour to answer this question.

Imagine yourself as an architect (someone who designs buildings, not software). You’re stuck in a country drowning in red tape. You have a fantastic idea for a shopping mall in your underdeveloped hometown. You meticulously craft the project and confidently submit your building permit application. As expected, it’s immediately rejected because you don’t have a registered company. Determined, you start the company registration process, which involves depositing a hefty sum. You then try to find a suitable name for your company, but every suggestion is met with disapproval. Finally, after five attempts, your company name is accepted, and your application is placed at the bottom of a massive pile. Exhausted and defeated, you either give up or discover that someone else has already built a shopping mall, capitalizing on your brilliant idea.

Thankfully, we are software engineers, not architects bound by physical limitations. We have the power to bring our ideas to life without the need for permits or navigating bureaucratic nightmares. All we need is some free time and the dedication to spend it coding instead of solving sudoku puzzles.

If you’re still not convinced that pure curiosity is a valid reason to create a programming language, let me share my own experience. My first foray into language design was driven by necessity, not just curiosity. However, that shouldn’t be your primary motivation for reading this blog. I believe the concepts presented here are intriguing and innovative enough to keep you engaged, even if you don’t plan on developing your own programming language.

Inspired by our goal of creating a compact scripting language, we’ve decided to name it “Stork.” And the best part is, we don’t need to seek approval from any bureaucratic entity for the name!

This series will document the programming language’s development as it unfolds, so there might be a few bugs along the way. Rest assured, these will be addressed as we approach the series’ conclusion.

You can access the complete source code for everything discussed here at GitHub.

To finally address the question posed in this section’s title — no, we don’t necessarily need a new programming language. However, since this series aims to demonstrate how to create one using C++, we’ll be building one for illustrative purposes.

Tokenizer’s Little Helpers

As a programmer, I frequently encounter this issue: I need a key-value container with fast, logarithmic-time value retrieval. However, once the container is initialized, I don’t intend to add any new values. This makes std::map<Key, Value> (or std::unordered_map<Key, Value>) excessive, as they prioritize fast insertion as well.

While I’m generally against unnecessary optimization, in this instance, I feel like a significant amount of memory is wasted. Moreover, we’ll eventually need to implement a maximal munch algorithm on top of this container, and map isn’t the most suitable choice.

Another option is to use std::vector<std::pair<Key,Value>> and sort it after insertions. However, this approach compromises code readability, as we need to constantly remember that the vector is sorted. To address this, I developed a simple class that enforces this constraint.

(Note: All functions, classes, etc., are declared within the stork namespace. For better readability, I’ll omit this namespace.)

| |

The class implementation is quite straightforward. I chose to implement only the necessary methods for our purposes. As the underlying container is a vector, it can be initialized with a pre-populated vector or an initializer_list.

The tokenizer will process characters from an input stream. At this stage, the exact nature of this input stream is still undecided, so I’ll utilize std::function.

| |

We’ll adhere to the established conventions of C-style stream functions, such as getc, which returns an int instead of char, using negative values to signify the end of the file.

However, in a tokenizer, it’s beneficial to read a few characters ahead before determining the token type. To achieve this, I implemented a stream that allows us to “unread” characters.

| |

For brevity, I’ll omit the implementation details, which can be found on my GitHub page.

As you can see, push_back_stream is initialized using the get_character function. The overloaded operator() retrieves the next character, while push_back returns a character back to the stream. For error reporting, we have the convenience methods line_number and char_number.

It’s essential to remember that char_index represents the overall index of the character, not just its position within the current line. Otherwise, correctly implementing the push_back function would require storing all previous characters in a separate container.

Reserved Tokens

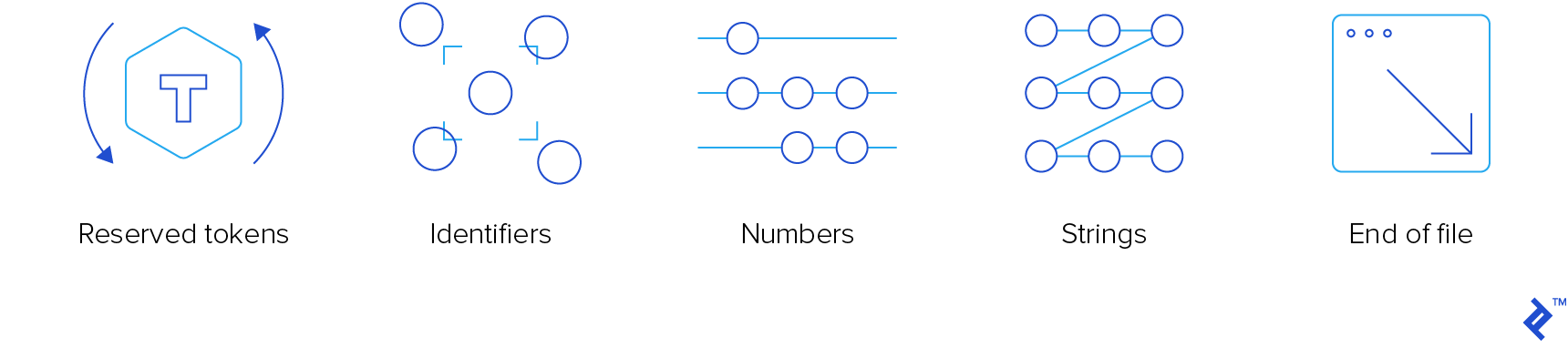

The tokenizer, the lowest-level compiler component, is responsible for reading the input and generating “tokens.” We’re interested in four token types:

- Reserved tokens

- Identifiers

- Numbers

- Strings

Comments are disregarded; the tokenizer will simply consume them without any notification.

To ensure widespread adoption and familiarity, we’ll embrace the widely recognized C-like syntax. Given its success in languages like C, C++, JavaScript, Java, C#, and Objective-C, it’s a safe bet for Stork. If you need a refresher, you can refer to one of our previous articles on C/C++ learning resources.

Let’s take a look at the reserved tokens enumeration:

| |

Enumeration members prefixed with “kw_” represent keywords. This prefix distinguishes them from C++ keywords that might share the same name. Members without a prefix are operators.

Most of them adhere to the C convention. Exceptions include:

-concat and concat_assign (.. and ..=), employed for concatenation

- idiv and idiv_assign (\ and \=), used for integer division

- kw_var for variable declaration

- kw_fun for function declaration

- kw_number for numeric variables

- kw_string for string variables

We’ll introduce additional keywords as needed.

One noteworthy new keyword is kw_elif. Personally, I’m not a fan of single-statement blocks (without curly braces). I rarely use them and don’t feel like they add much value, except in two specific scenarios:

- When I accidentally type a semicolon right after a

for,while, orifstatement before the intended block. Sometimes, I’m lucky and get a compile-time error. Other times, it results in a redundant if-statement and a block that always executes. Fortunately, years of coding have taught me to avoid this mistake, so it rarely happens anymore. Even Pavlov’s dog eventually learned. - When I have a chain of if-statements, comprising an if-block followed by one or more else-if blocks, and potentially an else-block. Technically, when I write

else if, it’s essentially anelseblock containing a single if-statement.

By introducing elif, we can potentially eliminate braceless statements entirely. However, the decision to allow or disallow them can wait for now.

Two helper functions assist in retrieving reserved tokens:

| |

The get_keyword function checks if a provided word corresponds to a reserved keyword. This “word” is a sequence of letters, digits, and underscores, beginning with either a letter or an underscore. If it matches a keyword, the function returns a reserved_token. Otherwise, the tokenizer assumes it’s an identifier.

The get_operator function attempts to read as many characters as possible, as long as the sequence forms a valid operator. If it reads more characters than necessary, it “unreads” any extra characters after the longest recognized operator.

To implement these functions efficiently, we require two lookups between string_view and reserved_keyword.

| |

Implementing get_keyword is fairly simple. However, get_operator requires a custom comparator that compares a given character with potential operators, considering only the n-th character.

| |

This is a standard lexical comparator that focuses solely on the character at position idx. If a string is shorter than idx, it treats it as if it has a null character at that position, which is considered lesser than any other character.

With this understanding of the maximal_munch_operator class, let’s examine the implementation of get_operator:

| |

Initially, we treat all operators as potential candidates. We then read characters one by one, filtering the candidates using equal_range and comparing only the n-th character. There’s no need to compare preceding characters as they have already been evaluated. Similarly, we avoid comparing subsequent characters as they are irrelevant at this stage.

If the resulting range is not empty, we check if the first element (if one exists) has any remaining characters. If such an element exists, it always appears at the beginning of the range due to the sorted nature of the lookup. Finding such an element indicates a match with a valid operator. We return the longest matched operator and “unread” any extra characters read beyond it.

Tokenizer

Given the heterogeneous nature of tokens, we define a convenient token class. This class utilizes std::variant to encapsulate different token types:

- Reserved token

- Identifier

- Number

- String

- End of file

| |

The identifier is a simple class with a single member of type std::string, introduced for convenience. Using distinct types for each alternative in std::variant enhances code clarity.

Now, let’s create the tokenizer. It will be a single function that accepts a push_back_stream and returns the next token.

The key is to branch based on the type of the first character read:

- If we encounter the end-of-file character, we return from the function.

- If the character is whitespace, we skip it.

- If it’s an alphanumeric character (letter, digit, or underscore), we read all consecutive characters of that type, including dots if the first character is a digit. If the first character is indeed a digit, we attempt to parse the sequence as a number. Otherwise, we use the

get_keywordfunction to determine if it’s a keyword or an identifier. - A quotation mark signifies a string; we’ll treat it as such, unescaping any escaped characters.

- If we encounter a slash character (

/), we check the next character. If it’s another slash or an asterisk (*), we skip the line/block comment. - For any other character, we utilize the

get_operatorfunction.

Here’s the implementation of the tokenize function. I’ll omit the implementation details of the functions it calls.

| |

Notice that the function pushes back characters it reads before calling lower-level functions. While this might seem like a performance hit, the impact is negligible, and it significantly improves the code readability of the lower-level functions.

Exceptions

During one of his rants about exceptions, my brother proclaimed:

“There are two types of people: those who throw exceptions and those who have to catch them. I always find myself in the latter group.”

I share his sentiment. I’m not particularly fond of exceptions; throwing them often makes code harder to maintain and understand.

However, I’ve decided to make an exception (pun intended) to this rule. Throwing exceptions in a compiler can be quite useful for unwinding from deeply nested compilation stages.

Here’s the exception implementation:

| |

Rest assured, I promise to catch all exceptions in the top-level code. For convenient pretty-printing, I’ve even added line_number and char_index members, along with a dedicated function:

| |

Wrapping Up

That concludes the first installment of our series. While it might not have been overly exciting, we now have a functional tokenizer and basic parsing error handling, both of which are essential building blocks for the more captivating topics covered in upcoming articles.

I hope this post sparked some ideas. If you’re eager to delve into the details, you can find them on my GitHub page.