In the previous installment of our series on parsing JSON data on macOS/iOS, we managed to significantly reduce the use of temporary objects in our parser-to-builder protocol. This resulted in a dramatic performance improvement, reaching 195 MB/s, nearly 20 times faster than Swift’s native JSONDecoder. Currently, the combined process of object creation and array population consumes 45% of the processing time. This seems like an unavoidable cost, but is it really?

Object Streaming

Although the goal is to create objects from the JSON data, nothing requires all of those objects to exist at the same time. Instead of waiting until the entire array has been parsed and then returning it, we can have the parser deliver each object to a designated target as soon as it is complete. This is controlled by setting a streamingThreshold, which specifies the depth within the JSON structure at which streaming begins.

```objc
-(void)decodeMPWDirectStream:(NSData*)json
{
    MPWMASONParser *parser=[[MPWMASONParser alloc] initWithClass:[TestClass class]];
    MPWObjectBuilder *builder=(MPWObjectBuilder*)[parser builder];
    [builder setStreamingThreshold:1];   // start streaming at depth 1 (here: the elements of the top-level array)
    [builder setTarget:self];            // each completed object is sent to -writeObject:
    [parser parsedData:json];
}
```
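To make the threshold idea concrete, here is a minimal sketch in plain C (which is also valid Objective-C). It is not the MPWObjectBuilder implementation: all type and function names are hypothetical, objects are flat records with a single integer field, and it assumes the threshold counts container nesting, so that a threshold of 1 streams the elements of a top-level array. The builder tracks the current depth and, whenever an object closes at the threshold level, hands it to a target callback that plays the role of the target's writeObject: method.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical sketch, not the MPWObjectBuilder code.
   Only flat objects with one integer field are modelled. */
typedef struct {
    long hi;                          /* stand-in for TestClass's hi */
} Record;

/* The streaming target, playing the role of -writeObject: */
typedef void (*TargetFn)(void *context, const Record *record);

typedef struct {
    int depth;                        /* current nesting depth */
    int streamingThreshold;           /* container depth whose elements are streamed */
    Record current;                   /* object currently being built */
    TargetFn target;                  /* receives each completed object */
    void *targetContext;
} StreamingBuilder;

static void builderBeginArray(StreamingBuilder *b) {
    b->depth++;
}

static void builderEndArray(StreamingBuilder *b) {
    assert(b->depth > 0);             /* catch unbalanced events */
    b->depth--;
}

static void builderBeginDictionary(StreamingBuilder *b) {
    b->depth++;
    b->current = (Record){ 0 };       /* start a fresh object */
}

static void builderWriteHi(StreamingBuilder *b, long value) {
    b->current.hi = value;
}

static void builderEndDictionary(StreamingBuilder *b) {
    assert(b->depth > 0);
    b->depth--;
    /* An object closing directly inside a container at the threshold depth
       is complete: deliver it now. A non-streaming builder would instead
       append it to a result array that keeps every object alive. */
    if (b->depth == b->streamingThreshold && b->target != NULL) {
        b->target(b->targetContext, &b->current);
    }
}

/* Example target, mirroring the counting done in -writeObject: */
typedef struct {
    long objCount;
    long hiCount;
} Totals;

static void countObject(void *context, const Record *record) {
    Totals *totals = context;
    totals->objCount++;
    totals->hiCount += record->hi;
}
```

Because each record is handed off as soon as its closing brace is seen, the target can start working long before the whole document has been parsed.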

When designating ourselves as the streaming target, we need to implement a writeObject: method to adhere to the Streaming protocol.

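```objc
-(void)writeObject:(TestClass*)anObject
{
    if (!first) {
        first=[MPWRusage current];   // record when the first object arrived
    }
    objCount++;                      // number of objects received so far
    hiCount+=anObject.hi;            // running sum of the hi values
}
```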

This method tracks the number of received objects, calculates the sum of their hi instance variables, and records the arrival time of the first object. How does this perform?

Remarkably well: 192 ms, or 229 MB/s. In addition, the first object arrives after roughly 700 µs, so applications can start processing data and giving user feedback in under a millisecond.

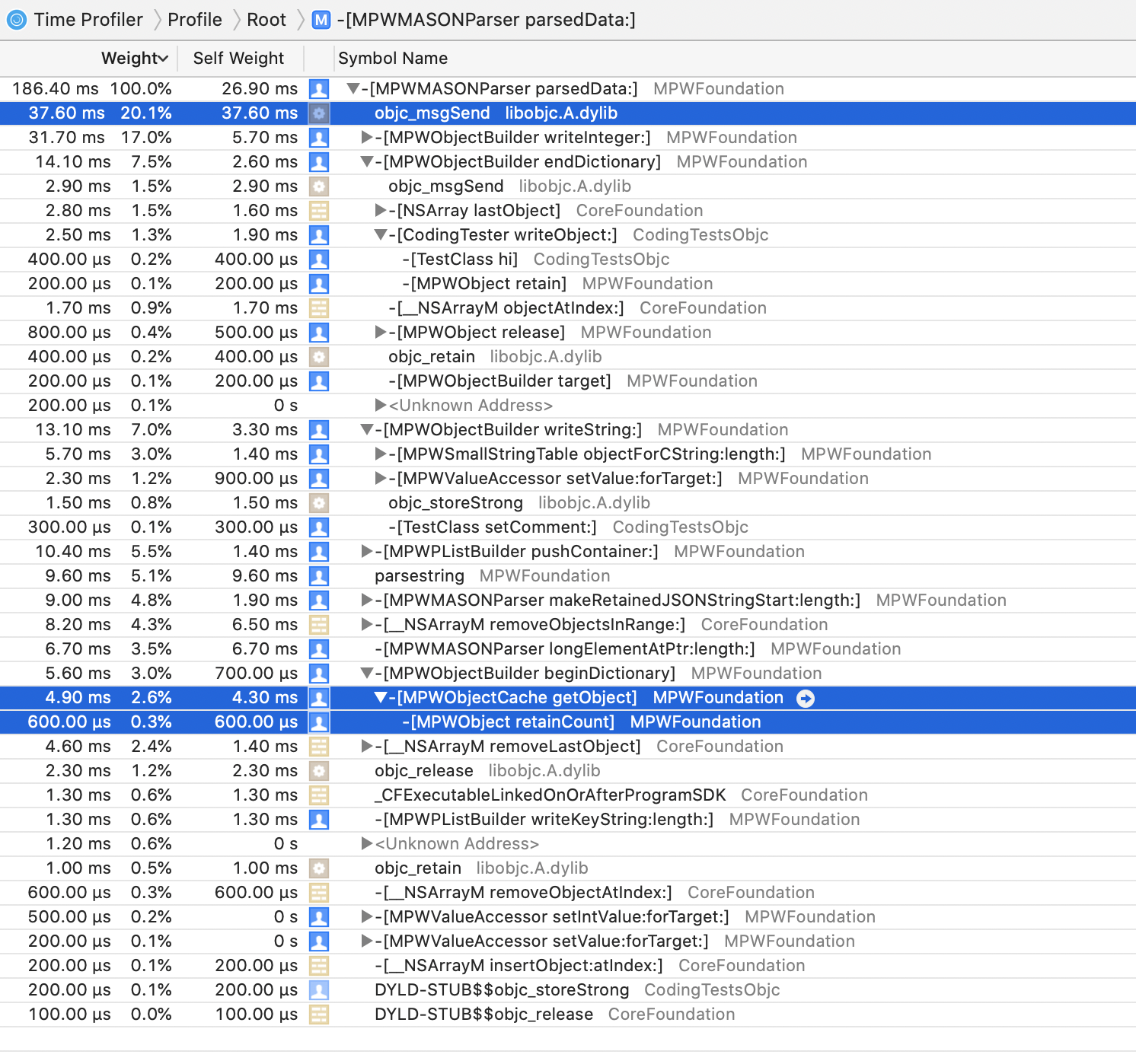

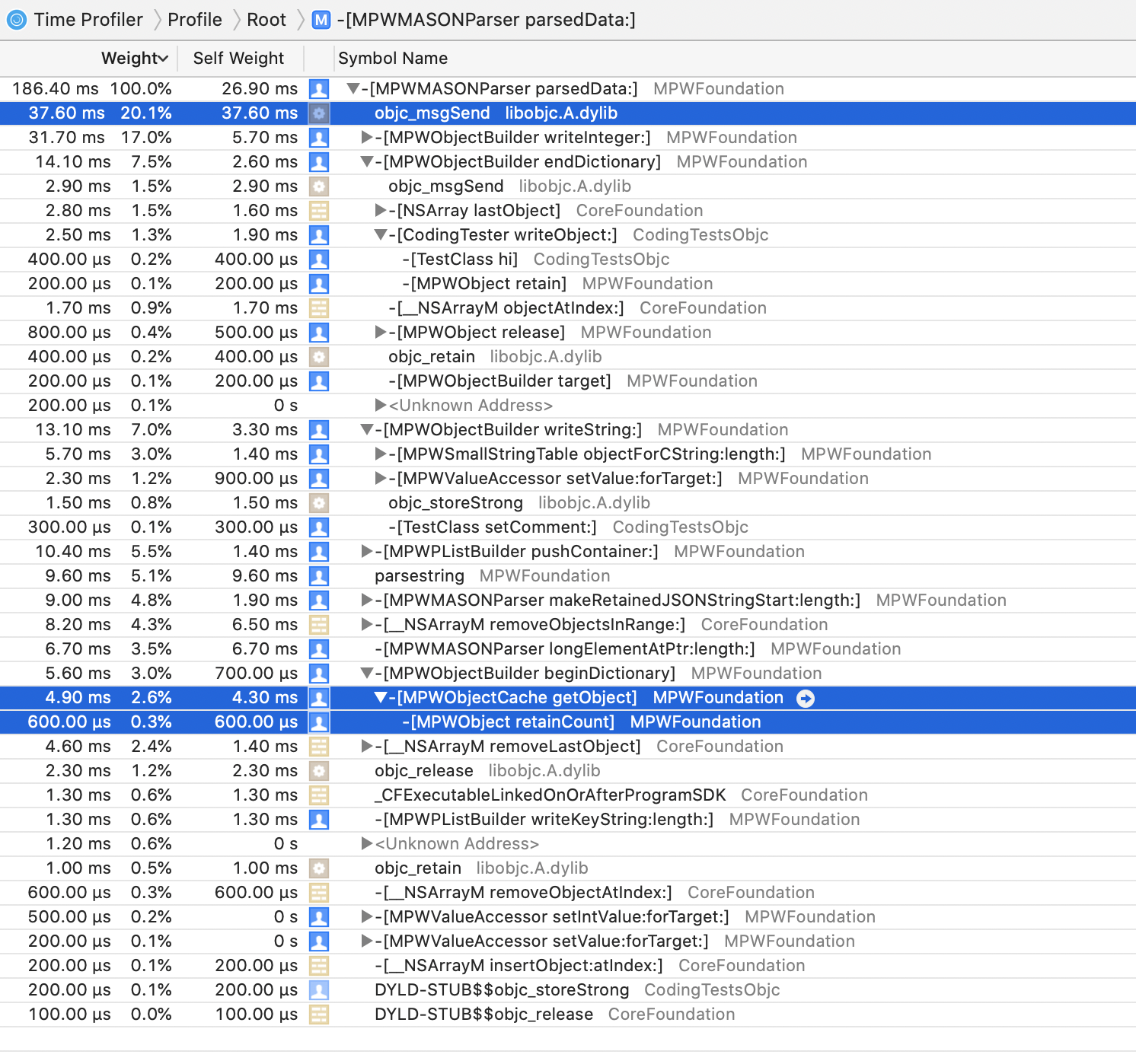

What stands out is where beginDictionary has moved in the profile: it now sits near the bottom, at a mere 2.6% of total runtime, or 4.3 ms.

How is this possible? We are still handling 1 million objects, so they still have to be created, even if they are processed one at a time. Or are we really creating all of them?

MPWObjectCache

The MPWObjectCache class uses a circular buffer of objects, so that objects can be reinitialized and reused once the application is done with them. I describe this technique in more detail in my book (yes, I wrote a book!), in a section that Pearson has kindly made freely available.

With such a cache, only enough objects to fill it are ever allocated. After that, the same objects are recycled over and over, at a cost of just a few function calls each. Note that objects the application has retained are not reused.
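The recycling idea can be sketched in plain C (also valid Objective-C). This is a hypothetical illustration of the technique, not the MPWObjectCache source: objects carry a manual reference count, the cache holds one reference per slot of a circular buffer, and a slot's object is reinitialized and handed out again only if nobody else holds a reference to it. If the caller has retained it, the cache drops its own reference and allocates a replacement for that slot.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical sketch of a circular object cache, not MPWObjectCache. */
typedef struct {
    int refCount;                     /* manual reference count */
    long hi;                          /* example payload */
} Object;

static Object *objectRetain(Object *o) {
    o->refCount++;
    return o;
}

static void objectRelease(Object *o) {
    if (--o->refCount == 0) {
        free(o);
    }
}

typedef struct {
    Object **slots;                   /* circular buffer of cached objects */
    size_t capacity;
    size_t next;                      /* next slot to hand out */
    size_t allocations;               /* objects actually allocated so far */
} ObjectCache;

static void cacheInit(ObjectCache *c, size_t capacity) {
    assert(capacity > 0);
    c->slots = calloc(capacity, sizeof *c->slots);
    if (c->slots == NULL) abort();
    c->capacity = capacity;
    c->next = 0;
    c->allocations = 0;
}

static Object *cacheAllocate(ObjectCache *c) {
    Object *o = malloc(sizeof *o);
    if (o == NULL) abort();
    o->refCount = 1;                  /* the cache's own reference */
    c->allocations++;
    return o;
}

/* Hands out the object in the next slot. It stays owned by the cache and
   is recycled after `capacity` further requests unless the caller retains it. */
static Object *cacheGetObject(ObjectCache *c) {
    Object **slot = &c->slots[c->next];
    c->next = (c->next + 1) % c->capacity;
    if (*slot == NULL) {
        *slot = cacheAllocate(c);     /* first pass: fill the cache */
    } else if ((*slot)->refCount > 1) {
        objectRelease(*slot);         /* retained elsewhere: let it go */
        *slot = cacheAllocate(c);
    }
    (*slot)->hi = 0;                  /* reinitialize instead of reallocating */
    return *slot;
}

static void cacheFree(ObjectCache *c) {
    for (size_t i = 0; i < c->capacity; i++) {
        if (c->slots[i] != NULL) objectRelease(c->slots[i]);
    }
    free(c->slots);
}
```

With a capacity of 4, a thousand requests cause only four allocations. Retaining one object forces exactly one extra allocation when its slot comes around again.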

Column stores, or structures of arrays

Another interpretation of dematerialization is to store the data in columnar form, as a structure of arrays (SoA) instead of the usual array of structures (AoS). (Credit for this suggestion goes to Holgi.)

This requires a specialized builder, MPWArraysBuilder. It manages a set of mutable arrays, one per key. When it receives a value, it looks up the array for the current key and appends the value to it:

```objc
-(void)writeInteger:(long)number
{
    if ( _arrayMap && keyStr) {
        // look up the column for the current key by its raw bytes and length
        MPWIntArray *a=OBJECTFORSTRINGLENGTH(_arrayMap, keyStr, keyLen);
        [a addInteger:(int)number];
        keyStr=NULL;                 // the key has been consumed
    }
}

-(void)writeString:(id)aString
{
    if ( _arrayMap && keyStr) {
        NSMutableArray *a=OBJECTFORSTRINGLENGTH(_arrayMap, keyStr, keyLen);
        [a addObject:aString];
        keyStr=NULL;
    }
}
```

Integer values are stored in an MPWIntArray, strings in a standard NSMutableArray.

This strategy yields even better results, achieving 155 ms and 284 MB/s.
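A stripped-down version of such a column-store builder shows why the layout helps. It is written in plain C with hypothetical names rather than the MPWArraysBuilder API: each key owns one contiguous, growable array of ints, writing a key only records it (like keyStr and keyLen above), and the integer that follows is appended to that key's column.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical sketch of a structure-of-arrays builder, not MPWArraysBuilder. */
typedef struct {
    const char *name;                 /* key this column collects */
    int *values;                      /* contiguous storage for one column */
    size_t count;
    size_t capacity;
} IntColumn;

typedef struct {
    IntColumn *columns;
    size_t columnCount;
    const char *keyStr;               /* pending key (not NUL-terminated) */
    size_t keyLen;
} ArraysBuilder;

static IntColumn *columnForKey(ArraysBuilder *b, const char *key, size_t len) {
    for (size_t i = 0; i < b->columnCount; i++) {
        IntColumn *col = &b->columns[i];
        if (strlen(col->name) == len && memcmp(col->name, key, len) == 0) {
            return col;
        }
    }
    return NULL;                      /* no column for this key: value is dropped */
}

static void columnAppend(IntColumn *col, int value) {
    if (col->count == col->capacity) {
        size_t newCapacity = col->capacity ? col->capacity * 2 : 16;
        int *grown = realloc(col->values, newCapacity * sizeof *grown);
        if (grown == NULL) abort();
        col->values = grown;
        col->capacity = newCapacity;
    }
    col->values[col->count++] = value;
}

static void builderWriteKey(ArraysBuilder *b, const char *key, size_t len) {
    assert(key != NULL);
    b->keyStr = key;
    b->keyLen = len;
}

static void builderWriteInteger(ArraysBuilder *b, long number) {
    if (b->keyStr != NULL) {
        IntColumn *col = columnForKey(b, b->keyStr, b->keyLen);
        if (col != NULL) columnAppend(col, (int)number);
        b->keyStr = NULL;             /* each key is consumed by one value */
    }
}
```

Summing all hi values then means walking one dense array of ints rather than visiting a million separate objects.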

Other options

These are not the only options. Interestingly, the protocol between parser and builder was originally designed for a different purpose: it extends the Streaming protocol to handle disassembled hierarchies, so that a tree structure can be taken apart, sent through a pipeline, and faithfully reassembled at the other end.

This protocol is used in Polymorphic Write Streams, which enable Standard Object Out, as demonstrated at DLS ’19. An earlier version of the idea was presented at Macoun 2018.
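The disassembled-hierarchy idea can be illustrated with a small plain-C sketch. The event types and functions are hypothetical and much simpler than the real protocol. A tree is flattened into a linear stream of begin/end events, a pipeline stage transforms that stream without knowing anything about the tree, and a stack-based builder reassembles the same shape at the far end.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical sketch: flatten a tree into events, transform, reassemble. */
enum { MAX_CHILDREN = 4, MAX_DEPTH = 16 };

typedef struct Node {
    int value;
    int childCount;
    struct Node *children[MAX_CHILDREN];
} Node;

typedef enum { EV_BEGIN, EV_END } EventKind;

typedef struct {
    EventKind kind;
    int value;                        /* only meaningful for EV_BEGIN */
} Event;

/* Disassemble: pre-order walk emitting BEGIN(value) ... END.
   The caller must provide room for two events per node. */
static size_t disassemble(const Node *n, Event *out, size_t pos) {
    out[pos++] = (Event){ EV_BEGIN, n->value };
    for (int i = 0; i < n->childCount; i++)
        pos = disassemble(n->children[i], out, pos);
    out[pos++] = (Event){ EV_END, 0 };
    return pos;
}

/* A pipeline stage that only ever sees the flat event stream. */
static void doubleValues(Event *events, size_t count) {
    for (size_t i = 0; i < count; i++)
        if (events[i].kind == EV_BEGIN) events[i].value *= 2;
}

/* Reassemble: a stack of currently open nodes restores the hierarchy. */
static Node *reassemble(const Event *events, size_t count) {
    Node *stack[MAX_DEPTH];
    int depth = 0;
    Node *root = NULL;
    for (size_t i = 0; i < count; i++) {
        if (events[i].kind == EV_BEGIN) {
            Node *n = calloc(1, sizeof *n);
            if (n == NULL) abort();
            n->value = events[i].value;
            if (depth > 0) {
                Node *parent = stack[depth - 1];
                assert(parent->childCount < MAX_CHILDREN);
                parent->children[parent->childCount++] = n;
            } else {
                root = n;
            }
            assert(depth < MAX_DEPTH);
            stack[depth++] = n;
        } else {
            assert(depth > 0);
            depth--;                  /* node complete: pop it */
        }
    }
    return root;
}

static void freeTree(Node *n) {
    for (int i = 0; i < n->childCount; i++) freeTree(n->children[i]);
    free(n);
}
```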

Outlook

We are reaching the point of diminishing returns, particularly for a proof-of-concept implementation. There are still opportunities for optimization, though, such as caching IMPs to avoid repeated objc_msgSend() dispatch and eliminating redundant processing of input characters.

Beyond raw performance, a few minor correctness issues still need to be addressed, including proper handling of JSON escape sequences in keys and correct management of the hierarchy. None of this is particularly complex, but it requires careful implementation, for which the XML handling in the superclass can serve as a model.
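Handling escapes in keys could look roughly like the following plain-C sketch. It assumes the parser hands over the raw key bytes between the quotes (as with keyStr and keyLen above), checks for a backslash first so that the common escape-free key keeps its fast path, and only then decodes the escapes into a buffer as UTF-8. The function names are hypothetical, and surrogate pairs are rejected rather than combined to keep the sketch short.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Fast-path check: most keys contain no escapes and can be used as-is. */
static int keyNeedsUnescaping(const char *in, size_t len) {
    return memchr(in, '\\', len) != NULL;
}

static int hexValue(char c) {
    if (c >= '0' && c <= '9') return c - '0';
    if (c >= 'a' && c <= 'f') return c - 'a' + 10;
    if (c >= 'A' && c <= 'F') return c - 'A' + 10;
    return -1;
}

/* Decodes the escapes in a JSON key into `out` as UTF-8. Returns the decoded
   length, or -1 if the input is malformed or `out` is too small.
   Surrogates (U+D800..U+DFFF) are rejected rather than combined. */
static long unescapeKey(const char *in, size_t len, char *out, size_t outSize) {
    assert(in != NULL && out != NULL);
    size_t o = 0;
    for (size_t i = 0; i < len; i++) {
        char c = in[i];
        if (c == '\\') {
            if (++i >= len) return -1;            /* dangling backslash */
            switch (in[i]) {
                case '"':  c = '"';  break;
                case '\\': c = '\\'; break;
                case '/':  c = '/';  break;
                case 'b':  c = '\b'; break;
                case 'f':  c = '\f'; break;
                case 'n':  c = '\n'; break;
                case 'r':  c = '\r'; break;
                case 't':  c = '\t'; break;
                case 'u': {
                    if (i + 4 >= len) return -1;  /* need four hex digits */
                    unsigned cp = 0;
                    for (size_t k = 1; k <= 4; k++) {
                        int h = hexValue(in[i + k]);
                        if (h < 0) return -1;
                        cp = cp * 16 + (unsigned)h;
                    }
                    i += 4;
                    if (cp >= 0xD800 && cp <= 0xDFFF) return -1;
                    if (o + 3 > outSize) return -1;  /* conservative: up to 3 bytes */
                    if (cp < 0x80) {
                        out[o++] = (char)cp;
                    } else if (cp < 0x800) {
                        out[o++] = (char)(0xC0 | (cp >> 6));
                        out[o++] = (char)(0x80 | (cp & 0x3F));
                    } else {
                        out[o++] = (char)(0xE0 | (cp >> 12));
                        out[o++] = (char)(0x80 | ((cp >> 6) & 0x3F));
                        out[o++] = (char)(0x80 | (cp & 0x3F));
                    }
                    continue;                     /* bytes already written */
                }
                default:
                    return -1;                    /* not a valid JSON escape */
            }
        }
        if (o >= outSize) return -1;
        out[o++] = c;
    }
    return (long)o;
}
```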

Another area to explore is integration with Swift in general (a first attempt at bridging ran into problems with method resolution) and with the Codable protocol in particular. Codable requires a different strategy: instead of instantiating objects directly and setting their properties, the parser has to build a temporary structure from which each object then decodes itself via a decoder. The MAX superclass already uses this approach, so it offers a possible roadmap. The main challenge is reconciling the hierarchical, recursive nature of this approach with the streaming nature of the protocol.

A deeper analysis of the rationale and mechanics behind this approach is another possible direction. So your feedback is very welcome: which aspects interest you most? Would a production-ready version be useful to you? Would you like to see more aggressive optimizations? (The ones explored so far have been fairly conservative.)

Note

My work isn't limited to helping Apple: I'm also available to support you and your company or team with performance and agile coaching, workshops, and consulting. Reach me at info at metaobject.com.

TOC

Somewhat Less Lethargic JSON Support for iOS/macOS, Part 1: The Status Quo

Somewhat Less Lethargic JSON Support for iOS/macOS, Part 2: Analysis

Somewhat Less Lethargic JSON Support for iOS/macOS, Part 3: Dematerialization

Equally Lethargic JSON Support for iOS/macOS, Part 4: Our Keys are Small but Legion

Less Lethargic JSON Support for iOS/macOS, Part 5: Cutting out the Middleman

Somewhat Faster JSON Support for iOS/macOS, Part 6: Cutting KVC out of the Loop

Faster JSON Support for iOS/macOS, Part 7: Polishing the Parser

Faster JSON Support for iOS/macOS, Part 8: Dematerialize All the Things!

Beyond Faster JSON Support for iOS/macOS, Part 9: CSV and SQLite