How has machine learning truly affected SEO? Over the past year, this question has been a significant point of contention in the SEO community.

I have to admit, I’ve become somewhat preoccupied with the concept of machine learning. Based on my observations, I believe that RankBrain and/or other machine learning components within Google’s core algorithm are progressively favoring web pages that demonstrate strong user engagement.

In essence, Google’s goal is to identify exceptional web pages, those that exhibit outstanding user engagement metrics such as organic search click-through rate (CTR), dwell time, bounce rate, and conversion rate. The search giant then aims to elevate these high-performing pages in organic search rankings.

After all, happier, more engaged users translate to more effective search results, wouldn’t you agree?

So, in a nutshell, you can think of machine learning as Google’s very own Unicorn Detector.

The Connection Between Machine Learning & Click-Through Rate

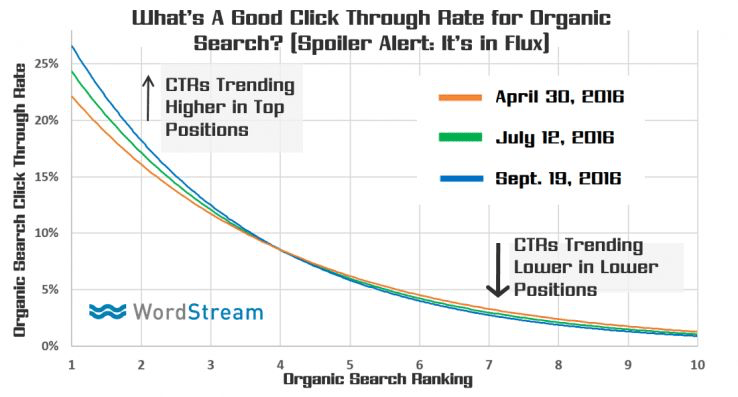

Numerous SEO professionals and thought leaders have asserted that it’s completely impossible to find any tangible proof of Google RankBrain’s existence in real-world scenarios. That’s absurd. All it takes is running SEO experiments and being more strategic in how you design and execute them.

That’s precisely why I previously ran an SEO test focused on CTR over an extended period, hoping to find evidence of machine learning. The results were intriguing: pages with higher organic search CTRs experienced a boost in SERP rankings and received a greater number of clicks.

However, click-through rate is just one indicator of the impact of machine learning algorithms. Let’s now shift our attention to another crucial engagement metric: long clicks, which represent visits that remain on a website for an extended duration after originating from the SERP.

Using Time on Site as a Substitute for Long Clicks

Are you still not entirely convinced that long clicks have a bearing on organic search rankings (either directly or indirectly)? Well, I’ve devised a remarkably simple method for you to verify the significance of long clicks yourself, all while uncovering the influence of machine learning algorithms.

In this experiment, we’ll be examining time on page. To clarify, time on page is not synonymous with dwell time or a long click (which measures how long visitors stay on your site before clicking the back button to return to the search results they came from). Unfortunately, we can’t track long clicks or dwell time in Google Analytics. Only Google has access to that data.

In reality, time on page itself isn’t our primary focus. We care about it only because it very likely correlates with the metrics we actually want to measure.

The Relationship Between Time on Site & Organic Traffic (Pre-RankBrain)

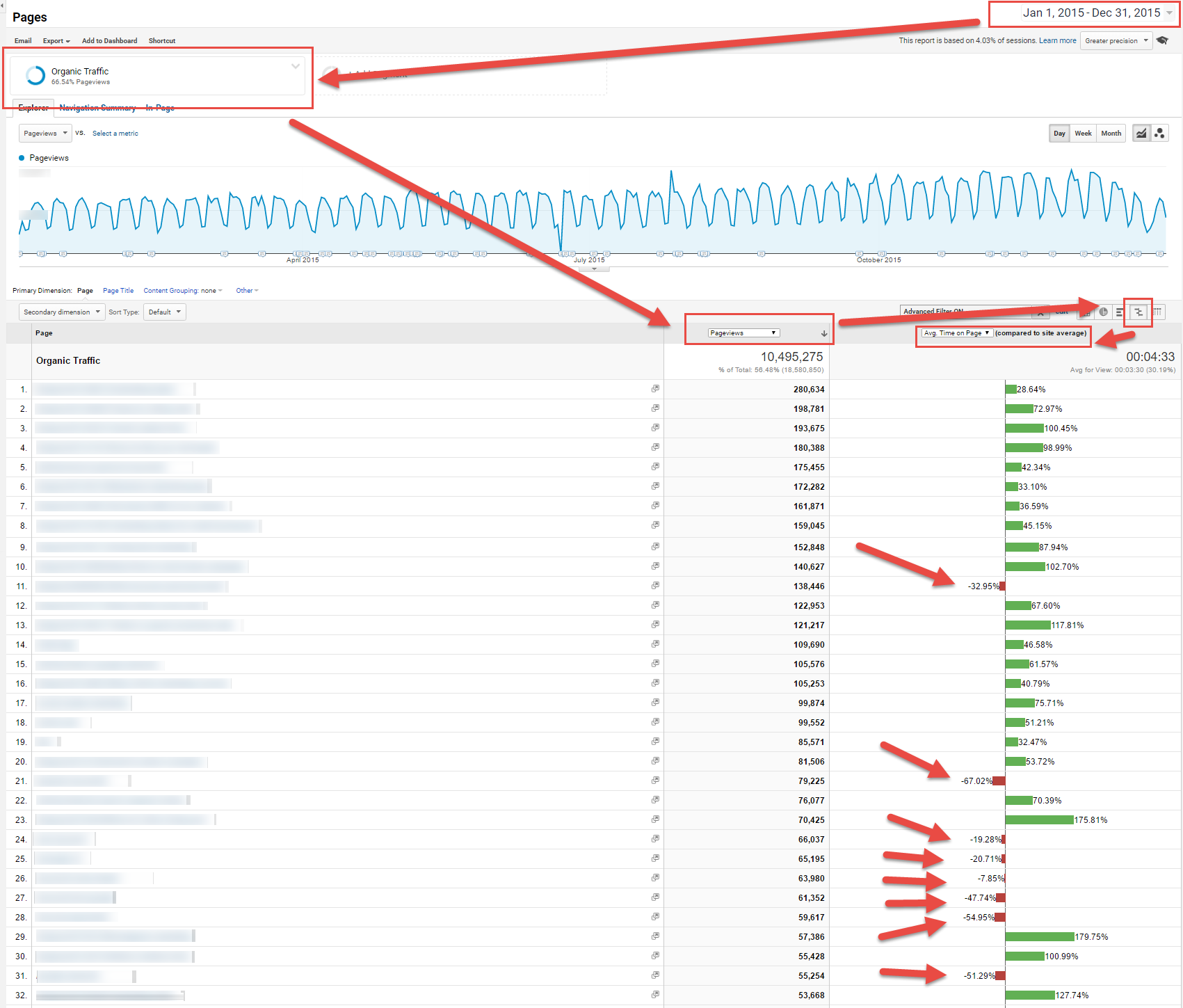

To begin, navigate to your analytics account. Select a time frame before the rollout of the new algorithms (let’s say 2015). Within your content report, apply a segment to isolate your organic traffic, then sort the results by pageviews. Next, execute a Comparison Analysis to compare your pageviews against the average time on page. Your results should resemble the following:

These 32 pages were the top performers in driving organic traffic in 2015. While approximately two-thirds of these pages boast above-average time on site, the remaining third fall below the average. Notice those red arrows? They represent what we call “donkeys” – pages that were ranking well in organic search but, frankly, probably didn’t deserve to be there, at least not for the search queries that were generating the most traffic. Their time on page was significantly lower – half or even a third – compared to the site average.
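If you export the report rather than eyeballing the chart, the same check can be sketched in a few lines of Python. This is a minimal sketch, not a feature of any analytics product: the `PageStats` record, the `find_donkeys` helper, the page paths, and every number below are made up for illustration, and it assumes you already have organic pageviews and average time on page (in seconds) for each page, plus a site-wide average.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """One row of a hypothetical exported content report (organic segment)."""
    path: str
    organic_pageviews: int
    avg_time_on_page: float  # seconds


def find_donkeys(pages, site_avg_time, top_n=32):
    """Return the top_n pages by organic pageviews whose average
    time on page is below the site average."""
    top = sorted(pages, key=lambda p: p.organic_pageviews, reverse=True)[:top_n]
    return [p for p in top if p.avg_time_on_page < site_avg_time]


# Made-up rows, for illustration only.
pages = [
    PageStats("/guide", 12000, 310.0),
    PageStats("/tool", 9000, 85.0),
    PageStats("/blog/post-a", 7000, 240.0),
    PageStats("/blog/post-b", 500, 40.0),
]
SITE_AVG = 180.0  # assumed site-wide average time on page, in seconds

for page in find_donkeys(pages, SITE_AVG, top_n=3):
    print(page.path, page.avg_time_on_page)
```

With these sample rows, only `/tool` is flagged: it is among the top three pages by organic pageviews but sits well below the assumed site average. `/blog/post-b` has low time on page too, but it falls outside the top three, so it is not counted.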

The Relationship Between Time on Site & Organic Traffic (Post-RankBrain)

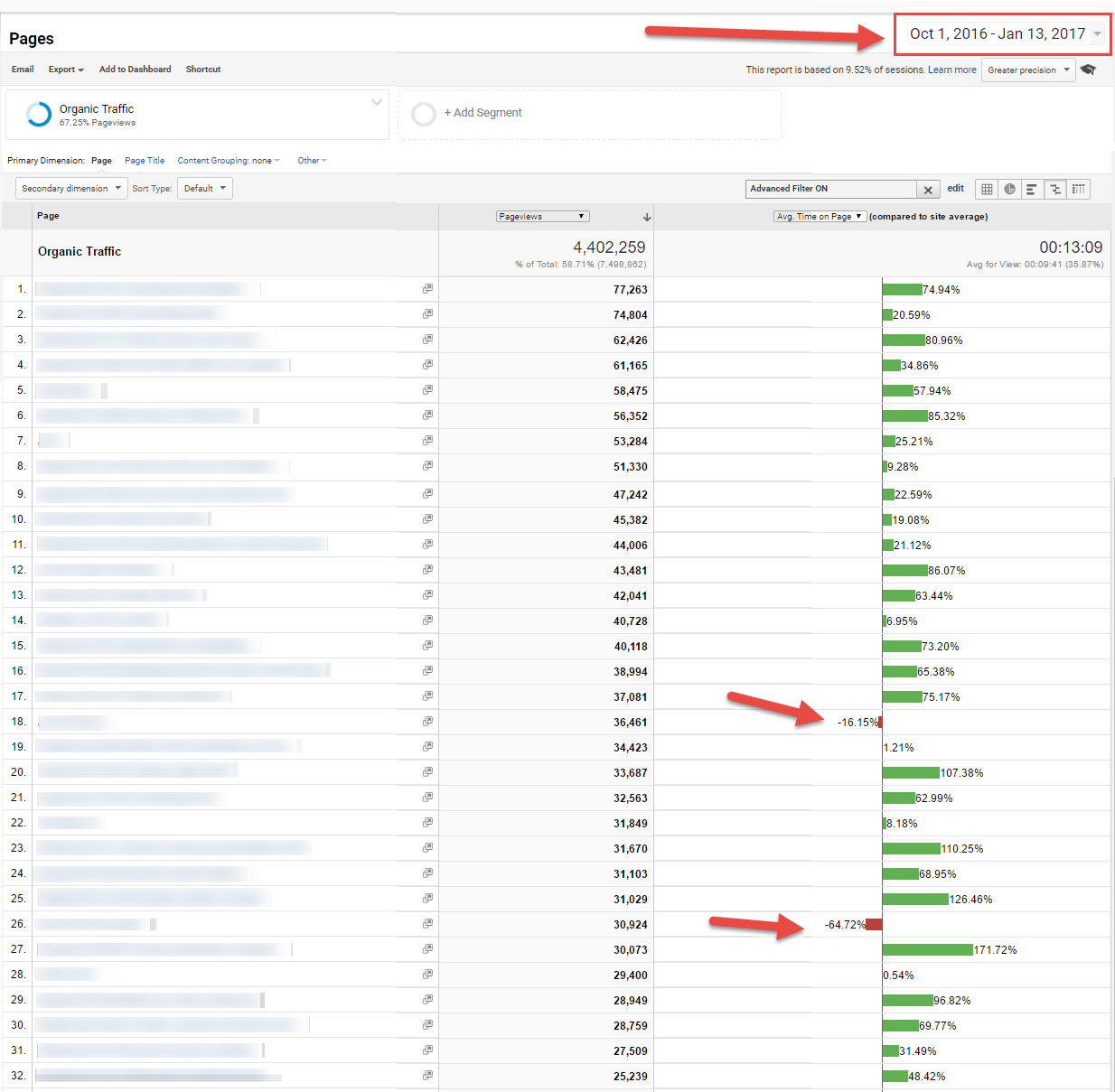

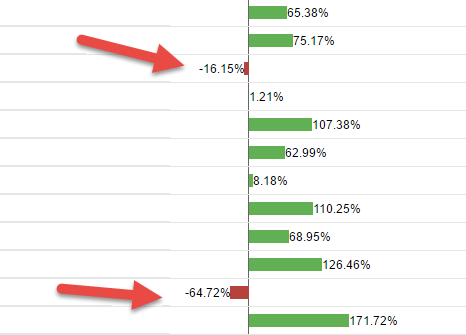

Now, let’s replicate the analysis, but this time using a more recent timeframe when we know Google’s machine learning algorithms were operational (e.g., the past three to four months). Perform the same comparison analysis, and you’ll observe the following:

Pay close attention to what happens when we examine the organic traffic data. With the exception of two pages, all our top-performing pages now exhibit above-average time on page. This is a remarkable finding. What could be the reason behind this shift?

Is There a Correlation Between Longer Dwell Time & Higher Search Rankings?

It appears that Google’s machine learning algorithms have effectively identified those pages that were ranking well in 2015 despite not truly earning their positions. Based on these observations, it certainly seems like Google is rewarding pages that demonstrate higher dwell time with more prominent search rankings.

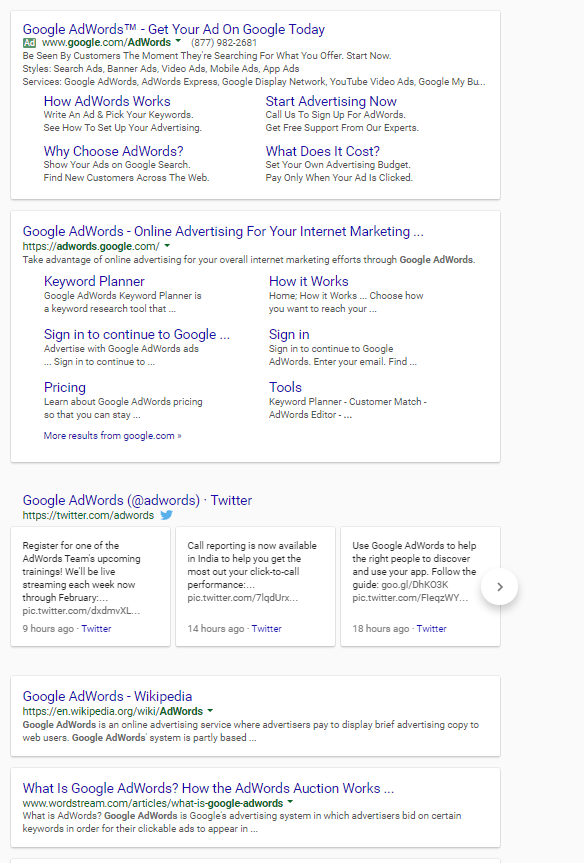

Google successfully detected a majority of the “donkeys” (approximately 80 percent!) and now, almost all the pages driving the most substantial organic traffic are time-on-site “unicorns.”

While I won’t disclose the specific pages on the nexus-security site that were identified as “donkeys,” I can reveal that some of those pages were created with the sole purpose of attracting traffic (mission accomplished!). However, their alignment with search intent was less than ideal. It’s likely that someone else created a page that better addressed the users’ search intent.

Here’s a concrete example: Our AdWords Grader tool used to hold a page 1 ranking for the query “google adwords” (which has an enormous search volume – over 300,000 searches per month!). However, the intent match for this query was low – the majority of users searching for this phrase are simply using it as a navigational keyword to reach the AdWords website. A small percentage might be seeking more information about Google AdWords and its functionality, essentially treating Google as a substitute for Wikipedia. There’s no indication from the query that users are looking for a tool to diagnose issues with their AdWords accounts.

And guess what? In 2015, the Grader page was among those top 30 pages but exhibited below-average time on site. At some point, Google experimented with a different result in place of the Grader – our infographic explaining how Google AdWords works. This infographic continues to rank on page 1 for that specific search query and demonstrates a much stronger alignment with the informational intent behind the keyword.
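To put a number on how many of your own “donkeys” have fallen away, you can compare the below-average pages from the earlier period against the top pages from the recent one. The following is a rough sketch: `donkey_dropout_rate` is a hypothetical helper, the page paths are invented, and a page leaving the top-pages list is only a proxy for a ranking change, not proof of one.

```python
def donkey_dropout_rate(earlier_donkeys, later_top_pages):
    """Share of earlier below-average pages that no longer appear
    among the later period's top pages (0.0 to 1.0)."""
    earlier = set(earlier_donkeys)
    if not earlier:
        return 0.0  # nothing to compare
    dropped = earlier - set(later_top_pages)
    return len(dropped) / len(earlier)


# Made-up page paths, for illustration only.
donkeys_earlier = ["/tool-a", "/landing-b", "/post-c", "/post-d"]
top_pages_recent = ["/guide", "/post-d", "/infographic", "/post-e"]

rate = donkey_dropout_rate(donkeys_earlier, top_pages_recent)
print(f"{rate:.0%} of the earlier donkeys left the top-pages list")
```

In this invented example, three of the four earlier donkeys are missing from the recent list, so the script reports 75%.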

Important Considerations Regarding the Data

To be perfectly clear, I acknowledge that these analytics reports don’t provide direct evidence of a decline in rankings. There could be alternative explanations for the differences between the two reports. Perhaps we published a significant amount of new, exceptional content that ranks for higher-volume keywords, and this new content simply displaced the time-on-site “donkeys” from 2015. Moreover, certain types of pages might naturally have lower time on site for entirely valid reasons (for instance, they might efficiently deliver the information users are seeking).

However, we know internally that several of our pages with below-average time on site have indeed experienced ranking drops (at least for certain keywords) over the past couple of years.

Regardless of these considerations, it’s compelling to observe that the pages driving the most organic traffic overall (the nexus-security site comprises thousands of pages) almost all exhibit above-average time on site. This suggests that pages with exceptional, “unicorn-level” engagement metrics are likely to be the most valuable assets to your business in the long run.

This report also unveiled a critical insight for us: pages with below-average time on site are our most vulnerable from an SEO perspective. In other words, those two remaining pages in the second chart that still exhibit below-average time on site are the most susceptible to losing organic rankings and traffic in the evolving landscape of machine learning.

The beauty of this report lies in its simplicity – you don’t need to conduct extensive research or hire an SEO to perform a comprehensive audit. Just access your analytics data and examine it yourself. You should be able to compare historical data from a significant time ago to more recent activity (the last three to four months).

Interpreting the Findings

Think of this report as your personalized “donkey detector.” It highlights the content that’s most vulnerable to potential future losses in incremental traffic and search rankings due to Google’s algorithm updates. Here’s the key takeaway regarding how machine learning operates: it doesn’t wipe out all your traffic overnight (unlike algorithms like Panda or Penguin). Its impact is more gradual and incremental.

So, what’s the best course of action if you discover a significant amount of “donkey” content? Prioritize the pages that are most at risk – those with below-average or borderline time on site. Unless there’s a genuinely compelling reason for these pages to have low time on site, place them at the top of your list for rewriting or optimization to ensure they align more effectively with user intent.

Now it’s your turn! Analyze your own data and see if your findings support the notion that time-on-site plays a role in your organic search rankings. Don’t simply take my word for it. Run your own reports and share your discoveries with me.