In the past year, Daniel Pérez Rubio, a Toptal data scientist specializing in natural language processing (NLP), has dedicated his efforts to developing sophisticated language models like BERT and GPT. These models form the foundation of widely used generative AI technologies like ChatGPT, developed by OpenAI. This article recaps a recent informal question-and-answer session on Slack where Rubio addressed inquiries about AI and NLP from fellow Toptal engineers globally.

This detailed Q&A will not only explain what an NLP engineer does but also delve into topics like fundamental NLP concepts, suggested technologies, advanced language models, business and product considerations, and the future trajectory of NLP. NLP practitioners from all backgrounds can find valuable takeaways from the discussion.

Note: For clarity and conciseness, minor edits have been made to some questions and answers.

Starting Out: NLP Essentials

What steps should a developer take to transition from traditional application development to professional machine learning (ML) work? —L.P., Córdoba, Argentina

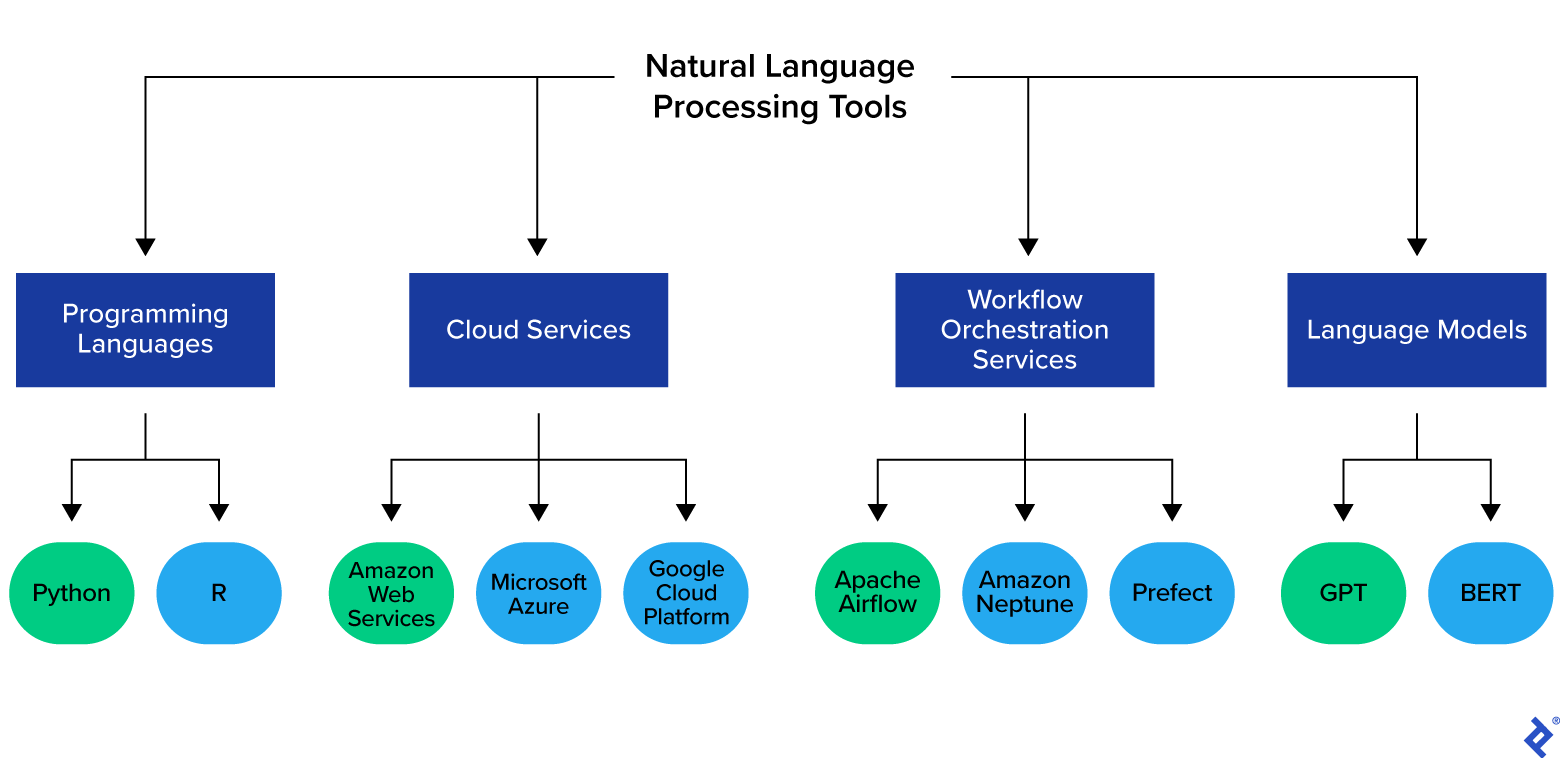

While a strong theoretical understanding is paramount in data science, practical experience with new tools is also essential. I recommend exploring online courses and actively applying the acquired knowledge. Python is my preferred programming language due to its similarity to other high-level languages, supportive community, and extensive library documentation, which offers further learning opportunities.

How familiar are you with linguistics as a formal discipline, and does it benefit NLP? What about the role of information theory (e.g., entropy, signal processing, cryptanalysis)? —V.D., Georgia, United States

As a telecommunications graduate, I use information theory as the foundation of my analytical approaches. Given the strong connection between data science and information theory, my background has significantly shaped my professional journey. Although I lack formal training in linguistics, my passion for language and communication has led me to learn through online resources and practical experiences, enabling me to collaborate effectively with linguists on NLP solutions.

Could you elaborate on BERT and GPT models, including real-world examples? —G.S.

To put it simply, and there is plenty of excellent literature on this subject, BERT and GPT are language models. They learn from vast amounts of text data, mastering tasks like text infilling, which makes them suitable for conversational AI applications. You may have heard that these language models are so powerful that they can excel in unexpected areas, such as solving math problems.
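As a rough illustration of what "learning from text" can mean, the sketch below builds the two kinds of training examples usually associated with these model families: masked infilling (the BERT-style objective) and next-token prediction (the GPT-style objective). It is a toy under stated assumptions: the function names are hypothetical, and real models operate on subword tokens with neural networks rather than on whitespace-split words.

```python
# Toy illustration only: real models use subword tokenizers and neural networks.

MASK = "[MASK]"  # placeholder symbol standing in for the hidden word


def masked_example(tokens, position):
    """Infilling-style example: hide one token, keep context on both sides."""
    inputs = list(tokens)
    target = inputs[position]
    inputs[position] = MASK
    return inputs, target


def next_token_examples(tokens):
    """Left-to-right examples: predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


sentence = "language models learn from text".split()

# One infilling example: the model sees both left and right context.
masked_inputs, masked_target = masked_example(sentence, 2)
print(masked_inputs, "->", masked_target)

# Several next-token examples: the model only sees what came before.
for context, target in next_token_examples(sentence):
    print(context, "->", target)
```

The difference in visible context is one reason infilling-style models are often used for understanding tasks, while left-to-right models lend themselves to text generation.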

Useful NLP Tools and Practices

Beyond BERT and GPT, what other language models do you recommend? —R.K., Korneuburg, Austria

Based on my experience, GPT-2 (with GPT-4 being the latest version) remains a top contender. It strikes a good balance between efficiency and capability for many tasks.

For text analysis, do you favor Python or R? —V.E.

My bias leans heavily toward Python for everything, not just data science! It boasts a fantastic community and a wealth of high-quality libraries. I’m familiar with R, but its idiosyncrasies can make it challenging to use in production. Its statistical tooling is stronger than Python’s, although Python offers excellent open-source projects that narrow the gap.

Do you have a preferred cloud provider (e.g., AWS, Azure, Google Cloud) for building and deploying models? —D.B., Traverse City, United States

That’s an easy one! My dislike for vendor lock-in makes AWS my clear favorite.

Do you suggest using workflow orchestration tools like Prefect, Airflow, Luigi, or Neptune for NLP pipelines, or do you prefer custom solutions? —D.O., Registro, Brazil

While I’m familiar with Airflow, I only use it to manage multiple processes, especially when I anticipate adding new ones or modifying pipelines. These tools are particularly valuable for big data tasks involving extensive extract, transform, and load (ETL) operations.

How about less complex pipelines? The most common approach I encounter is building a web API with Flask or FastAPI and having a front end interact with it. Any alternative recommendations? —D.O., Registro, Brazil

I strive for simplicity, avoiding unnecessary complexity that could cause issues later. If an API is essential, I prioritize robustness using the best tools available. I recommend combining FastAPI with a [Gunicorn server and Uvicorn workers](https://fastapi.tiangolo.com/deployment/server-workers/#gunicorn-with-uvicorn-workers). This setup works incredibly well!

However, I typically avoid starting with architectures like microservices. My approach emphasizes modularity, readability, and clear documentation. If a microservices transition becomes necessary, you can then address it, viewing it as a positive sign that your product’s importance warrants the effort.
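For readers who want to try the FastAPI, Gunicorn, and Uvicorn combination mentioned above, here is a minimal sketch of a Gunicorn configuration file. The file name, port, worker count, and timeout are assumptions chosen for illustration, not values from the interview. Depending on your Uvicorn version, the worker class may instead be provided by the separate `uvicorn-worker` package, so check the documentation for the version you install.

```python
# gunicorn.conf.py (hypothetical file name and values; tune for your service)
import multiprocessing

# Address and port Gunicorn listens on.
bind = "0.0.0.0:8000"

# Gunicorn manages the worker processes; each worker runs Uvicorn's ASGI server.
worker_class = "uvicorn.workers.UvicornWorker"

# A common starting heuristic, not a rule: a couple of workers per CPU core.
workers = multiprocessing.cpu_count() * 2 + 1

# Seconds before an unresponsive worker is restarted.
timeout = 60
```

Assuming the FastAPI instance is called `app` inside a hypothetical `main.py`, the service could then be started with `gunicorn -c gunicorn.conf.py main:app`. Keeping these settings in one small file fits the preference for simplicity described above: there is a single place to adjust when traffic grows.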

Currently, I use MLflow for experiment tracking and Hydra for configuration management. I’m considering exploring Guild AI and BentoML for model management. Are there any other similar tools for machine learning or natural language processing that you recommend? —D.O., Registro, Brazil

I primarily rely on custom visualizations and pandas’ style method for quick comparisons.

I opt for MLflow when a shared repository for experiment results is needed within a data science team. Even then, I gravitate toward familiar report formats (with a slight preference for plotly over matplotlib for interactivity). Exporting reports as HTML allows for immediate consumption with full format control.

I’m interested in trying Weights & Biases, particularly for deep learning, as monitoring tensors is significantly more complex than tracking metrics. I’ll be happy to share my findings once I do.

Leveling Up: Advanced NLP Topics

Regarding real-world applications, can you break down your typical workflow for data cleaning and model building? —V.D., Georgia, United States

Data cleaning and feature engineering consume approximately 80% of my time. High-quality data is crucial for any successful machine learning solution. I aim to streamline model building, especially since a business’s target performance might not necessitate complex techniques.

Real-world applications are my main focus—I thrive on seeing my creations solve tangible problems!

Imagine being tasked with a machine learning model that consistently underperforms, regardless of training effort. How would you conduct a feasibility analysis to demonstrate the need for alternative approaches? —R.M., Dubai, United Arab Emirates

A Lean approach can help assess the optimal solution’s potential. This involves minimal data preprocessing, using straightforward models, and adhering to best practices like separating training, validation, and test datasets and employing cross-validation when feasible.
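The splitting and cross-validation practices mentioned above can be sketched with the standard library alone. This is a minimal illustration, assuming a simple list of examples; the function names, split fractions, and fold count are hypothetical choices, and in practice a library such as scikit-learn provides equivalent utilities.

```python
import random


def train_val_test_split(items, val_fraction=0.15, test_fraction=0.15, seed=0):
    """Shuffle once, then cut into three disjoint parts."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    n_val = int(len(shuffled) * val_fraction)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test


def k_fold(items, k=5):
    """Yield (train, held_out) pairs; each item is held out exactly once."""
    folds = [items[i::k] for i in range(k)]
    for i, held_out in enumerate(folds):
        train = [x for j, fold in enumerate(folds) if j != i for x in fold]
        yield train, held_out


examples = list(range(100))  # stand-in for real labeled examples
train, val, test = train_val_test_split(examples)
print(len(train), len(val), len(test))

# Cross-validate on the training portion only; the test set stays untouched
# until the final evaluation.
for fold_train, fold_val in k_fold(train, k=5):
    print(len(fold_train), len(fold_val))
```

If a straightforward model evaluated this way still falls far short of the target, that is evidence for the feasibility discussion, because the shortfall cannot be blamed on a leaky or unrepresentative evaluation setup.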

Is it possible to create smaller models that rival the performance of larger ones while consuming fewer resources, perhaps through pruning? —R.K., Korneuburg, Austria

Absolutely! DeepMind’s [Chinchilla model](https://www.deepmind.com/publications/an-empirical-analysis-of-compute-optimal-large-language-model-training) exemplifies this, outperforming GPT-3 and its counterparts while having far fewer parameters, which substantially reduces the compute needed for fine-tuning and inference.
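To make the Chinchilla comparison concrete, the sketch below applies the widely used rule of thumb that training cost is roughly 6 × parameters × tokens, using publicly reported model sizes. The approximation and the helper name are assumptions for illustration; the numbers it prints are order-of-magnitude estimates, not measured costs.

```python
def training_flops(parameters, tokens):
    """Rough training cost using the common C ~= 6 * N * D approximation."""
    return 6 * parameters * tokens


# Publicly reported sizes: (parameters, training tokens).
models = {
    "GPT-3": (175e9, 300e9),
    "Gopher": (280e9, 300e9),
    "Chinchilla": (70e9, 1.4e12),
}

for name, (n_params, n_tokens) in models.items():
    flops = training_flops(n_params, n_tokens)
    print(f"{name}: {n_params / 1e9:.0f}B params, "
          f"{n_tokens / n_params:.1f} tokens per parameter, "
          f"~{flops:.2e} training FLOPs")
```

Under this estimate, Chinchilla's training budget is in the same range as the larger models, but it spends that budget on more data rather than more parameters. The savings show up afterward: a model with fewer parameters is cheaper to serve and fine-tune.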

AI Product and Business Perspectives

Can you provide more details about your approach to machine learning product development? —R.K., Korneuburg, Austria

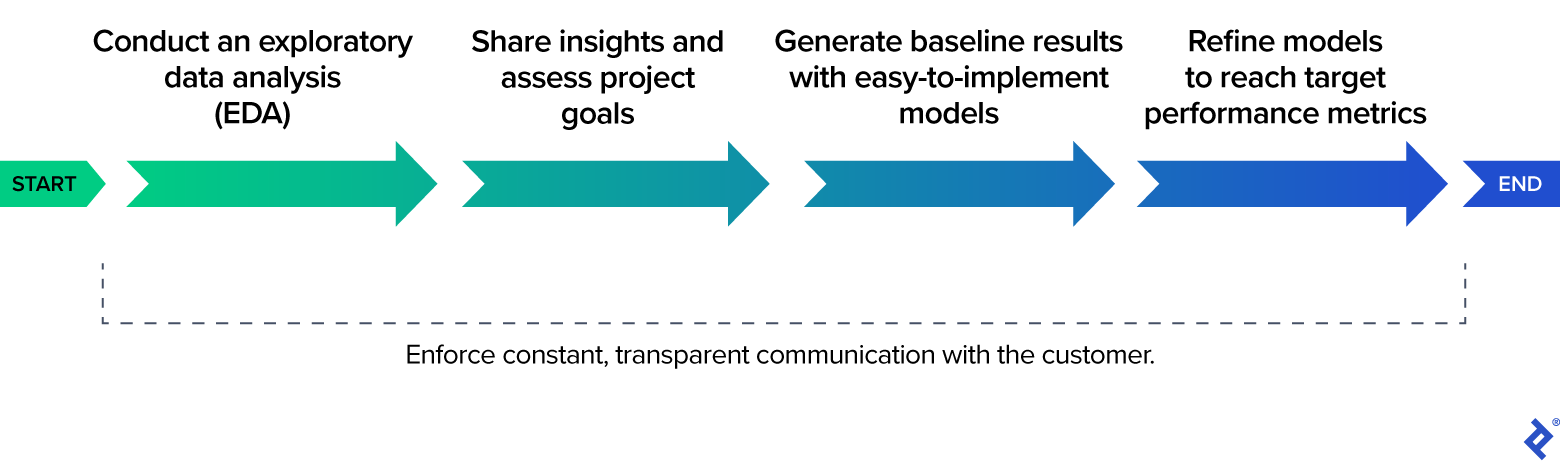

My process typically begins with a thorough exploratory data analysis, delving deep until I fully grasp the data’s nuances. In supervised machine learning, data is the foundation of value.

Armed with this understanding, usually after several iterations, I collaborate closely with the customer to understand their objectives and gain a deeper appreciation for the project’s context and use cases.

Next, I establish quick and practical baseline results using readily available models. This helps gauge the difficulty of achieving the desired performance metrics.

From there, I prioritize data as the cornerstone. Investing extra effort in preprocessing and feature engineering pays dividends, and maintaining open communication with the customer throughout ensures a shared understanding.
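The baseline step described above can start even simpler than an off-the-shelf model. The sketch below, which is an illustration rather than the interviewee's own method, measures a majority-class baseline against a target metric. The data, labels, and target figure are all hypothetical.

```python
from collections import Counter


def majority_baseline(train_labels):
    """Return a 'model' that always predicts the most frequent training label."""
    most_common_label, _ = Counter(train_labels).most_common(1)[0]
    return lambda _example: most_common_label


def accuracy(predict, examples, labels):
    """Fraction of examples for which the prediction matches the label."""
    correct = sum(predict(x) == y for x, y in zip(examples, labels))
    return correct / len(labels)


# Hypothetical toy data: support tickets labeled by urgency.
train_labels = ["low", "low", "high", "low", "low", "high", "low"]
val_texts = ["printer jam", "server down", "password reset", "typo on page"]
val_labels = ["low", "high", "low", "low"]

target_accuracy = 0.90  # hypothetical figure agreed with the customer
baseline = majority_baseline(train_labels)
baseline_accuracy = accuracy(baseline, val_texts, val_labels)

print(f"baseline: {baseline_accuracy:.2f}, target: {target_accuracy:.2f}")
```

The gap between the baseline and the target gives an early sense of how hard the problem is. Any readily available model tried next should beat this number comfortably; if it does not, the data deserves another look before more modeling effort is spent.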

What are the current limitations of AI and ML applications in product development? —R.K., Korneuburg, Austria

Two major hurdles currently limit AI and ML.

The first is artificial general intelligence (AGI), which is gaining increasing attention, as seen with DeepMind’s Gato. However, achieving AI that possesses a generalized skill set across multiple tasks, including untrained ones, remains a distant goal.

The second challenge is reinforcement learning. Overcoming the reliance on large datasets and supervised learning is crucial for addressing future obstacles. Gathering enough data to teach a model every task a human performs is likely unattainable in the foreseeable future. Even if achieved, it might not guarantee human-level performance in a changing world.

I don’t anticipate the AI community overcoming these challenges anytime soon, if ever. Should we succeed, I believe computational efficiency will become paramount—but humans might not be the ones exploring those frontiers!

When and how should you integrate machine learning operations (MLOps) technologies into a product? Any advice on persuading clients or managers of its necessity? —N.R., Lisbon, Portugal

MLOps offers numerous benefits for various products and business objectives. Examples include serverless architectures that bill based on usage, ML APIs designed for common business needs, and free tools like MLflow for tracking experiments during development and monitoring application performance later on. MLOps is particularly advantageous for large-scale applications, where it reduces technical debt and boosts development efficiency.

However, it’s vital to assess the suitability of your chosen solution. For instance, if you have available server capacity, can guarantee your service-level agreement (SLA) requirements, and have predictable traffic, a managed MLOps service might be unnecessary.

One common pitfall is assuming that managed services will automatically address all project requirements, such as model performance, SLAs, and scalability. Building an optical character recognition (OCR) API, for example, requires rigorous testing to identify and understand failure points, which can help evaluate potential obstacles to achieving desired outcomes.

Ultimately, it depends on your specific goals. But if your project aligns with MLOps principles, it generally provides better cost-effectiveness and risk management than a custom-built solution.

How effectively are organizations defining their business needs to ensure that data science tools generate models that support informed decision-making? —A.E., Los Angeles, United States

That’s a crucial question. Data science tools introduce an additional layer of ambiguity compared to traditional software engineering: your product not only handles uncertainty but often relies on it.

Therefore, consistent customer involvement is paramount. Any effort invested in clearly communicating your work is worthwhile. Clients possess the most in-depth understanding of project requirements and ultimately approve the final deliverables.

Looking Ahead: The Future of NLP and Ethical Considerations

What are your thoughts on the increasing energy consumption associated with the massive convolutional neural networks (CNNs) that companies like Meta are now building regularly? —R.K., Korneuburg, Austria

That’s a valid and important concern. Some argue that these large models (e.g., Meta’s LLaMA) are frivolous and wasteful. However, having witnessed their potential and considering they’re often made publicly available for free, I believe the resources invested in training them will yield long-term benefits.

What’s your perspective on claims of AI models achieving sentience? Based on your experience, do you believe they’re approaching consciousness? —V.D., Georgia, United States

Defining and evaluating something like AI sentience is a deeply philosophical matter. I’m not fond of the sensationalism surrounding such discussions and the negative publicity it brings to the NLP field. In general, most artificial intelligence endeavors aim to be precisely that—artificial.

Should we be concerned about the ethical implications of AI and ML? —O.L., Ivoti, Brazil

Absolutely, especially with recent advances in AI systems like ChatGPT! However, a solid understanding of the subject matter is crucial for a productive conversation, and I fear some key stakeholders (like governments) still need time to reach that level of understanding.

One pressing ethical consideration is minimizing and eliminating bias (such as racial or gender bias). This requires a concerted effort from technologists, companies, and even clients. Ensuring fairness and equitable treatment for all individuals, regardless of the cost, is paramount.

Overall, I see ML as a driving force that could potentially propel humanity toward its next Industrial Revolution. While the previous Industrial Revolution rendered some jobs obsolete, it also paved the way for new, less laborious, and more creative roles. I’m optimistic that we’ll adapt to ML and AI in a similar fashion!

The Toptal Engineering Blog editorial team expresses its appreciation to Rishab Pal for reviewing the technical accuracy of this article.