The increasing prevalence of deep neural networks across diverse industries is undeniable. Their efficacy in addressing various challenges through supervised learning is well-documented. However, achieving optimal performance hinges on the availability of substantial, high-quality training data that accurately reflects the production setting.

Although vast amounts of data exist online, a large portion remains unprocessed and unsuitable for machine learning (ML) applications. Consider the development of a traffic light detector for autonomous driving. Training images must incorporate traffic lights and precise bounding boxes to delineate their boundaries. Transforming raw data into this structured, labeled, and usable format is both time-intensive and complex.

To streamline this process, I created Cortex: The Biggest AI Dataset, a novel SaaS product centered around image data labeling and computer vision. Its capabilities extend beyond these areas to encompass diverse data types and artificial intelligence (AI) subfields. Cortex offers numerous use cases across multiple domains and image categories:

- Enhanced model performance through fine-tuning with custom data sets: Pretraining a model on a large, diverse data set like Cortex can substantially elevate its performance when fine-tuned on a smaller, specialized data set. Take, for instance, a cat breed identification app. Pretraining a model on a comprehensive collection of cat images enables it to swiftly discern features across different breeds. This, in turn, enhances the app’s accuracy in classifying breeds when fine-tuned on a targeted data set.

- Training a model for general object detection: The data set’s labeled images of various objects allow for training models to detect and classify specific objects within images. A prevalent example is car identification, which proves valuable in applications like automated parking, traffic control, law enforcement, and security. This general object detection methodology can be broadened to encompass other MS COCO classes (currently, the data set handles only MS COCO classes).

- Training a model for object embedding extraction: Object embeddings represent objects in a high-dimensional space. Training a model using Cortex enables it to generate object embeddings from images. These embeddings have applications in areas such as similarity search or clustering.

- Semantic metadata generation for images: Cortex can produce semantic metadata, such as object labels, for images. This empowers application users with richer insights and interactivity (e.g., clicking objects in an image for further details or viewing related images on news platforms). This feature proves particularly beneficial in interactive learning environments where users can delve deeper into objects (like animals, vehicles, or household items).

Our exploration of Cortex will concentrate on the last use case: extracting semantic metadata from website images and overlaying them with clickable bounding boxes. Clicking on a bounding box triggers a Google search for the MS COCO object class identified within it.
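As a rough illustration of the click behavior described above, the search link for a detected class could be built as follows. This is a minimal sketch: the function name `google_search_url` is hypothetical, and the article does not say how Cortex or the front end actually constructs the link.

```python
from urllib.parse import urlencode


def google_search_url(coco_class: str) -> str:
    """Build a Google search URL for an MS COCO class name (illustrative helper)."""
    # urlencode escapes spaces and special characters in the class name.
    return "https://www.google.com/search?" + urlencode({"q": coco_class})


print(google_search_url("traffic light"))
```

A front end would attach this URL to each clickable bounding box, using the class name returned for that box.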

The Crucial Role of High-quality Data in Modern AI

Recent years have witnessed remarkable advancements in various AI subfields, including computer vision, natural language processing (NLP), and tabular data analysis. These breakthroughs share a common thread: a reliance on high-quality data. Since AI systems are limited by the data they learn from, data-centric AI has emerged as a critical research area. Techniques such as transfer learning and synthetic data generation have been developed to address data scarcity, while data labeling and cleaning remain vital for ensuring data quality.

Labeled data is paramount in developing modern AI models, including fine-tuned LLMs and computer vision models. While obtaining basic labels for pretraining language models (e.g., predicting the next word in a sentence) is straightforward, collecting labeled data for conversational AI models like ChatGPT is more intricate. These labels must exemplify the model’s desired behavior to create the illusion of meaningful conversation. Image labeling presents even greater challenges. Training models like DALL-E 2 and Stable Diffusion to generate images from user prompts necessitates vast data sets comprising labeled images and textual descriptions.

Using subpar data for systems like ChatGPT would result in poor conversational abilities. Similarly, low-quality image object bounding box data would lead to inaccurate predictions, such as misclassifications, missed objects, and other errors. Noise and blurriness in image data further exacerbate these issues. Cortex seeks to provide developers with readily available, high-quality data for creating and training image models, making the process faster, more efficient, and predictable.

A Glimpse into Large Data Set Processing

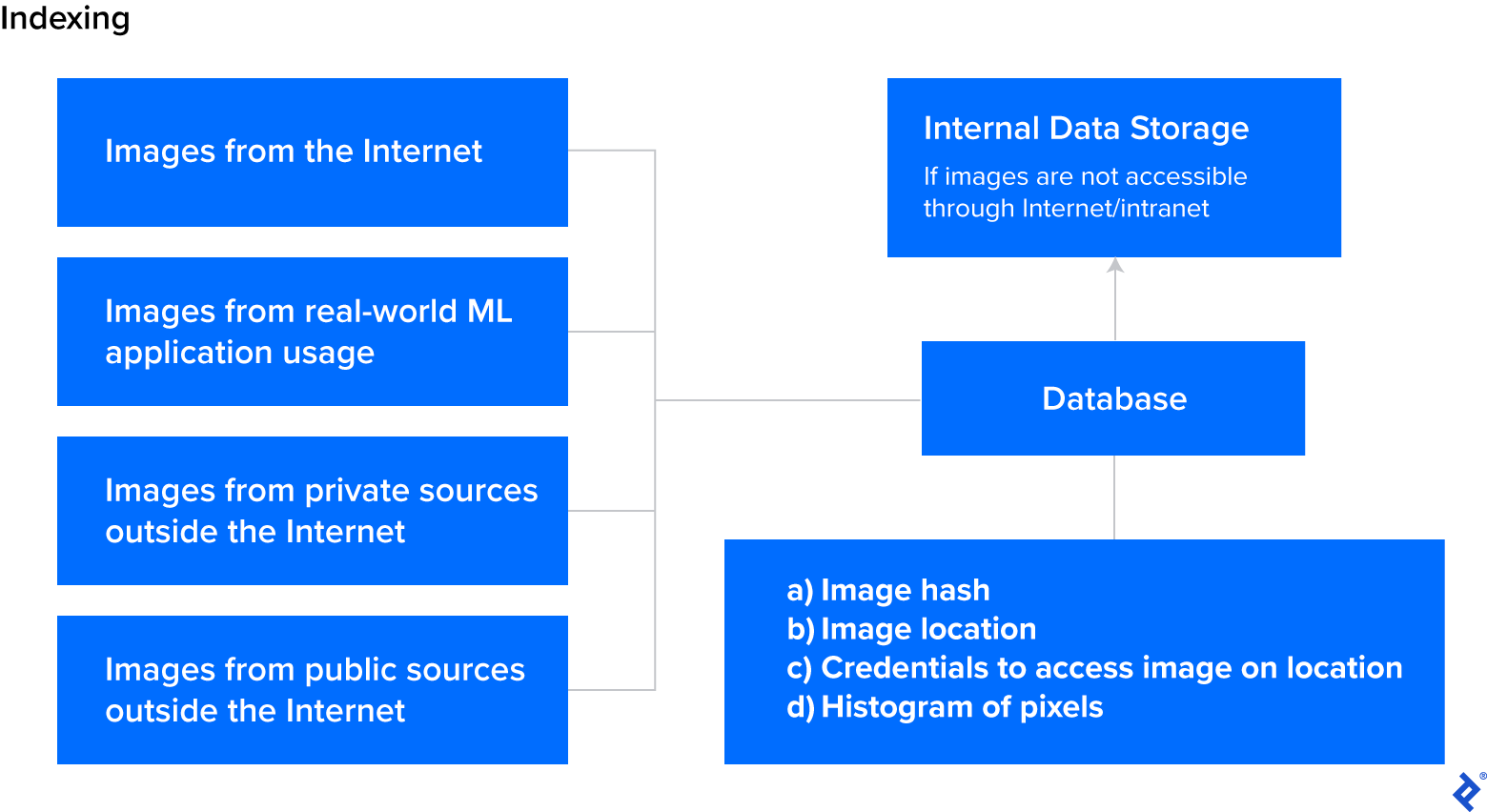

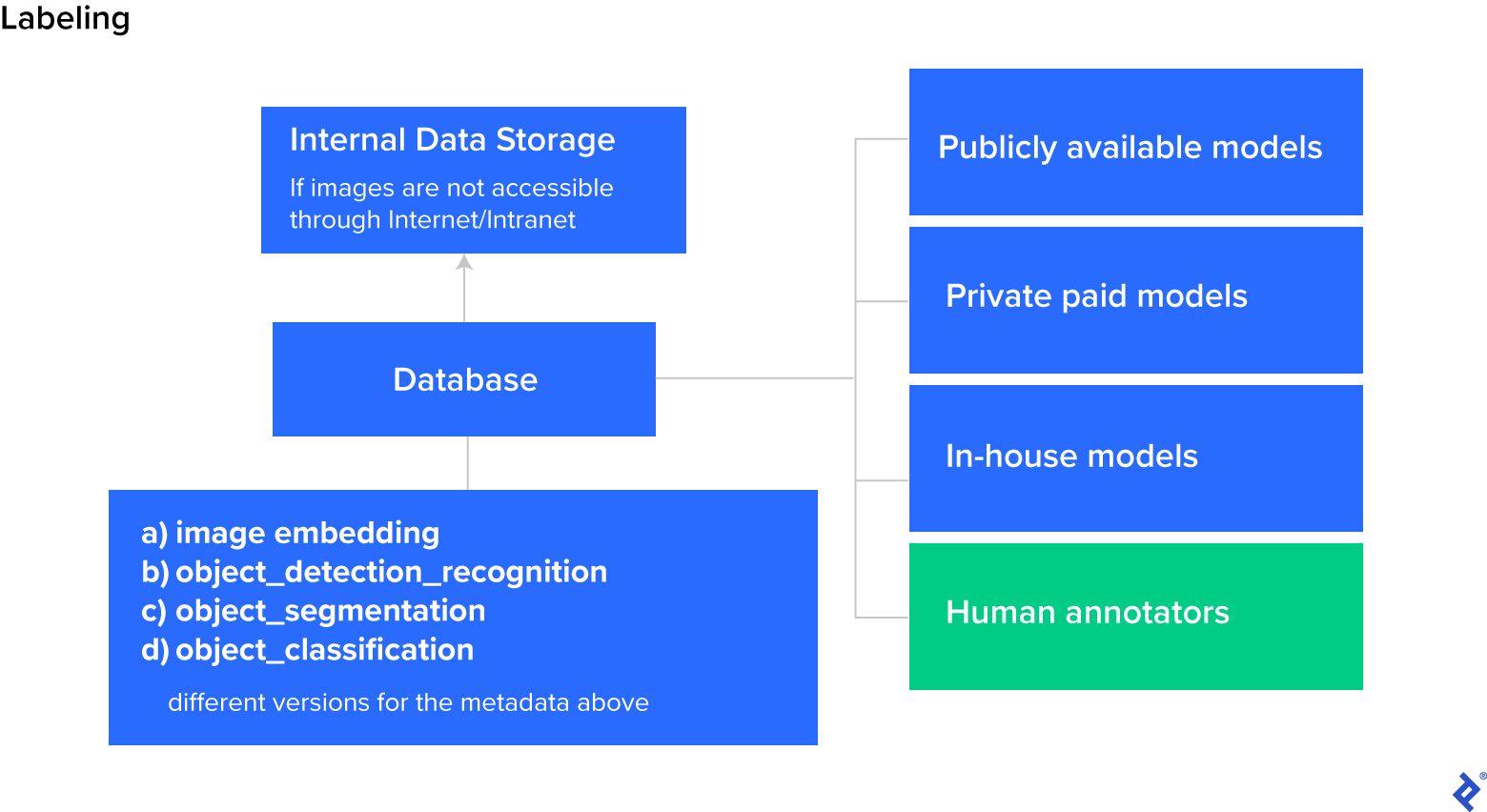

Building a comprehensive AI data set is a multi-phased process. It typically begins with data collection, where images are scraped from the Internet, and their URLs and structural attributes (e.g., image hash, dimensions, and histogram) are stored. Subsequently, models automatically label these images with semantic metadata (e.g., image embeddings, object detection labels). Finally, quality assurance (QA) measures, encompassing rule-based and ML-based approaches, verify the accuracy of the assigned labels.
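To make the collection step more concrete, here is a minimal sketch of how structural attributes might be computed for one scraped image. It assumes the image has already been decoded into rows of grayscale values, and it uses SHA-256 for the hash. Both are assumptions for illustration, as the article does not specify which hash function or histogram format Cortex uses.

```python
import hashlib
from collections import Counter


def structural_attributes(url, image_bytes, pixels, bins=4):
    """Collect a URL, hash, dimensions, and coarse histogram for one image.

    `pixels` is a list of rows of 0-255 grayscale integers (assumed to be
    decoded elsewhere); `image_bytes` is the raw downloaded file content.
    """
    height = len(pixels)
    width = len(pixels[0]) if pixels else 0
    bin_size = 256 // bins
    # Count how many pixels fall into each intensity bin.
    counts = Counter(
        min(value // bin_size, bins - 1) for row in pixels for value in row
    )
    return {
        "url": url,
        "hash": hashlib.sha256(image_bytes).hexdigest(),  # assumed hash choice
        "height": height,
        "width": width,
        "histogram": [counts.get(i, 0) for i in range(bins)],
    }


record = structural_attributes(
    "https://example.com/image.png",  # placeholder URL
    b"raw image bytes",
    [[0, 64, 255], [128, 130, 10]],
)
print(record["height"], record["width"], record["histogram"])
```

Storing the hash alongside the URL makes it possible to spot duplicate images that were scraped from different locations.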

Data Collection

Several methods exist for acquiring data for AI systems, each with its own strengths and weaknesses:

Labeled data sets: Developed by researchers to address specific problems, these data sets, such as MNIST and ImageNet, come pre-labeled for model training. Platforms like Kaggle facilitate the sharing and discovery of such data sets, although they are primarily intended for research rather than commercial use.

Private data: Proprietary to organizations, this data type is often rich in domain-specific information. However, it often requires additional cleaning, data labeling, and potential consolidation from different subsystems.

Public data: Freely available online, this data can be collected using web crawlers. However, this approach can be time-consuming, especially when data resides on high-latency servers.

Crowdsourced data: Involving human workers to gather real-world data, this method can lead to inconsistencies in data quality and format due to variations in individual worker output.

Synthetic data: Generated by applying controlled modifications to existing data, synthetic data relies on techniques such as generative adversarial networks (GANs) or basic image augmentations. It proves particularly useful when substantial data is already available.
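As an example of the basic image augmentations mentioned above, the sketch below mirrors an image horizontally and adjusts its bounding boxes to match. It assumes an image is a list of pixel rows and a box is an `(x_min, y_min, x_max, y_max)` tuple in pixel coordinates. These representations are chosen for illustration only.

```python
def flip_horizontal(pixels, boxes):
    """Mirror an image left to right and move its bounding boxes accordingly."""
    width = len(pixels[0])
    flipped_pixels = [row[::-1] for row in pixels]
    # After mirroring, the old right edge becomes the new left edge.
    flipped_boxes = [
        (width - x_max, y_min, width - x_min, y_max)
        for x_min, y_min, x_max, y_max in boxes
    ]
    return flipped_pixels, flipped_boxes


image = [[1, 2, 3, 4], [5, 6, 7, 8]]
new_image, new_boxes = flip_horizontal(image, [(0, 0, 1, 2)])
print(new_image, new_boxes)
```

The key point is that labels must be transformed together with the pixels. An augmented image with stale boxes would add label noise rather than useful training data.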

Obtaining the right data is paramount to building effective and accurate AI systems.

Data Labeling

Data labeling involves assigning labels to data samples, enabling AI systems to learn from them. Commonly used data labeling methods include:

Manual data labeling: The most straightforward method, manual data labeling involves human annotators examining and labeling each data sample individually. While time-consuming and potentially costly, this approach is often necessary for data requiring specific domain knowledge or subjective interpretation.

Rule-based labeling: An alternative to manual labeling, this method employs predefined rules or algorithms to assign labels. For instance, when labeling video frames, annotating the first and last frames and programmatically interpolating for intermediate frames can replace manual annotation of every frame.

ML-based labeling: Utilizing pre-trained machine learning models, this approach automatically generates labels for new data samples. For example, a model trained on a large, labeled image data set can automatically label new images. While requiring a substantial amount of labeled data for training, this method can be highly efficient. Recent research even suggests that ChatGPT is already outperforming crowdworkers for text annotation tasks.
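The video frame example under rule-based labeling can be sketched in a few lines. The code below linearly interpolates a bounding box between an annotated first frame and an annotated last frame. It assumes boxes are `(x_min, y_min, x_max, y_max)` tuples and that the object moves at a roughly constant rate, which will not hold for every video.

```python
def interpolate_boxes(first_box, last_box, num_frames):
    """Linearly interpolate a bounding box across `num_frames` frames.

    The first and last boxes are the manually annotated ones; every frame
    in between receives a programmatically generated box.
    """
    if num_frames < 2:
        raise ValueError("need at least the first and last frame")
    boxes = []
    for i in range(num_frames):
        t = i / (num_frames - 1)  # 0.0 at the first frame, 1.0 at the last
        boxes.append(
            tuple(a + t * (b - a) for a, b in zip(first_box, last_box))
        )
    return boxes


frames = interpolate_boxes((0, 0, 10, 10), (20, 10, 30, 20), num_frames=5)
print(frames[2])
```

With five frames, only two require human annotation. For fast or erratic motion, annotators would typically add more keyframes and interpolate between each consecutive pair.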

The optimal labeling method depends on factors like data complexity and resource availability. Selecting and implementing the appropriate method allows researchers and practitioners to create high-quality labeled data sets for training increasingly sophisticated AI models.

Quality Assurance

Quality assurance ensures that the data and labels used for training are accurate, consistent, and relevant to the task. Common QA methods mirror data labeling methods:

Manual QA: This involves manually inspecting data and labels for accuracy and relevance.

Rule-based QA: This method uses predefined rules to check data and labels for accuracy and consistency.

ML-based QA: This approach leverages machine learning algorithms to automatically detect errors or inconsistencies in data and labels.
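A rule-based QA pass can be as simple as a handful of sanity checks on each label. The sketch below is one possible set of rules, not the checks Cortex actually runs: the label layout (`class`, `confidence`, `box`) and the small class list are assumptions made for illustration.

```python
# A small subset of MS COCO class names, used here only as an example.
ALLOWED_CLASSES = {"person", "car", "traffic light", "cat"}


def check_label(label, image_width, image_height):
    """Return a list of rule violations for one object detection label."""
    problems = []
    x_min, y_min, x_max, y_max = label["box"]
    if label["class"] not in ALLOWED_CLASSES:
        problems.append("unknown class")
    # The box must have positive area and lie entirely inside the image.
    if not (0 <= x_min < x_max <= image_width
            and 0 <= y_min < y_max <= image_height):
        problems.append("box outside image or empty")
    if not 0.0 <= label["confidence"] <= 1.0:
        problems.append("confidence out of range")
    return problems


good = {"class": "cat", "confidence": 0.9, "box": (10, 10, 50, 60)}
bad = {"class": "dragon", "confidence": 1.4, "box": (10, 10, 700, 60)}
print(check_label(good, 640, 480))
print(check_label(bad, 640, 480))
```

Labels that fail any rule can be discarded automatically or routed to manual QA, which keeps human effort focused on the ambiguous cases.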

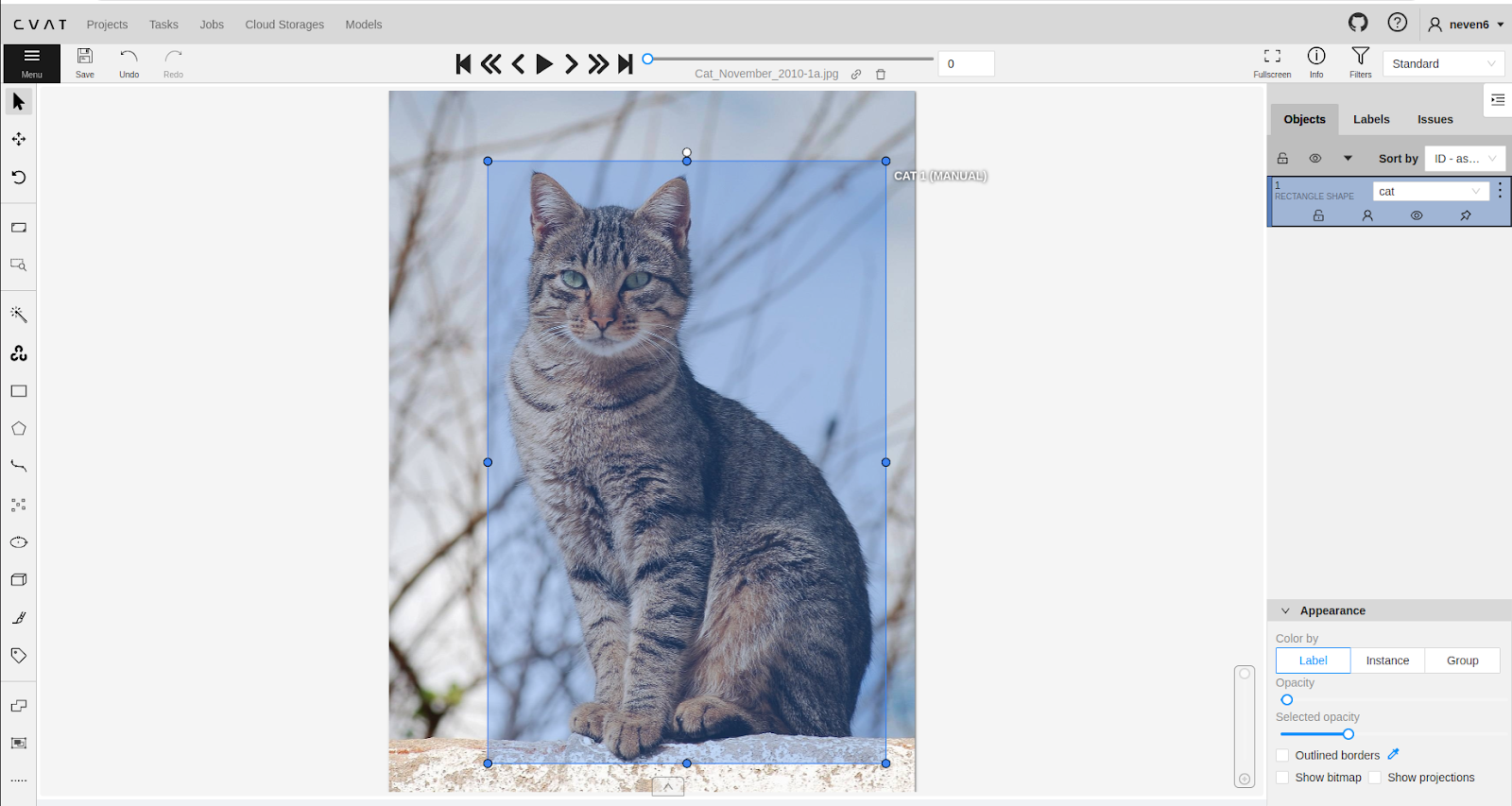

FiftyOne, an open-source toolkit for building high-quality data sets and computer vision models, is one such ML-based QA tool. For manual QA, tools like CVAT can enhance the efficiency of human annotators. Human annotation, being the most expensive and least desirable option, should only be employed when automatic annotation fails to yield high-quality labels.

When validating data processing efforts, the level of labeling detail should align with the task’s requirements. While some applications demand pixel-level precision, others may be more forgiving.
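One common way to express how forgiving a task is about box placement is an intersection-over-union (IoU) threshold between a predicted box and a reference box. The sketch below is a generic illustration of that idea. The article does not state that Cortex uses IoU, and the default threshold of 0.5 is an arbitrary example value.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union else 0.0


def label_is_acceptable(predicted, reference, threshold=0.5):
    """A stricter task would raise the threshold; a forgiving one lowers it."""
    return iou(predicted, reference) >= threshold


print(label_is_acceptable((0, 0, 10, 10), (1, 1, 11, 11)))
```

An application that only needs to know roughly where an object sits can tolerate a low threshold, while one that crops or measures objects would need a much higher one.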

QA is a critical step in ensuring the efficacy and reliability of high-quality neural network models. Whether manual, rule-based, or ML-based, diligence and thoroughness in QA are essential for achieving the best outcomes.

Cortex Walkthrough: From URL to Labeled Image

Cortex employs a combination of manual and automated processes for data collection, labeling, and QA. The objective is to minimize manual effort by feeding human outputs into rule-based and ML algorithms.

Cortex samples consist of URLs referencing original images scraped from the Common Crawl database. Data points are labeled with object bounding boxes. Object classes adhere to the MS COCO classification system, including categories like “person,” “car,” and “traffic light.” Users can download their desired images from the provided URLs using img2dataset. Within Cortex, labels are referred to as semantic metadata, as they impart meaning to the data and expose useful knowledge embedded within each data sample (e.g., image dimensions).

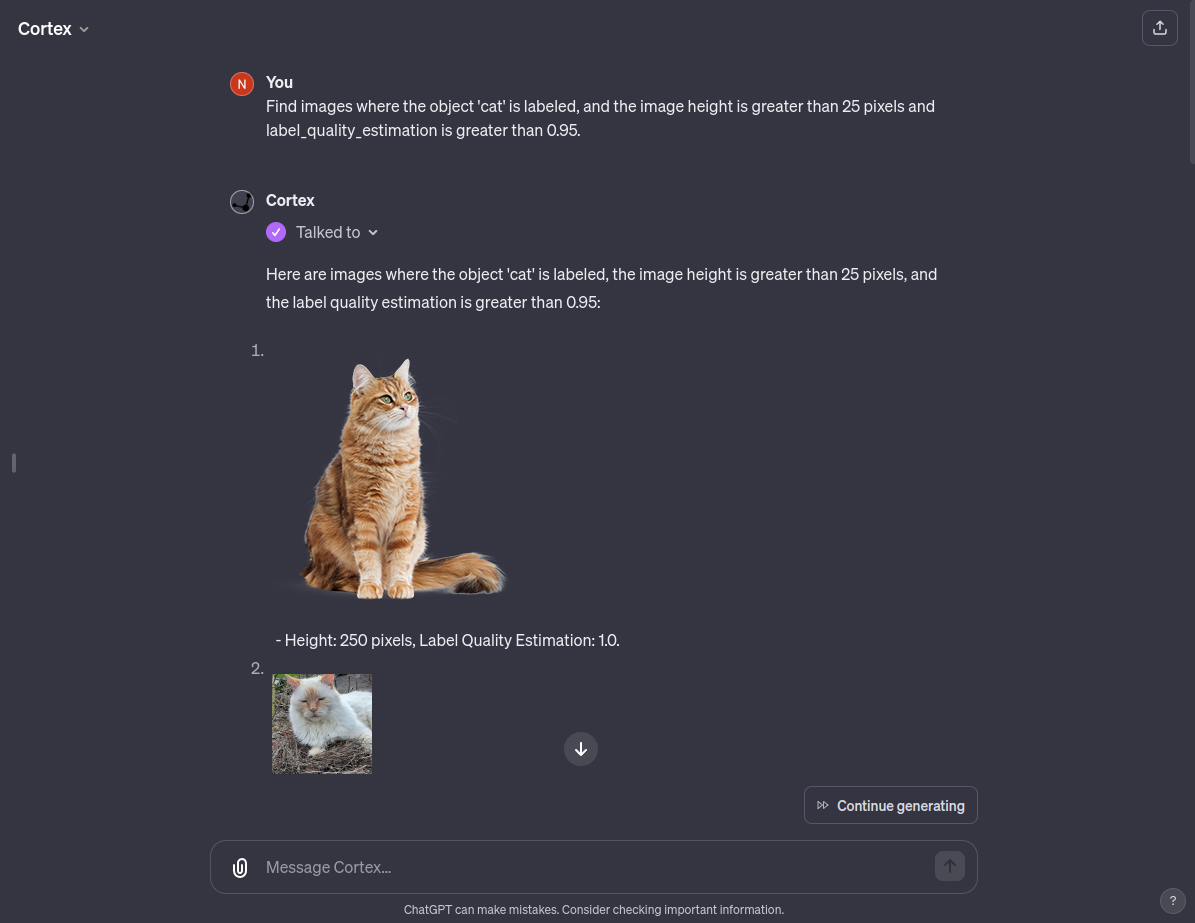

The Cortex data set also incorporates a filtering feature, enabling users to search the database for specific images. An interactive image labeling feature allows users to submit links to unindexed images. The system dynamically annotates these images and presents their semantic metadata and structural attributes.

Code Examples and Implementation

Cortex lives on RapidAPI and offers free semantic metadata and structural attribute extraction for any URL. The paid version grants users access to batches of scraped, labeled data from the Internet, utilizing filters for bulk image labeling.

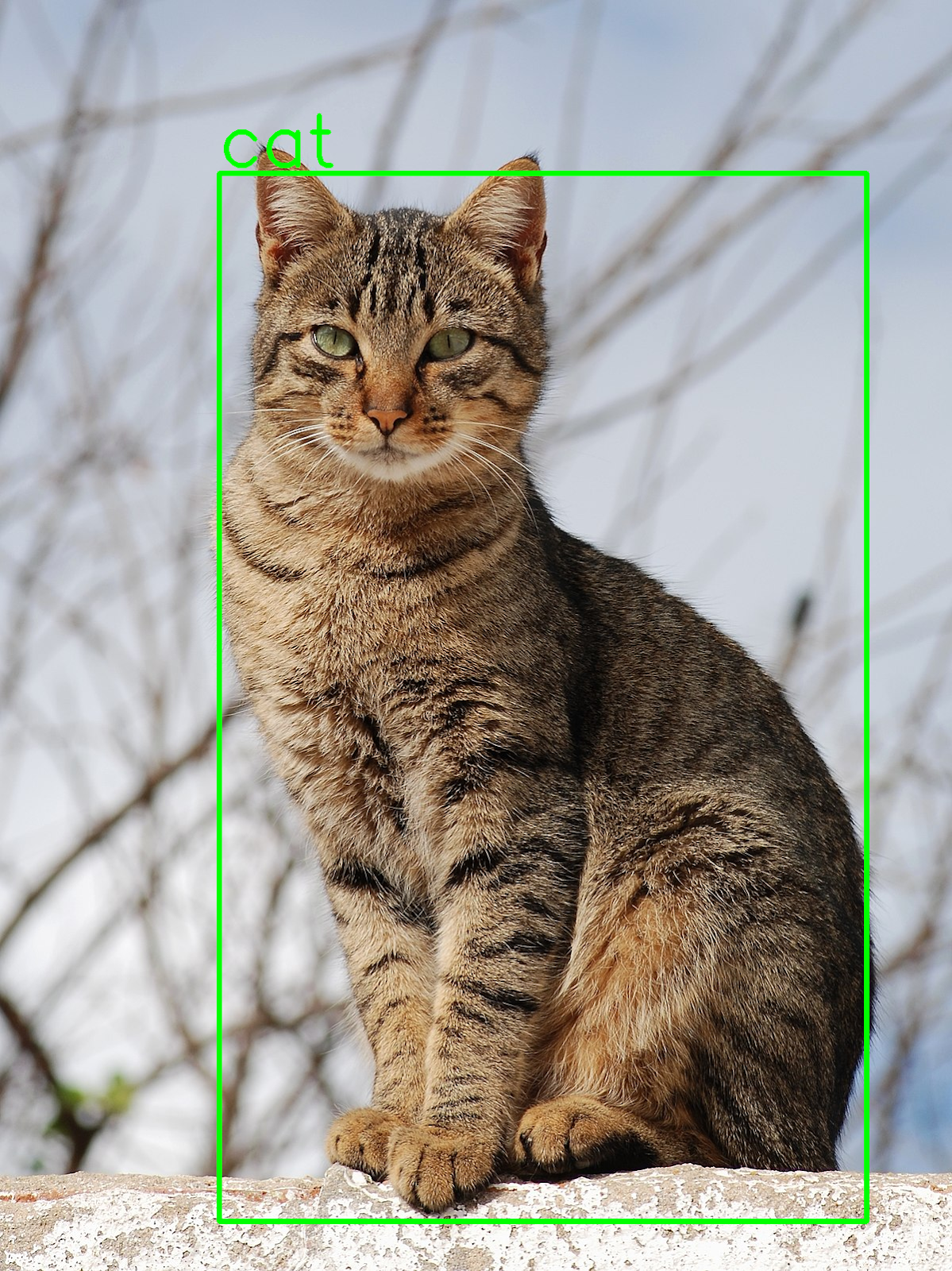

This section’s Python code example demonstrates using Cortex to retrieve semantic metadata and structural attributes for a given URL and generate bounding boxes for object detection. As the system evolves, its functionality will expand to include additional attributes like histograms, pose estimation data, and more. Each new attribute enriches the processed data, broadening its applicability to diverse use cases.


The response structure resembles the following:


Let’s break down each piece of information and its potential uses:

- `_id`: This self-explanatory identifier is used internally for data indexing.
- `url`: This field contains the image URL, revealing its origin and enabling filtering based on source.
- `datetime`: Displaying the date and time of the image’s initial processing, this data proves crucial for time-sensitive applications, such as those processing real-time data streams.
- `object_analysis_processed`, `pose_estimation_processed`, and `face_analysis_processed`: These flags indicate whether labels for object analysis, pose estimation, and face analysis have been generated.
- `type`: Denoting the data type (e.g., image, audio, video), this flag will expand to encompass other data types beyond Cortex’s current image-centric focus.
- `height` and `width`: These self-explanatory structural attributes provide the sample’s dimensions.
- `hash`: This field displays the hashed key.
- `object_analysis`: This section contains object analysis label information, including crucial semantic metadata like class name and confidence level.
- `label_quality_estimation`: Ranging from 0 (poor quality) to 1 (good quality), this score reflects the label quality assessment calculated using ML-based QA.
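To show how these fields might be consumed, here is a minimal sketch that pulls trustworthy detections out of a response. The top-level field names come from the description above, but the sample values, the inner layout of `object_analysis` (a list of entries with `class`, `confidence`, and `box` keys), and the placement of `label_quality_estimation` at the top level are assumptions for illustration. The real response may be shaped differently.

```python
# Hypothetical response; values and nested layout are illustrative only.
sample_response = {
    "_id": "example-id",
    "url": "https://example.com/cat.jpg",
    "type": "image",
    "width": 640,
    "height": 480,
    "object_analysis_processed": True,
    "object_analysis": [
        {"class": "cat", "confidence": 0.92, "box": [10, 20, 200, 300]},
        {"class": "person", "confidence": 0.30, "box": [300, 50, 400, 400]},
    ],
    "label_quality_estimation": 0.87,
}


def confident_objects(response, min_confidence=0.5, min_quality=0.5):
    """Return (class, box) pairs worth drawing as bounding boxes."""
    if not response.get("object_analysis_processed"):
        return []  # labels have not been generated yet
    if response.get("label_quality_estimation", 0.0) < min_quality:
        return []  # ML-based QA considers these labels unreliable
    return [
        (obj["class"], obj["box"])
        for obj in response.get("object_analysis", [])
        if obj["confidence"] >= min_confidence
    ]


print(confident_objects(sample_response))
```

Filtering on both the per-object confidence and the overall quality score keeps low-confidence or poorly rated labels out of any visualization or training set built from the response.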

The visualization.png image generated by the Python code snippet appears as follows:

The next code snippet illustrates the use of Cortex’s paid version to filter and retrieve URLs of scraped images:


This endpoint accepts a MongoDB Query Language query (`q`) that filters the database by semantic metadata, and it returns the page of results specified by the `page` body parameter.

The example query returns images tagged with the “cat” object analysis semantic metadata and having a width exceeding 100 pixels. The response content is structured as follows:


Each page of the output contains up to 25 data points, including semantic metadata, structural attributes, and source information (e.g., “commoncrawl”). The total number of query results is indicated by the length key.
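The filter and pagination described above could be prepared along these lines. The `q` and `page` parameter names, the 25-item page size, and the `length` key come from the text. The field path `object_analysis.class`, the choice to send the query as a JSON string, and the helper names are assumptions, and the sketch deliberately stops short of making the HTTP call.

```python
import json
import math

PAGE_SIZE = 25  # each page holds up to 25 data points

# MongoDB-style filter: images with a "cat" label and width above 100 pixels.
# The "object_analysis.class" field path is an assumed layout.
query = {"object_analysis.class": "cat", "width": {"$gt": 100}}


def build_body(query, page):
    """Assemble the request body with the `q` and `page` parameters."""
    return {"q": json.dumps(query), "page": page}


def total_pages(length):
    """Number of pages needed to read `length` results."""
    return math.ceil(length / PAGE_SIZE)


body = build_body(query, page=1)
print(body["q"])
print(total_pages(60))
```

Reading the `length` key from the first response tells a client how many further pages to request before it has retrieved every matching data point.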

Foundation Models and ChatGPT Integration

Foundation models, or AI models pretrained on vast amounts of unlabeled data using self-supervised learning, have revolutionized AI since their emergence in 2018. These models can be further fine-tuned for specific tasks (e.g., mimicking a particular writing style) using smaller, labeled data sets, making them adaptable to a wide range of applications.

Cortex’s labeled data sets can serve as a reliable data source to enhance the starting point provided by pretrained models, surpassing even foundation models that still rely on labels for self-supervised pretraining. By harnessing the power of Cortex’s extensive labeled data, AI models can achieve higher pretraining effectiveness and, consequently, greater accuracy when fine-tuned. Cortex differentiates itself through its scale and diversity, with the data set continuously expanding and incorporating new data points and diverse labels. At the time of publication, it housed over 20 million data points.

Furthermore, Cortex offers a customized ChatGPT chatbot, granting users unparalleled access to and utilization of its meticulously labeled data repository. This user-friendly feature augments ChatGPT’s capabilities by providing deep access to both semantic and structural metadata for images, with plans to extend this functionality beyond images in the future.

In its current state, Cortex allows users to task the customized ChatGPT chatbot with retrieving images containing specific items occupying most of the image space or images featuring multiple items. This customized ChatGPT comprehends deep semantics and can search for particular image types based on simple prompts. Future refinements incorporating diverse object classes will transform this custom GPT into a powerful image search chatbot.

Image Data Labeling: The Backbone of AI Systems

We are inundated with data, yet most unprocessed raw data holds little value for training purposes and requires refinement for building successful AI systems. Cortex addresses this challenge by facilitating the transformation of vast raw data quantities into valuable data sets. By enabling the rapid refinement of raw data, Cortex reduces reliance on external data sources and services, accelerates training, and empowers the creation of more accurate and tailored AI models.

While the system currently provides semantic metadata for object analysis with quality estimations, it will eventually encompass face analysis, pose estimation, and visual embeddings. Plans are also underway to incorporate modalities beyond images, including video, audio, and text data. While currently returning width and height structural attributes, the system will expand to include pixel histograms as well.

As AI systems become increasingly ubiquitous, the demand for high-quality data will continue to soar, prompting an evolution in data collection and processing methods. Current AI solutions are only as good as the data they learn from. Meticulous training on large, high-quality data sets is crucial for unlocking their full potential. Ultimately, the goal is to utilize Cortex to index and annotate as much publicly available data as possible, creating an invaluable repository of high-quality labeled data for training the next generation of AI systems.

The editorial team of the Toptal Engineering Blog extends its sincere appreciation to Shanglun Wang for reviewing the code samples and technical content presented in this article.

All data set images and sample images are courtesy of Pičuljan Technologies.