I once had the opportunity to drive an Audi equipped with a powerful V8 twin-turbo engine, and the performance was truly remarkable. I vividly recall pushing the car to around 140MPH on the IL-80 highway near Chicago at 3AM, taking advantage of the empty road. That experience solidified the association between the term “V8” and exceptional performance in my mind.

While Audi’s V8 engine is undeniably potent, it’s important to remember that you’re ultimately constrained by the fuel tank’s capacity. The same principle applies to Google’s V8, the JavaScript engine powering Node.js. Despite its exceptional performance and suitability for numerous use cases, it faces limitations imposed by heap size. When your Node.js application needs to handle a larger volume of requests, you’re presented with two primary scaling options: vertical or horizontal.

Horizontal scaling involves running multiple instances of your application concurrently. When implemented effectively, this approach enables you to accommodate a higher influx of requests. Vertical scaling, on the other hand, focuses on enhancing your application’s memory utilization and performance or bolstering the resources allocated to your application instance.

In a recent project with a Toptal client, I was tasked with addressing a memory leak issue in their Node.js application. The application, an API server, was designed to manage a substantial load of hundreds of thousands of requests per minute. However, the initial implementation consumed a considerable 600MB of RAM, prompting us to refactor and reimplement the heavily utilized API endpoints. Overhead costs become a significant concern when dealing with high request volumes.

For the revamped API, we opted for a lightweight stack comprising restify, the native MongoDB driver, and Kue for handling background jobs. While this stack appeared lean, it still exhibited significant memory consumption. Under peak load, a new instance of the application could utilize up to 270MB of RAM, dashing my hopes of running two application instances per 1X Heroku Dyno.

Toolkit for Node.js Memory Leak Debugging

Memwatch

When searching for ways to identify memory leaks in Node.js, memwatch is often the first tool encountered. While the original package has been discontinued, updated and maintained versions are readily available on GitHub’s fork list for the repository. This module proves valuable by emitting leak events upon detecting heap growth over five consecutive garbage collections.
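Memwatch's exact heuristics are not reproduced here. As a rough sketch of the idea, assume you already get one heap-size sample after each garbage collection (for example, from a GC hook). The hypothetical createLeakDetector helper below then reports a suspected leak once the heap has grown on five consecutive samples.

```javascript
// Hypothetical helper (not memwatch's code): flags a suspected leak once the
// post-GC heap size has grown on `threshold` consecutive samples.
function createLeakDetector(threshold = 5) {
  let previous = null;
  let consecutiveGrowths = 0;

  return function recordPostGcHeapSize(bytes) {
    // Any sample that does not grow resets the streak.
    consecutiveGrowths = previous !== null && bytes > previous ? consecutiveGrowths + 1 : 0;
    previous = bytes;
    return consecutiveGrowths >= threshold;
  };
}

// Made-up samples: six readings, i.e. five growths in a row.
const record = createLeakDetector();
const flags = [100, 110, 120, 130, 140, 150].map((bytes) => record(bytes));
console.log(flags); // [ false, false, false, false, false, true ]
```

In a live process, each call could receive process.memoryUsage().heapUsed sampled right after a collection. Any drop in heap size resets the counter, so ordinary sawtooth GC patterns are not reported.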

Heapdump

This excellent tool empowers Node.js developers to capture heap snapshots for later analysis using Chrome Developer Tools.
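Node.js 11.13 and later include v8.writeHeapSnapshot(), which does the same job as heapdump without a native add-on. The following is a minimal sketch that assumes a writable temporary directory. The captureHeapSnapshot helper and its file-naming scheme are illustrative.

```javascript
const v8 = require('v8');
const os = require('os');
const path = require('path');

// Illustrative helper: writes a .heapsnapshot file that Chrome DevTools can
// open (Memory tab, "Load"). v8.writeHeapSnapshot() is synchronous and
// blocks the event loop while it walks the heap.
function captureHeapSnapshot(label) {
  const file = path.join(os.tmpdir(), `${label}-${process.pid}-${Date.now()}.heapsnapshot`);
  return v8.writeHeapSnapshot(file); // returns the file name it wrote
}

const snapshotFile = captureHeapSnapshot('baseline');
console.log('heap snapshot written to', snapshotFile);
```

Snapshots are large and slow to take on a big heap, so in production you would typically trigger them on demand rather than on a timer.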

Node-inspector

Serving as an even more powerful alternative to heapdump, node-inspector enables you to connect to a running application, capture heap dumps, and even perform real-time debugging and recompilation.

Putting “node-inspector” to the Test

Unfortunately, connecting to production applications hosted on platforms like Heroku, which restrict signal transmission to running processes, isn’t feasible with node-inspector. However, Heroku is just one among many hosting options.

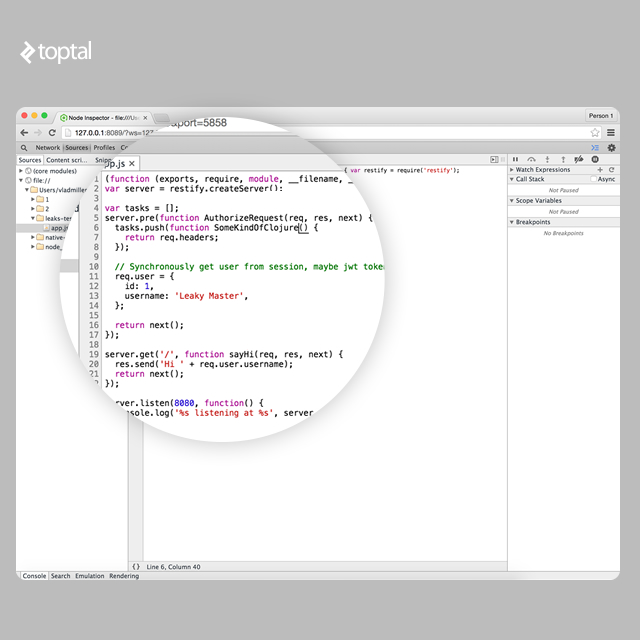

To illustrate node-inspector’s capabilities, let’s craft a simple Node.js application using restify and introduce a deliberate memory leak. Our experiments will utilize Node.js v0.12.7, compiled against V8 v3.28.71.19.

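The original restify application is not reproduced here. The minimal sketch below shows the same leak pattern: a module-level tasks array that collects closures capturing each request. It assumes that plain objects stand in for restify's req and res, and it uses a hypothetical handleHome route handler.

```javascript
// Module-level array that lives as long as the process.
const tasks = [];

// Hypothetical handler for "GET /" (plain objects stand in for restify's req/res).
function handleHome(req, res) {
  const greeting = 'Hi Leaky Master';
  // The closure captures req and res. Pushing it into `tasks` keeps both
  // objects reachable for as long as `tasks` exists, so GC cannot reclaim them.
  tasks.push(function () {
    return `${greeting} ${req.url}`;
  });
  res.body = greeting;
}

// Simulate 10,000 requests: every one of them stays in memory.
for (let i = 0; i < 10000; i++) {
  handleHome({ url: '/', headers: {} }, {});
}
console.log(tasks.length); // 10000
```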

This rudimentary application exhibits an obvious memory leak. The tasks array expands throughout the application’s lifecycle, leading to performance degradation and eventual crashes. The crux of the problem lies in leaking not only closures but entire request objects as well.

V8’s garbage collection uses a stop-the-world strategy, which means that a larger memory footprint results in longer garbage collection pauses. In the GC trace log, garbage collection initially took a negligible 20ms on average. After a few hundred thousand requests, however, it grew to around 230ms. Every request that coincides with a collection is delayed by that pause, and because collections run every few seconds, users hit these delays again and again. The pauses keep growing until the application eventually crashes.


These log entries appear when a Node.js application is launched with the --trace_gc flag.

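To reproduce lines like these, the sketch below runs a short, allocation-heavy script in a child process with --trace_gc and keeps only the lines that name a collector. The inline workload and the traceGc helper are illustrative. The exact log format differs between V8 versions, so the filter looks only for the collector names.

```javascript
const { spawnSync } = require('child_process');

// Illustrative workload: millions of short-lived objects force many scavenges.
const workload = `
  let batch = [];
  for (let i = 0; i < 2e6; i++) {
    batch.push({ i });
    if (batch.length > 1000) batch = []; // most objects die young
  }
`;

// Runs `source` in a fresh Node.js process with --trace_gc and returns the GC log lines.
function traceGc(source) {
  const { stdout } = spawnSync(process.execPath, ['--trace_gc', '-e', source], {
    encoding: 'utf8',
  });
  return stdout.split('\n').filter((line) => /Scavenge|Mark-sweep|Mark-Compact/i.test(line));
}

const gcLines = traceGc(workload);
console.log(`${gcLines.length} GC events, first few:`);
console.log(gcLines.slice(0, 3).join('\n'));
```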

Assuming our Node.js application is running with this flag, before connecting with node-inspector, we need to send the SIGUSR1 signal to the running process. If you’re using Node.js in cluster mode, ensure you connect to one of the worker processes.

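The signal can be sent with kill from a shell or from Node.js itself. The following is a minimal sketch that assumes a POSIX system. activateInspector is a hypothetical helper. Current Node.js releases respond to SIGUSR1 by starting the DevTools inspector (default port 9229) instead of the legacy 5858 debugger described here. The --inspect-port=0 flag in the usage below asks for a free port, so the sketch does not collide with anything already listening.

```javascript
const { spawn } = require('child_process');

// Hypothetical helper: ask a running Node.js process to enable debugging.
// POSIX only; Windows cannot deliver SIGUSR1 through process.kill().
function activateInspector(pid) {
  process.kill(pid, 'SIGUSR1');
}

// Usage: start a long-running child, then activate its inspector once it is up.
const child = spawn(process.execPath, [
  '--inspect-port=0',
  '-e',
  "console.log('ready'); setTimeout(() => {}, 10000);",
]);

let stderr = '';
child.stdout.once('data', () => activateInspector(child.pid));
child.stderr.on('data', (chunk) => {
  stderr += chunk;
  if (stderr.includes('Debugger listening')) {
    console.log(stderr.split('\n')[0]); // the ws:// URL a debugger connects to
    child.kill();
  }
});
```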

This action triggers debugging mode within the Node.js application, specifically in the V8 engine. Consequently, the application automatically listens for debugging connections on port 5858 using the V8 Debugging Protocol.

Next, we launch node-inspector, which establishes a connection to the running application’s debugging interface and exposes another web interface on port 8080.


If your application is running in a production environment behind a firewall, you can tunnel remote port 8080 to your local machine:


With this setup, you can now open your Chrome web browser and gain comprehensive access to Chrome Developer Tools, seamlessly integrated with your remote production application. Regrettably, Chrome Developer Tools remains unsupported in other browsers.

Embarking on a Leak Hunt!

Unlike traditional memory leaks encountered in C/C++ applications, memory leaks in V8 manifest as variables that are “forgotten” rather than truly lost. Our objective is to locate these abandoned variables and remind them of their freedom.

Chrome Developer Tools provides a range of profilers, but the one that piques our interest is Record Heap Allocations. This profiler captures multiple heap snapshots over time, offering insights into which objects contribute to the leak.

Let’s initiate heap allocation recording and simulate 50 concurrent users accessing our homepage using Apache Benchmark.

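Apache Benchmark's -c option sets the number of concurrent requests, and -n sets the total number of requests. Where ab is not available, a rough Node.js stand-in is sketched below. loadTest is a hypothetical helper that only counts completed requests and reports none of ab's latency statistics.

```javascript
const http = require('http');

// Hypothetical helper: send `total` GET requests to `url`, keeping at most
// `concurrency` in flight at once (roughly `ab -n total -c concurrency`).
function loadTest(url, { total, concurrency }) {
  return new Promise((resolve, reject) => {
    let started = 0;
    let completed = 0;

    function next() {
      if (started >= total) return;
      started += 1;
      http
        .get(url, { agent: false }, (res) => {
          res.resume(); // discard the body so the response can finish
          res.on('end', () => {
            completed += 1;
            if (completed === total) resolve(completed);
            else next(); // start another request to hold the concurrency level
          });
        })
        .on('error', reject);
    }

    for (let i = 0; i < Math.min(concurrency, total); i++) next();
  });
}
```

For instance, await loadTest('http://localhost:3000/', { total: 10000, concurrency: 50 }) would approximate 50 concurrent users. The URL and request count are placeholders.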

Prior to taking new snapshots, V8 performs a mark-sweep garbage collection cycle, ensuring that the snapshots are free from obsolete data.

On-the-Fly Leak Remediation

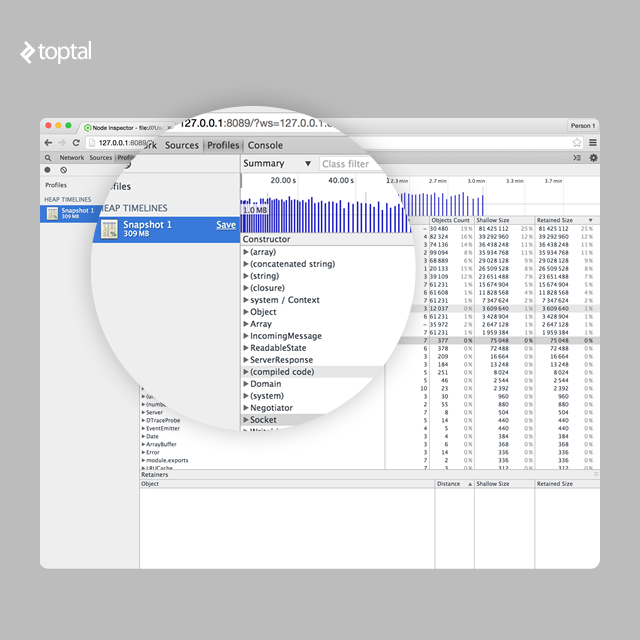

After gathering heap allocation snapshots over a 3-minute period, we observe a pattern similar to the following:

The presence of massive arrays, along with numerous IncomingMessage, ReadableState, ServerResponse, and Domain objects within the heap, becomes evident. Let’s delve into analyzing the leak’s origin.

By selecting the range between the 20s and 40s marks on the chart, we see only the objects allocated after the 20-second mark. This filters out the application’s regular baseline data.

Noting the object counts for each type, we extend the filter from 20 seconds to 1 minute. We notice that the already substantial arrays continue to expand. Under “(array),” we spot multiple “(object properties)” objects with consistent spacing. These objects are the culprits behind our memory leak.
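Conceptually, this comparison comes down to subtracting per-constructor object counts between two points in time and sorting the results by growth. The sketch below is an illustration only, since DevTools works from full snapshot data. The diffCounts helper and the counts passed to it are made up and are not measurements from this application.

```javascript
// Hypothetical helper: per-constructor growth between two heap summaries,
// largest growth first (those are the leak suspects).
function diffCounts(before, after) {
  const names = new Set([...Object.keys(before), ...Object.keys(after)]);
  const growth = [];
  for (const name of names) {
    const delta = (after[name] || 0) - (before[name] || 0);
    if (delta > 0) growth.push({ name, delta });
  }
  return growth.sort((a, b) => b.delta - a.delta);
}

// Made-up object counts at the 20 s and 60 s marks.
const at20s = { IncomingMessage: 1000, ServerResponse: 1000, '(closure)': 4000, Timeout: 50 };
const at60s = { IncomingMessage: 3000, ServerResponse: 3000, '(closure)': 12000, Timeout: 45 };

console.log(diffCounts(at20s, at60s));
```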

Furthermore, we observe a rapid increase in “(closure)” objects as well.

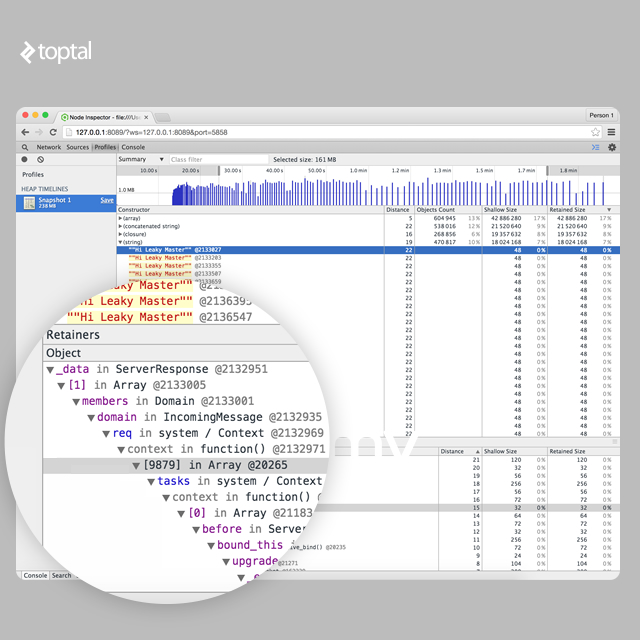

The strings list offers another clue: it contains a large number of copies of the phrase “Hi Leaky Master”.

In our scenario, we know that the string “Hi Leaky Master” is assembled exclusively within the “GET /” route.

Inspecting the retainers path, we discover that this string is referenced through req, leading to the creation of a context, which is then added to a massive array of closures.

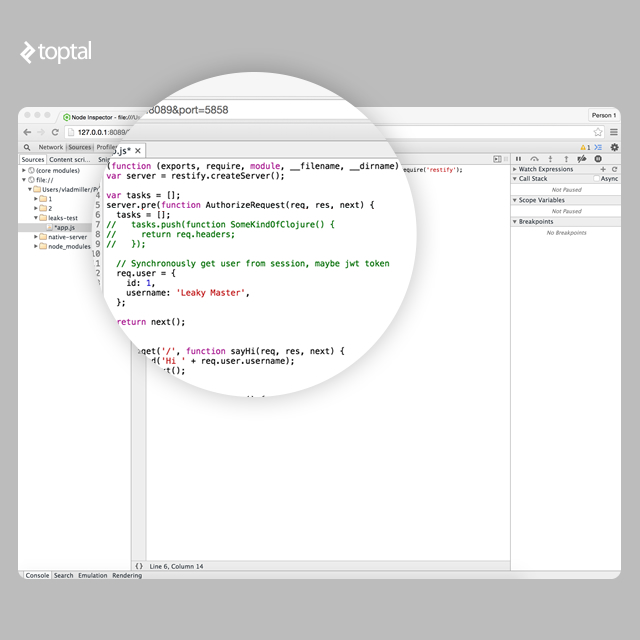

Armed with the knowledge that we’re dealing with a massive array of closures, let’s assign names to all our closures in real-time within the sources tab.

Once the code modifications are complete, a simple CTRL+S saves and recompiles the code on the fly!

Now, let’s capture another Heap Allocations Snapshot to identify the memory-hogging closures.

It becomes apparent that SomeKindOfClojure() is the root cause. We discover that SomeKindOfClojure() closures are being added to an array named tasks within the global scope.

This array is clearly useless, so we could simply comment it out. But how do we reclaim the memory it already occupies? The solution is straightforward: we assign an empty array to tasks. Once that assignment runs on the next request, the old array is no longer referenced, and its memory is freed during the subsequent garbage collection.
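The same fix can be sketched outside DevTools. This sketch assumes the same kind of module-level tasks array and reuses the SomeKindOfClojure closure name from the heap snapshot. handleHome and releaseTasks are hypothetical names. Assigning a new empty array drops the only reference to the old one, so a later garbage collection can reclaim everything it retained.

```javascript
let tasks = []; // declared with `let` so the binding can be replaced

// Illustrative handler: the named closure shows up by name in heap snapshots.
function handleHome(req, res) {
  tasks.push(function SomeKindOfClojure() {
    return req.url;
  });
  res.body = 'Hi Leaky Master';
}

// Assigning a new empty array drops the only reference to the old one, so the
// old array and every request object it retained become garbage.
function releaseTasks() {
  const released = tasks.length;
  tasks = [];
  return released;
}

for (let i = 0; i < 500; i++) handleHome({ url: '/' }, {});
console.log(releaseTasks(), tasks.length); // 500 0
```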

Dobby is indeed free!

Unveiling the Life Cycle of Garbage in V8

The V8 heap is segmented into several distinct spaces:

- New Space: This relatively small space, typically ranging from 1MB to 8MB, serves as the initial allocation area for most objects.

- Old Pointer Space: Objects that potentially hold pointers to other objects reside here. Objects that survive long enough in New Space are promoted to Old Pointer Space.

- Old Data Space: This space houses raw data such as strings, boxed numbers, and arrays of unboxed doubles. Objects that endure garbage collection in New Space for an extended period are also moved here.

- Large Object Space: Objects too large to fit in other spaces are created here. Each object in this space occupies its own mmap’ed memory region.

- Code Space: This space contains the assembly code generated by the JIT compiler.

- Cell Space, Property Cell Space, and Map Space: These spaces contain Cells, PropertyCells, and Maps, respectively, which simplifies garbage collection.
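A running process can list its own heap spaces with the built-in v8.getHeapSpaceStatistics(). The sketch below tabulates them. The names depend on the V8 version: recent releases report a single old_space rather than the separate old pointer and old data spaces described above for V8 3.28.

```javascript
const v8 = require('v8');

// Summarize each V8 heap space of the current process in kilobytes.
function describeHeapSpaces() {
  return v8.getHeapSpaceStatistics().map((space) => ({
    name: space.space_name,
    usedKB: Math.round(space.space_used_size / 1024),
    sizeKB: Math.round(space.space_size / 1024),
  }));
}

console.table(describeHeapSpaces());
```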

Each space comprises pages, which are memory regions allocated from the operating system using mmap. Pages are typically 1MB in size, except for those within the large object space.

V8 employs two primary garbage collection mechanisms: Scavenge, and Mark-Sweep & Mark-Compact.

Scavenge is a highly efficient garbage collection technique operating on objects within New Space. Essentially an implementation of Cheney’s Algorithm, Scavenge divides New Space into two equal semi-spaces: To-Space and From-Space. When To-Space becomes full, a Scavenge garbage collection cycle is triggered. This process involves swapping To-Space and From-Space, copying live objects to To-Space, promoting objects that have survived two scavenges to one of the old spaces, and completely erasing the old From-Space. While scavenges are remarkably fast, they come with the overhead of maintaining a doubled heap size and constantly copying objects in memory. However, their use is justified by the ephemeral nature of most objects.
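To make the copying process concrete, here is a toy model of a Cheney-style scavenge with promotion. It is an illustration and not V8's implementation. Objects are plain records, and there is no write barrier or remembered set. One queue is used for both to-space and promoted objects, whereas V8 scans to-space directly and keeps a separate promotion queue.

```javascript
// Toy model of a Cheney-style scavenge with promotion (illustrative only).
const PROMOTION_AGE = 2; // promote on surviving a second scavenge

function makeObject(name, refs = []) {
  return { name, refs, age: 0, space: 'new', forward: null };
}

// Copies everything reachable from `roots` out of from-space. Returns the
// updated roots and the new to-space; promoted objects go into oldSpace.
function scavenge(roots, oldSpace) {
  const toSpace = [];
  const scanQueue = []; // copied objects whose references still need fixing

  function evacuate(obj) {
    if (obj.space === 'old') return obj; // old-space objects are not moved
    if (obj.forward) return obj.forward; // already copied: follow forwarding pointer
    const copy = { ...obj, refs: obj.refs.slice(), age: obj.age + 1, forward: null };
    if (copy.age >= PROMOTION_AGE) {
      copy.space = 'old';
      oldSpace.push(copy);
    } else {
      toSpace.push(copy);
    }
    obj.forward = copy;
    scanQueue.push(copy);
    return copy;
  }

  const newRoots = roots.map(evacuate);
  // Breadth-first scan: evacuating a child appends it to the queue, so the
  // loop ends once every reachable object has been copied.
  for (let scan = 0; scan < scanQueue.length; scan++) {
    const obj = scanQueue[scan];
    obj.refs = obj.refs.map(evacuate);
  }
  // Whatever was not copied is garbage; from-space can be reused wholesale.
  return { roots: newRoots, toSpace };
}

const oldSpace = [];
const leaf = makeObject('leaf');
const root = makeObject('root', [leaf]);
makeObject('garbage'); // never referenced from a root, so never copied

let heap = scavenge([root], oldSpace);
console.log(heap.toSpace.map((o) => o.name)); // [ 'root', 'leaf' ]
heap = scavenge(heap.roots, oldSpace);
console.log(oldSpace.map((o) => o.name)); // [ 'root', 'leaf' ]
```

The cost is proportional to the number of live objects rather than to the size of New Space. That is why the technique pays off when most objects die young.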

Mark-Sweep & Mark-Compact, also known as the full garbage collector, marks all live nodes, sweeps dead nodes, and defragments memory.
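A toy mark-sweep pass shows the two phases. This is an illustration and not V8's collector. It also shows that an unreachable reference cycle is collected, because marking starts only from the roots.

```javascript
// Toy mark-sweep (illustrative only): the heap is an array of
// { name, refs, marked } records.
function markSweep(heap, roots) {
  // Mark: iterative depth-first traversal from the roots.
  const stack = [...roots];
  while (stack.length > 0) {
    const obj = stack.pop();
    if (obj.marked) continue;
    obj.marked = true;
    stack.push(...obj.refs);
  }
  // Sweep: drop unmarked objects. Building a fresh, dense array also mimics
  // compaction; a real sweeper would put freed blocks on free lists instead.
  const live = heap.filter((obj) => obj.marked);
  for (const obj of live) obj.marked = false; // reset marks for the next cycle
  return live;
}

const makeNode = (name) => ({ name, refs: [], marked: false });
const a = makeNode('a');
const b = makeNode('b');
const c = makeNode('c');
const d = makeNode('d');
a.refs.push(b);
c.refs.push(d);
d.refs.push(c); // c <-> d form a cycle that nothing else references

const survivors = markSweep([a, b, c, d], [a]);
console.log(survivors.map((o) => o.name)); // [ 'a', 'b' ]
```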

Optimizing GC Performance and Debugging Tips

While high performance might not be as critical for web applications as for other software domains, preventing memory leaks remains paramount. During the mark phase of full garbage collection, the application is paused until the process completes. Consequently, a larger heap size directly translates into longer garbage collection pauses and increased user-perceived latency.

Name Your Closures and Functions

Assigning meaningful names to closures and functions significantly simplifies stack trace and heap inspection.

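A small illustration of the difference, using hypothetical function names. A function expression placed directly into an array gets no name. A named function expression carries its name into .name, stack traces, and heap snapshots.

```javascript
// Anonymous function expression stored in an array: no name can be inferred.
const anonymous = [function () {
  return new Error('trace').stack;
}][0];

// Named function expression: the name appears in stack traces and heap snapshots.
const named = [function parseUserPayload() {
  return new Error('trace').stack;
}][0];

console.log(JSON.stringify(anonymous.name)); // ""
console.log(named.name); // parseUserPayload
console.log(named().split('\n')[1].trim()); // starts with "at parseUserPayload"
```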

Avoid Large Objects in Frequently Executed Functions

Strive to minimize the use of large objects within hot functions, ensuring that data fits comfortably within New Space. CPU and memory-intensive operations should be offloaded to background processes. Additionally, avoid deoptimization triggers for hot functions, as optimized functions consume less memory than their non-optimized counterparts.

Optimize Hot Functions

Optimizing frequently executed functions not only makes them faster but also reduces their memory footprint, which results in less frequent garbage collection cycles. V8 flags such as --trace_opt and --trace_deopt report which functions get optimized and which get deoptimized.
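The sketch below runs a hypothetical hot function in a child process with --trace_opt and --trace_deopt, then keeps only the log lines that mention it. The workload is illustrative. The message format varies between V8 versions, so filtering on the function names is an assumption rather than a stable interface.

```javascript
const { spawnSync } = require('child_process');

// Illustrative workload: `run` calls the small `hotAdd` function millions of times.
const workload = `
  function hotAdd(a, b) { return a + b; }
  function run(n) {
    let sum = 0;
    for (let i = 0; i < n; i++) sum = hotAdd(sum, i & 1023);
    return sum;
  }
  let total = 0;
  for (let round = 0; round < 200; round++) total += run(1e5);
`;

const { stdout } = spawnSync(process.execPath, ['--trace_opt', '--trace_deopt', '-e', workload], {
  encoding: 'utf8',
  maxBuffer: 16 * 1024 * 1024,
});

// Keep only the V8 log lines that mention our functions.
const optLog = stdout.split('\n').filter((line) => /\b(hotAdd|run)\b/.test(line));
console.log(optLog.join('\n'));
```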

Minimize Polymorphism in Inline Caches (ICs) for Hot Functions

Inline Caches (ICs) accelerate code execution by caching object property access (obj.key) or simple function calls.

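The original snippet is not reproduced here. The following hypothetical reconstruction defines x(a, b) and calls it first with integers and then with other operand types, so the single + operation inside it has to handle progressively more cases.

```javascript
// Hypothetical reconstruction: one `+` operation inside x(a, b).
function x(a, b) {
  return a + b;
}

// Each call feeds the same site a new combination of operand types, so the
// type information V8 caches for it has to become more general.
const results = [
  x(1, 2),      // integers
  x('a', 'b'),  // strings
  x(1.5, 2),    // non-integer numbers
  x('a', 1),    // string + number
  x(null, 'b'), // null + string
];
console.log(results); // [ 3, 'ab', 3.5, 'a1', 'nullb' ]
```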

Upon the first invocation of x(a,b), V8 creates a monomorphic IC. Each later call that introduces a new combination of operand types replaces it with a polymorphic IC covering every type seen so far. After integers and strings, the IC supports both (level 2), and each further combination raises the level again.

However, there’s a limit. When the IC level reaches 5 (configurable via the --max_inlining_levels flag), the function becomes megamorphic and is no longer considered optimizable.

Intuitively, monomorphic functions exhibit the best performance and smallest memory footprint.

Avoid Loading Large Files into Memory

This well-established practice advises against loading large files, such as CSV files, entirely into memory. Instead, process them in smaller chunks, reading and processing line by line. In most cases, a single CSV line won’t exceed 1MB, allowing it to fit within New Space.
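The following minimal sketch uses the built-in readline module, which reads a stream incrementally and yields one line at a time. The sumColumn helper, the data.csv file name, and the naive comma split (which does not handle quoted fields) are illustrative assumptions.

```javascript
const readline = require('readline');
const { Readable } = require('stream');

// Hypothetical helper: sums one numeric CSV column without ever holding
// the whole file in memory.
async function sumColumn(input, columnIndex) {
  const lines = readline.createInterface({ input, crlfDelay: Infinity });
  let total = 0;
  let isHeader = true;
  for await (const line of lines) {
    if (isHeader) {
      isHeader = false; // skip the header row
      continue;
    }
    const value = Number(line.split(',')[columnIndex]);
    if (!Number.isNaN(value)) total += value;
  }
  return total;
}

// An in-memory stream stands in for fs.createReadStream('data.csv') here;
// note that a chunk boundary falls in the middle of the "2,20" line.
const sample = Readable.from(['id,amount\n1,10\n2,', '20\n3,30\n']);
sumColumn(sample, 1).then((total) => console.log(total)); // 60
```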

Prevent Main Server Thread Blocking

Offload time-consuming operations, such as image resizing, from hot API endpoints to separate threads or background jobs. CPU-intensive tasks can block the main thread, causing other requests to queue up and potentially leading to memory issues as unprocessed request data accumulates.
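On current Node.js versions, one way to keep the main thread responsive is the built-in worker_threads module, which is not available in the v0.12 release used in this article. In the sketch below, an arithmetic loop stands in for CPU-heavy work such as image resizing. runInWorker is a hypothetical helper.

```javascript
const { Worker } = require('worker_threads');

// Stand-in for CPU-heavy work such as image resizing (illustrative only).
const workerSource = `
  const { parentPort, workerData } = require('worker_threads');
  let acc = 0;
  for (let i = 0; i < workerData.iterations; i++) acc = (acc + i * i) % 1000003;
  parentPort.postMessage(acc);
`;

// Hypothetical helper: runs the job on a separate thread and resolves with its result.
function runInWorker(iterations) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: { iterations } });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', (code) => {
      if (code !== 0) reject(new Error(`worker stopped with exit code ${code}`));
    });
  });
}

// The event loop stays free to serve other requests while the worker computes.
runInWorker(1e6).then((result) => console.log('worker result:', result));
```

Spawning a worker per request is itself costly, so a real server would usually keep a small pool of workers or hand the job to a background queue.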

Generate Data Only When Necessary

Avoid generating unnecessary data. For instance, generating detailed error objects with stack traces for invalid URLs can lead to excessive memory consumption, especially under high load. While such data proves useful during development, it’s often unnecessary in production.
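As an illustration, the hypothetical notFoundBody helper below creates an Error, and so captures a stack trace, only when the caller asks for details. Tying that choice to NODE_ENV is an assumption made for the example.

```javascript
// Hypothetical 404 helper: only build an Error (and capture its stack trace)
// when the details will actually be used.
function notFoundBody(url, { includeStack = false } = {}) {
  if (!includeStack) {
    // Small, fixed payload for production traffic: no Error object, no stack capture.
    return { status: 404, message: 'Not Found' };
  }
  const error = new Error(`No route for ${url}`);
  return { status: 404, message: error.message, stack: error.stack };
}

// Assumption: detailed errors are wanted only when NODE_ENV is "development".
const isDevelopment = process.env.NODE_ENV === 'development';
console.log(notFoundBody('/no/such/page', { includeStack: isDevelopment }));
```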

Master Your Tools

Familiarize yourself with the array of debuggers, leak detectors, and usage graph generators available. These tools are invaluable for enhancing software performance and efficiency.

Conclusion

A solid understanding of V8’s garbage collection and code optimization mechanisms is crucial for achieving optimal application performance. V8 compiles JavaScript to native assembly, and well-written code can often achieve performance comparable to applications compiled with traditional languages like C++.

And in case you’re wondering, the revamped API application for my Toptal client, while still open to further refinement, is performing admirably!

It’s worth noting that the Node.js Foundation recently released a new version of Node.js incorporating one of the latest V8 versions. While some applications written for Node.js v0.12.x might not run unchanged on the new v4.x release, the newer version brings substantial performance and memory usage improvements.
