The intelligence in artificial intelligence comes from the massive datasets used to train machine learning (ML) models. Cutting-edge language models, such as GPT-4 and Gemini, are trained on trillions of small data units known as tokens. This training dataset is not just raw data taken from the internet. For effective training, the data needs to be labeled.

Data labeling adds context and meaning to raw information by annotating or tagging it. By effectively highlighting what the system should learn, this process improves model training accuracy. Examples of data labeling include sentiment analysis in text, object identification in images, audio transcription, and action labeling in video sequences.

Unsurprisingly, data labeling quality significantly impacts training. The saying “garbage in, garbage out,” attributed to William D. Mellin in 1957, is a common phrase in machine learning for a reason. ML models trained on inaccurate or inconsistent labels struggle to adapt to new data and may exhibit biases in predictions, resulting in inaccurate output. Additionally, low-quality data can compound, leading to further complications.

This guide to data labeling systems will help you improve data quality and gain a competitive advantage, regardless of your current annotation stage. We’ll begin by examining the platforms and tools that make up a data labeling architecture, comparing different technologies. We’ll then explore key aspects like bias reduction, privacy protection, and maximizing labeling accuracy.

Understanding Data Labeling within the ML Pipeline

Machine learning model training usually falls into three types: supervised, unsupervised, and reinforcement learning. Supervised learning uses labeled training data, which consists of input data points with their corresponding correct output labels. The model learns to map input features to output labels, allowing it to make predictions on unseen input data. This differs from unsupervised learning, which analyzes unlabeled data to find hidden patterns or data groupings. In reinforcement learning, training involves a trial-and-error approach, with human involvement primarily in the feedback stage.

Supervised learning is the method used to train most modern machine learning models. High-quality training data is crucial and needs consideration at every stage of the training pipeline, with data labeling playing a vital role.

Before labeling, data undergoes collection and preprocessing. Raw data originates from various sources, including sensors, databases, log files, and application programming interfaces (APIs). This data often lacks a standardized structure or format and contains inconsistencies like missing values, outliers, or duplicates. Preprocessing involves cleaning, formatting, and transforming the data for consistency and compatibility with data labeling. Different techniques can be used, such as removing rows with missing values or updating them using imputation, a statistical estimation method. Outliers can be flagged for further examination.
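
The cleaning steps described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not a production pipeline. The function name `preprocess`, the field names, and the choice of median imputation with a modified z-score for outlier flagging are all assumptions made for the example, and the sketch assumes at least one non-missing value exists for the field.

```python
from statistics import median

def preprocess(rows, field, z_thresh=3.5):
    """Deduplicate rows, impute missing `field` values with the median,
    and flag (not drop) outliers using the modified z-score."""
    # 1. Remove exact duplicates while preserving order.
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))

    # 2. Impute missing values with the median of the observed values.
    observed = [r[field] for r in unique if r[field] is not None]
    med = median(observed)
    for r in unique:
        r["imputed"] = r[field] is None
        if r["imputed"]:
            r[field] = med

    # 3. Flag outliers via the median absolute deviation (MAD).
    mad = median(abs(v - med) for v in observed)
    for r in unique:
        score = 0.6745 * abs(r[field] - med) / mad if mad else 0.0
        r["outlier"] = score > z_thresh
    return unique

# Hypothetical sensor readings: one duplicate, one missing value, one outlier.
readings = [
    {"id": 1, "temp": 20.0},
    {"id": 1, "temp": 20.0},
    {"id": 2, "temp": None},
    {"id": 3, "temp": 21.0},
    {"id": 4, "temp": 19.0},
    {"id": 5, "temp": 95.0},
]
cleaned = preprocess(readings, "temp")
```

Outliers are only flagged here, so a reviewer can decide whether each one is a sensor fault or a genuine rare event worth labeling.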

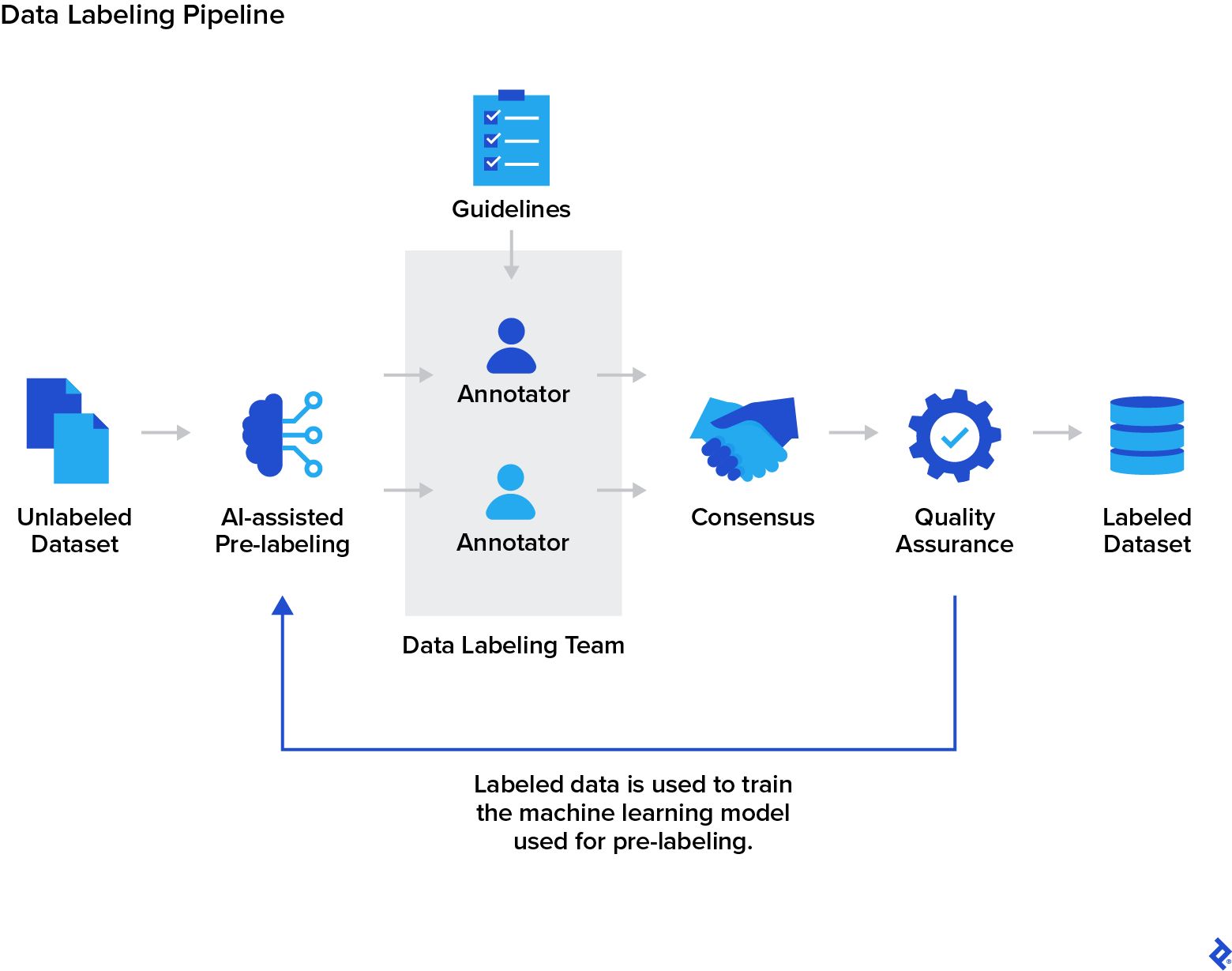

Once preprocessed, the data is labeled or annotated to provide the ML model with necessary learning information. The specific method depends on the data type being processed, as image annotation differs from text annotation. While automated tools exist, human involvement significantly benefits the process, especially for accuracy and avoiding AI-introduced biases. The quality assurance (QA) stage follows labeling, ensuring label accuracy, consistency, and completeness. QA teams often use double-labeling, where multiple labelers independently annotate a subset of data, comparing and resolving discrepancies.

The model then undergoes training with the labeled data to learn patterns and input-label relationships. Its parameters are iteratively adjusted for prediction accuracy regarding the labels. To evaluate the model’s effectiveness, it’s tested on unseen labeled data. Metrics such as accuracy, precision, and recall quantify its predictions. If the model performs poorly, adjustments like improving training data to address noise, biases, or data labeling issues can be made before retraining. Finally, the model is ready for deployment in a production environment with real-world data. Continuous performance monitoring is crucial to identify issues requiring updates or retraining.
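
To make the evaluation metrics concrete, here is a small sketch that computes accuracy, precision, and recall for a binary classifier from held-out labels and predictions. The function name `binary_metrics` and the sample data are illustrative assumptions.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, and recall for one positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        # Of everything predicted positive, how much was right?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of everything actually positive, how much was found?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Hypothetical held-out labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
```

Low recall on a class can indicate that its examples were under-labeled or inconsistently labeled, which is one reason to revisit the training data before retraining.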

Different Data Labeling Types and Methods

Before building a data labeling architecture, identify all data types requiring labeling. Data comes in various forms, including text, images, video, and audio. Each type presents unique challenges, demanding a specific approach for accurate and consistent labeling. Furthermore, some data labeling software includes annotation tools tailored for particular data types. Many annotators and annotation teams specialize in labeling specific data types. Software and team selection will depend on the project requirements.

For instance, computer vision data labeling might involve categorizing digital images and videos, creating bounding boxes to annotate objects within them. Waymo’s publicly available Open Dataset is an example of a labeled computer vision dataset used for autonomous driving. It was labeled through a combination of private and crowdsourced data labelers. Other applications for computer vision include medical imaging, surveillance, security, and augmented reality.

Natural language processing (NLP) algorithms analyze and process text that can be labeled in various ways. This includes sentiment analysis (identifying positive or negative emotions), keyword extraction (finding relevant phrases), and named entity recognition (identifying specific people or places). Text blurbs can also be classified, for example, as spam or identifying the text’s language. NLP models have applications in chatbots, coding assistants, translators, and search engines.

Audio data has applications in sound classification, voice recognition, speech recognition, and acoustic analysis. Audio files might be annotated to identify specific words or phrases (like “Hey Siri”), classify different types of sounds, or transcribe spoken words into text.

Many ML models are multimodal, meaning they can interpret information from multiple sources simultaneously. For example, a self-driving car might combine visual information like traffic signs and pedestrians with audio data such as a honking horn. In multimodal data labeling, human annotators label and combine different data types, capturing their relationships and interactions.

Another factor to consider before building your system is the appropriate data labeling method for your use case. Human annotators have traditionally performed data labeling. However, advancements in ML are boosting automation, making the process more efficient and cost-effective. Although automated labeling tools are improving in accuracy, they still can’t match the accuracy and reliability of human labelers.

Human-in-the-loop (HTL) data labeling combines the strengths of human annotators and software. In HTL, AI automates the initial label creation, followed by human validation and correction. These corrected annotations are added to the training dataset to improve the software’s performance. HTL offers efficiency and scalability while maintaining accuracy and consistency, making it the most popular data labeling method currently.
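
One common way to organize this loop, sketched below, is to route machine-generated labels by model confidence so that annotators spend their time on the uncertain items. The confidence threshold, the `PreLabel` type, and the function names are assumptions for illustration. In a stricter workflow, every pre-label would go to human review.

```python
from dataclasses import dataclass

@dataclass
class PreLabel:
    item_id: str
    label: str         # label proposed by the model
    confidence: float  # model's confidence in that label, 0..1

def route(prelabels, threshold=0.9):
    """Split pre-labels into auto-accepted and needs-human-review lists."""
    auto, review = [], []
    for p in prelabels:
        (auto if p.confidence >= threshold else review).append(p)
    return auto, review

def apply_corrections(review_queue, corrections):
    """Merge human corrections (item_id -> label) into the reviewed items.
    Items without a correction keep the model's label as validated."""
    return [(p.item_id, corrections.get(p.item_id, p.label)) for p in review_queue]

prelabels = [
    PreLabel("a", "cat", 0.97),
    PreLabel("b", "dog", 0.55),
    PreLabel("c", "cat", 0.62),
]
auto, review = route(prelabels)
# A human reviewer changes item "b" and confirms item "c".
validated = apply_corrections(review, {"b": "cat"})
```

The `validated` pairs are what would be fed back into the training set to improve the pre-labeling model.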

Building a Data Labeling System: Choosing Your Components

When designing a data labeling architecture, choosing the right tools is crucial for an efficient and reliable annotation workflow. Various tools and platforms are designed to optimize data labeling. However, depending on your project’s needs, building a data labeling pipeline with in-house tools may be the most suitable approach.

Core Data Labeling Workflow Steps

The labeling pipeline starts with data collection and storage. Information can be gathered manually through techniques like interviews, surveys, or questionnaires, or automatically through methods like web scraping. If you lack the resources for large-scale data collection, open-source datasets from platforms like [Kaggle](https://www.kaggle.com/), UCI Machine Learning Repository, Google Dataset Search, and GitHub provide good alternatives. Additionally, artificial data generation using mathematical models can augment real-world data. Cloud platforms like Amazon S3, Google Cloud Storage, or Microsoft Azure Blob Storage offer scalable storage, virtually unlimited capacity, and built-in security features. However, on-premise storage is typically necessary for highly sensitive data with regulatory compliance requirements.

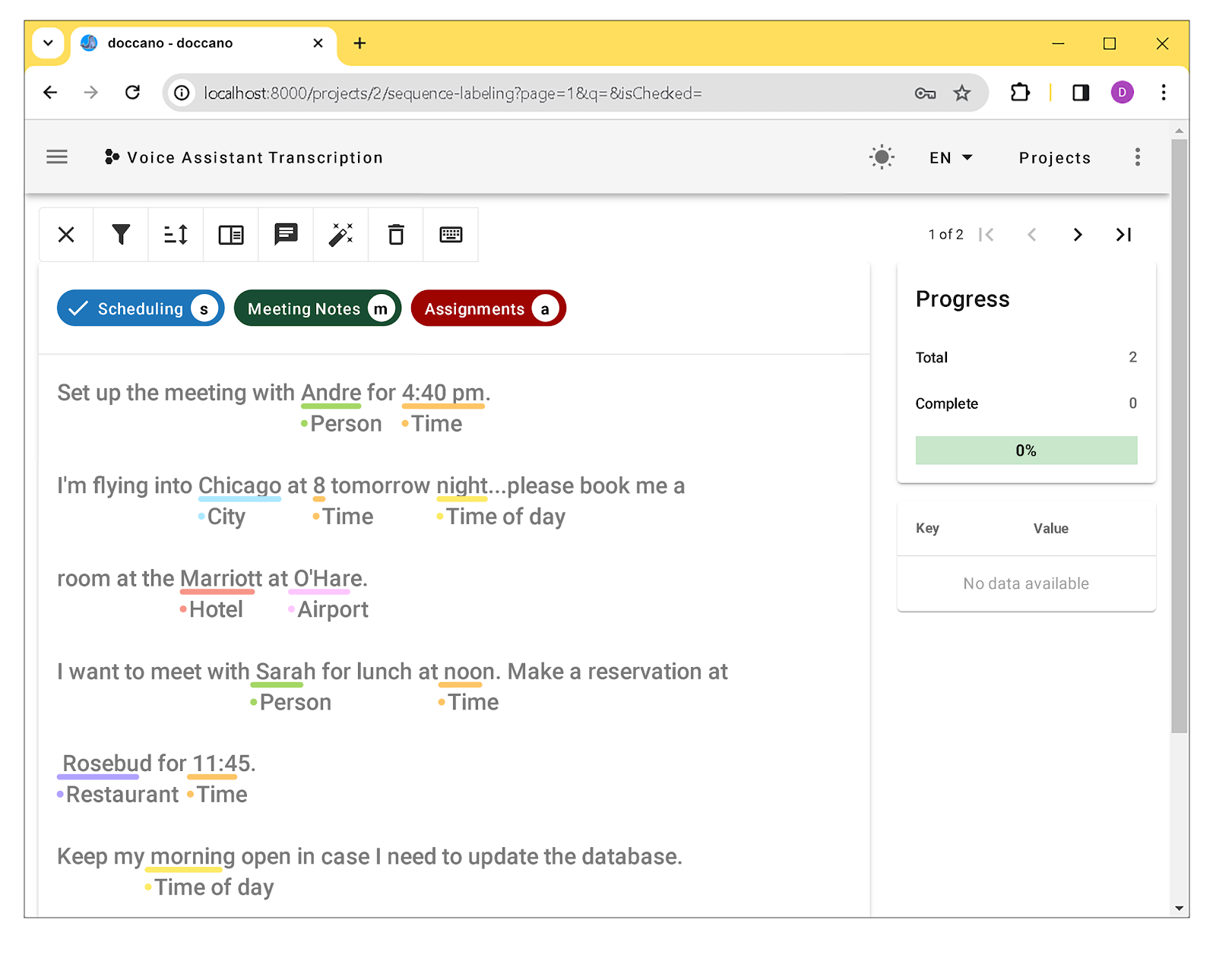

The labeling process can begin once data is collected. While the annotation workflow varies depending on data types, each significant data point is generally identified and classified using an HTL approach. Numerous platforms streamline this complex process, including open-source (Doccano, Label Studio, CVAT) and commercial (Scale Data Engine, Labelbox, Supervisely, Amazon SageMaker Ground Truth) annotation tools.

A QA team reviews the created labels for accuracy. Inconsistencies are typically addressed at this stage through manual approaches like majority decision, benchmarking, and expert consultation. Automated methods can also mitigate inconsistencies. For example, statistical algorithms like the Dawid-Skene model can aggregate labels from multiple annotators into a single, more reliable label. Once key stakeholders agree on the correct labels, they become the “ground truth” for training ML models. While many free and open-source tools offer basic QA workflow and data validation functionality, commercial tools provide advanced features like machine validation, approval workflow management, and quality metrics tracking.
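
The simplest of these aggregation strategies, majority decision, can be sketched as follows. This is plain majority voting, not the Dawid-Skene model, which additionally estimates each annotator's reliability. The function name and the rule that ties are escalated to an expert are assumptions for the example.

```python
from collections import Counter

def aggregate_majority(votes, min_share=0.5):
    """votes maps item_id -> list of labels from different annotators.
    Returns (resolved labels, item_ids escalated for expert review)."""
    resolved, escalated = {}, []
    for item_id, labels in votes.items():
        top_label, count = Counter(labels).most_common(1)[0]
        # Accept only a strict majority; ties and splits go to an expert.
        if count / len(labels) > min_share:
            resolved[item_id] = top_label
        else:
            escalated.append(item_id)
    return resolved, escalated

votes = {
    "img1": ["cat", "cat", "dog"],
    "img2": ["cat", "dog"],
    "img3": ["dog", "dog", "dog"],
}
resolved, escalated = aggregate_majority(votes)
```

Majority voting treats every annotator as equally reliable. When some labelers are consistently better than others, a weighted model such as Dawid-Skene is usually the better fit.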

Data Labeling Tool Comparison

Open-source tools are a good starting point for data labeling. Despite their limited functionality compared to commercial tools, the absence of licensing fees is a significant advantage for smaller projects. While commercial tools often feature AI-assisted pre-labeling, many open-source tools support it when connected to an external ML model.

| Name | Supported data types | Workflow management | QA | Support for cloud storage | Additional notes |
|---|---|---|---|---|---|
| Label Studio Community Edition | | Yes | No | | |
| CVAT | | Yes | Yes | | |
| Doccano | | Yes | No | | |
| VIA (VGG Image Annotator) | | No | No | No | |
| | | No | No | No | |

While open-source platforms offer much of the necessary data labeling functionality, complex machine learning projects requiring advanced annotation features, automation, and scalability benefit from a commercial platform. These platforms offer added security features, technical support, comprehensive pre-labeling functionality (assisted by included ML models), and dashboards for visualizing analytics, making them worthwhile investments in most cases.

| Name | Supported data types | Workflow management | QA | Support for cloud storage | Additional notes |
|---|---|---|---|---|---|
| Labelbox | | Yes | Yes | | |
| Supervisely | | Yes | Yes | | |
| Amazon SageMaker Ground Truth | | Yes | Yes | | |
| Scale AI Data Engine | | Yes | Yes | | |
| | | Yes | Yes | | |

Building an in-house data labeling platform may be the solution if you need features unavailable in existing tools or if your project involves highly sensitive data. This allows you to tailor support for specific data formats and annotation tasks, as well as design custom pre-labeling, review, and QA workflows. However, building and maintaining a platform comparable to commercial platforms is cost-prohibitive for most companies.

The choice ultimately depends on your feature requirements, data sensitivity, and budget. Some projects might benefit from a hybrid approach, using a commercial platform for core labeling tasks and developing custom functionality in-house.

Maintaining Quality and Security in Data Labeling Systems

The data labeling pipeline is a complex system involving massive data, multiple infrastructure levels, a team of labelers, and a sophisticated, multi-layered workflow. Combining these components into a seamless system is challenging. Issues like privacy and security are ever-present, and other challenges can impact labeling quality, reliability, and efficiency.

Enhancing Labeling Accuracy

Automation can expedite labeling, but overreliance on automated labeling tools can compromise accuracy. Data labeling tasks often demand contextual awareness, domain expertise, or subjective judgment, which software algorithms can’t yet provide. Creating clear human annotation guidelines and implementing error detection mechanisms are two effective methods to ensure data labeling quality.

Minimizing annotation errors starts with comprehensive guidelines. Define all potential label classifications and specify label formats. Include step-by-step instructions in the annotation guidelines, covering ambiguity and edge cases. Provide various example annotations for labelers to follow, including straightforward and ambiguous data points.

Having multiple independent annotators label the same data point and comparing their results enhances accuracy. Inter-annotator agreement (IAA) is a crucial metric for measuring labeling consistency between annotators. A review process should be established for data points with low IAA scores to reach a consensus on a label. Setting a minimum IAA score threshold ensures the ML model only learns from data with high agreement among labelers.
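
For two annotators, one widely used IAA measure is Cohen's kappa, which corrects raw agreement for the agreement expected by chance. The sketch below is a minimal implementation. The sample labels are hypothetical, and the appropriate minimum threshold depends on the task.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items in the same order."""
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability both pick the same label independently,
    # given each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)

annotator_a = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg", "pos", "neg"]
annotator_b = ["pos", "pos", "neg", "pos", "pos", "neg", "neg", "neg", "pos", "neg"]
kappa = cohens_kappa(annotator_a, annotator_b)
```

Here the annotators agree on 8 of 10 items, but because half of that agreement could arise by chance, kappa is noticeably lower than the raw 80% figure. For more than two annotators, measures such as Fleiss' kappa or Krippendorff's alpha are typically used instead.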

Rigorous error detection and tracking significantly improve annotation accuracy. Software tools can automate error detection. Cleanlab, for example, uses a model’s predicted probabilities to flag labels that are likely wrong, while rule-based validators compare labeled data against predefined rules to detect inconsistencies or outliers. For images, the software might flag overlapping bounding boxes. For text, it can automatically detect missing annotations or incorrect label formats. All errors are highlighted for review by the QA team. Many commercial annotation platforms offer AI-assisted error detection, where an ML model pretrained on annotated data flags potential mistakes. These flagged and reviewed data points are then added to the model’s training data, improving its accuracy via active learning.
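
The rule-based checks mentioned above can be sketched for image annotations as follows. The annotation format (a dict with `label` and `box` keys), the function names, and the 0.8 overlap threshold are assumptions for illustration and do not reflect the API of any particular tool.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union else 0.0

def find_issues(annotations, allowed_labels, max_iou=0.8):
    """Return (annotation index, message) pairs for the QA team to review."""
    issues = []
    # Rule 1: every annotation needs a label from the agreed label set.
    for i, ann in enumerate(annotations):
        if ann.get("label") not in allowed_labels:
            issues.append((i, "invalid or missing label"))
    # Rule 2: near-identical boxes usually mean the same object was annotated twice.
    for i in range(len(annotations)):
        for j in range(i + 1, len(annotations)):
            if iou(annotations[i]["box"], annotations[j]["box"]) > max_iou:
                issues.append((i, f"overlaps annotation {j}"))
    return issues

annotations = [
    {"label": "car", "box": (0, 0, 10, 10)},
    {"label": "car", "box": (1, 0, 10, 10)},
    {"label": None, "box": (50, 50, 60, 60)},
]
issues = find_issues(annotations, allowed_labels={"car", "pedestrian"})
```

The pairwise overlap check is quadratic in the number of boxes per image, which is fine for typical images but would need a spatial index for very dense scenes.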

Error tracking provides valuable feedback to enhance the labeling process through continuous learning. Key metrics, such as label accuracy and consistency between labelers, are tracked. If labelers frequently make mistakes on specific tasks, determine the root causes. Many commercial data labeling platforms offer built-in dashboards to visualize labeling history and error distribution. Performance improvement methods include adjusting data labeling standards and guidelines to clarify ambiguous instructions, retraining labelers, or refining error detection algorithm rules.

Addressing Bias and Promoting Fairness

Data labeling heavily relies on personal judgment and interpretation, making it challenging for human annotators to create unbiased and fair labels. Data can be ambiguous. For example, when classifying text data, sentiments like sarcasm or humor are easily misinterpreted. Similarly, a facial expression in an image might be perceived as “sad” by some labelers and “bored” by others. This subjectivity can introduce bias.

The dataset itself can also be biased. Depending on the source, specific demographics and viewpoints might be over- or underrepresented. Training a model on biased data can lead to inaccurate predictions, for example, incorrect diagnoses due to bias in medical datasets.

To minimize bias in annotation, ensure the labeling and QA teams represent diverse backgrounds and perspectives. Double- and multi-labeling can also reduce individual bias impact. The training data should reflect real-world data, with a balanced representation of demographics, geographic location, and other relevant factors. Data can be collected from diverse sources, and if needed, augmented to address potential bias sources. Additionally, data augmentation techniques like image flipping or text paraphrasing can mitigate inherent biases by artificially increasing dataset diversity. These methods create variations of the original data point. Image flipping allows the model to recognize an object regardless of its orientation, reducing bias towards specific orientations. Text paraphrasing exposes the model to different ways of expressing the same information, reducing potential biases caused by specific words or phrasing.
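
As a small illustration of image flipping, the sketch below mirrors an image horizontally and transforms its bounding-box labels to match, so the existing annotations remain valid for the augmented copy. The image is represented as a plain list of pixel rows, and boxes as `(x_min, y_min, x_max, y_max)` with an exclusive `x_max`. Both representations are assumptions chosen to keep the example dependency-free.

```python
def hflip(image, boxes):
    """Mirror an image left-to-right and move its bounding boxes accordingly."""
    width = len(image[0])
    flipped_image = [row[::-1] for row in image]
    # A box spanning columns [x_min, x_max) lands at [width - x_max, width - x_min).
    flipped_boxes = [
        (width - x_max, y_min, width - x_min, y_max)
        for x_min, y_min, x_max, y_max in boxes
    ]
    return flipped_image, flipped_boxes

image = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
]
boxes = [(0, 0, 1, 2)]  # covers the leftmost column
flipped_image, flipped_boxes = hflip(image, boxes)
```

Flipping is not label-preserving for every task. For example, mirrored text or left/right traffic signs change meaning, so augmentation choices should be checked against the labeling guidelines.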

Incorporating an external oversight process can also help reduce bias. An external team comprising domain experts, data scientists, ML experts, and diversity and inclusion specialists can review labeling guidelines, evaluate workflow, and audit labeled data. This team can then recommend improvements to ensure a fair and unbiased process.

Data Privacy and Security

Data labeling projects often involve potentially sensitive information. All platforms should incorporate security features like encryption and multifactor authentication for user access control. To protect privacy, remove or anonymize personally identifiable information. Additionally, train every labeling team member on data security best practices, such as using strong passwords and avoiding accidental data sharing.
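
As a rough sketch of the anonymization step, the snippet below masks email addresses and phone-like numbers in text before it reaches annotators. The regular expressions are deliberately simple assumptions that catch only obvious patterns. Names, addresses, and other identifiers need dedicated tooling and human review.

```python
import re

# Simplified patterns for illustration; real-world formats vary widely.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace emails and phone-like numbers with placeholder tokens."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)

sample = "Contact jane.doe@example.com or +1 (555) 010-9999."
redacted = redact(sample)
```

Using placeholder tokens rather than deleting the text keeps sentence structure intact, which matters when the redacted text is later labeled for tasks like sentiment or intent.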

Data labeling platforms should also comply with the data privacy regulations that apply to your data, such as the General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA), and the Health Insurance Portability and Accountability Act (HIPAA). Many commercial data platforms have completed a SOC 2 Type 2 audit, meaning an external auditor has assessed their controls over a period of time against the trust services criteria: security, availability, processing integrity, confidentiality, and privacy.

Future-proofing Your Data Labeling System

Data labeling is a crucial yet often unseen process that plays a vital role in developing ML models and AI systems. Therefore, your labeling architecture must scale to accommodate changing requirements.

Commercial and open-source platforms undergo regular updates to support emerging data labeling needs. Similarly, develop in-house solutions with easy updates in mind. For example, modular design allows for component swapping without affecting the entire system. Additionally, integrating open-source libraries or frameworks adds adaptability, as they are constantly updated alongside industry advancements.
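
One way to get this kind of modularity in an in-house system is to have pipeline stages depend on a small interface rather than a concrete implementation. The sketch below uses a Python `Protocol` for the pre-labeling stage. All class and method names are hypothetical, and the keyword rule stands in for a real model.

```python
from typing import Protocol

class PreLabeler(Protocol):
    """Anything with a predict(text) -> label method can serve as a pre-labeler."""
    def predict(self, text: str) -> str: ...

class KeywordPreLabeler:
    """Placeholder rule-based pre-labeler."""
    def predict(self, text: str) -> str:
        return "spam" if "free money" in text.lower() else "ham"

class AlwaysHamPreLabeler:
    """Trivial baseline, useful for testing the pipeline wiring."""
    def predict(self, text: str) -> str:
        return "ham"

class LabelingPipeline:
    def __init__(self, prelabeler: PreLabeler):
        self.prelabeler = prelabeler  # swappable without touching the pipeline

    def run(self, texts):
        return [(t, self.prelabeler.predict(t)) for t in texts]

texts = ["FREE MONEY now", "Meeting at 3pm"]
keyword_results = LabelingPipeline(KeywordPreLabeler()).run(texts)
baseline_results = LabelingPipeline(AlwaysHamPreLabeler()).run(texts)
```

Replacing the keyword rule with an ML model later only requires a new class with the same `predict` method. Storage, review, and QA stages can follow the same pattern.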

Cloud-based solutions offer significant advantages for large-scale data labeling projects compared to self-managed systems. Cloud platforms can dynamically scale storage and processing power as needed, eliminating the need for expensive infrastructure upgrades.

As datasets grow, the annotating workforce must also scale. New annotators need quick training on accurate and efficient data labeling. Managed data labeling services or on-demand annotators can bridge the gap, enabling flexible scaling based on project needs. However, ensure the training and onboarding process can also scale in terms of location, language, and availability.

The accuracy of ML models depends on the quality of the labeled training data. Effective, hybrid data labeling systems have the potential to leverage AI and revolutionize how we approach various tasks, making almost every business more efficient.