When choosing a platform for running a demanding software application, AWS stands out for its flexibility in scaling resources on demand. It offers complete control over the environment, but that control requires upfront planning of the infrastructure and budget.

This article outlines an AWS cloud infrastructure solution designed and implemented for LEVELS, a social networking platform with integrated facial recognition payment processing. The system makes use of the benefits users hold in various loyalty programs, as well as their possessions and location data.

Requirements

The solution had to address two primary client requirements:

- Security

- Scalability

Security meant protecting user data from unauthorized access, both internal and external. Scalability meant the infrastructure had to keep up with product growth, automatically adjusting to traffic fluctuations and peaks, and had to provide automated failover and recovery to minimize downtime when servers fail.

AWS Security Concepts Overview

A major advantage of a custom AWS Cloud infrastructure is the network isolation and control it provides. This is a key reason to opt for Infrastructure as a Service (IaaS) over the simpler Platform as a Service (PaaS) models. While PaaS offers default security measures, it lacks the comprehensive, fine-grained control achievable with AWS cloud customization.

LEVELS was a relatively new product when the team approached Toptal for AWS expertise, but it was already committed to AWS. The team prioritized robust security measures to safeguard user data and privacy, and because they planned to add credit card processing, PCI-DSS compliance was essential.

Virtual Private Cloud (VPC)

AWS security begins with creating your own Amazon Virtual Private Cloud (VPC): a separate virtual network for hosting your AWS resources, logically isolated from other networks in the AWS Cloud. A VPC has its own dedicated IP address range, fully customizable subnets, route tables, network access control lists, and security groups (which act as virtual firewalls).

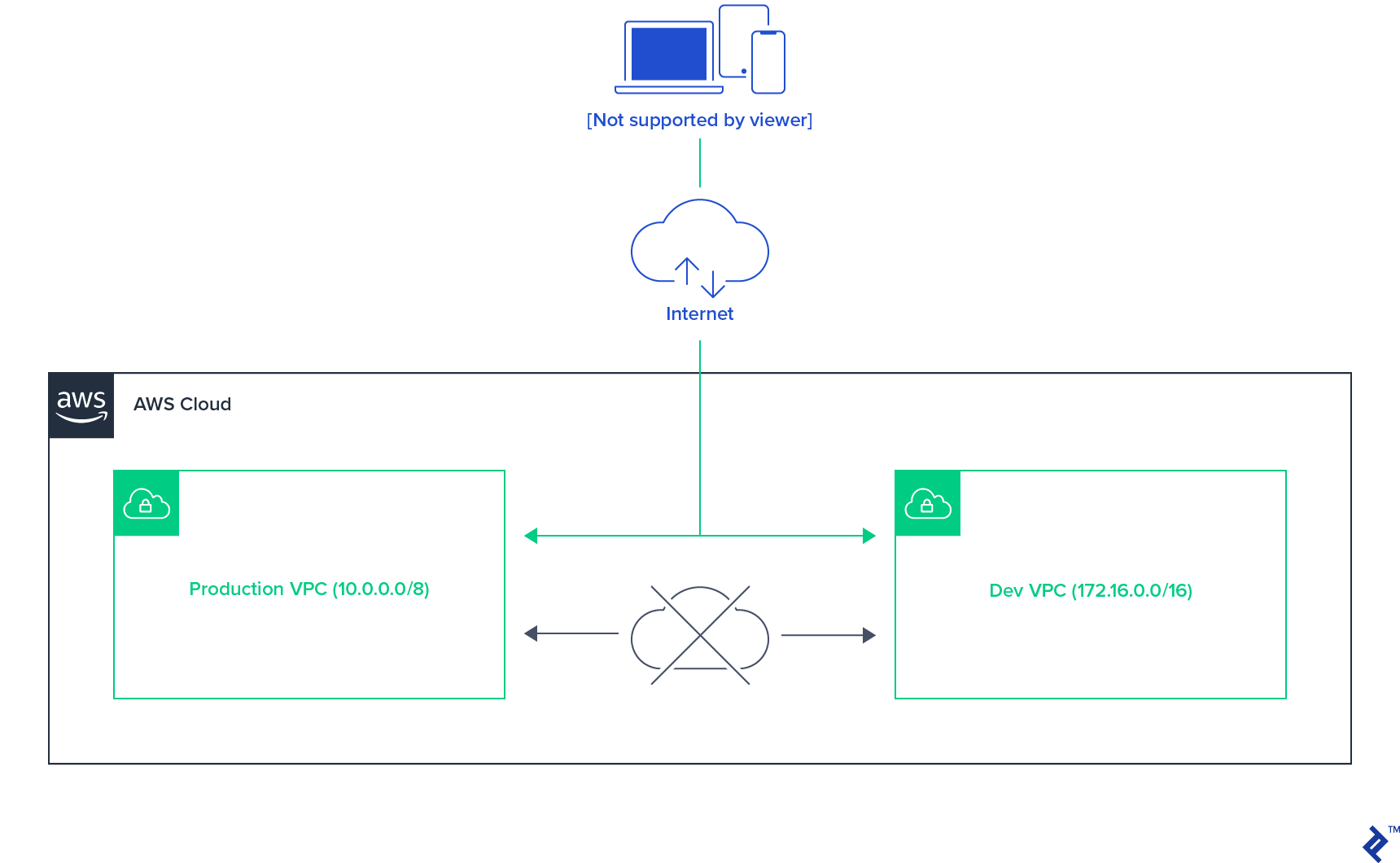

For LEVELS, the first step was separating the production environment from development, testing, and QA environments. Initially, each environment was planned as a fully isolated VPC, each running all necessary application services. However, to reduce costs, some resource sharing across the three non-production environments was deemed feasible.

Therefore, the final design utilized one VPC for the production environment and another for development, testing, and QA.

VPC-Level Access Isolation

This two-VPC setup isolates the production environment from the other three at the network level. This ensures that any accidental misconfiguration of the application cannot breach the production environment. Even if the non-production environment configuration mistakenly points to production resources like databases or message queues, access would be blocked.
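Between two VPCs that are not peered there is simply no private route, so the block happens at the network level. Production resources can additionally admit traffic only from the production VPC's address range, for example through security group rules whose source is that CIDR block. The toy model below illustrates that second layer; the CIDR blocks and names are hypothetical, not LEVELS' actual values.

```python
import ipaddress

# Hypothetical address ranges for the two VPCs.
PRODUCTION_VPC = ipaddress.ip_network("10.0.0.0/16")
NON_PRODUCTION_VPC = ipaddress.ip_network("10.1.0.0/16")

# The production database only accepts connections originating in the production VPC,
# similar to a security group rule whose source is the VPC's CIDR block.
PRODUCTION_DB_ALLOWED_SOURCES = [PRODUCTION_VPC]


def is_allowed(source_ip: str, allowed_sources: list[ipaddress.IPv4Network]) -> bool:
    """Return True if the source address falls inside any allowed network."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in allowed_sources)


# A production API server reaches the production database...
print(is_allowed("10.0.12.34", PRODUCTION_DB_ALLOWED_SOURCES))
# ...while a QA server misconfigured to point at production is rejected.
print(is_allowed("10.1.5.6", PRODUCTION_DB_ALLOWED_SOURCES))
```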

While the shared VPC of the development, testing, and QA environments allows cross-boundary access in case of misconfiguration, it poses no significant security risk due to the use of test data in these environments.

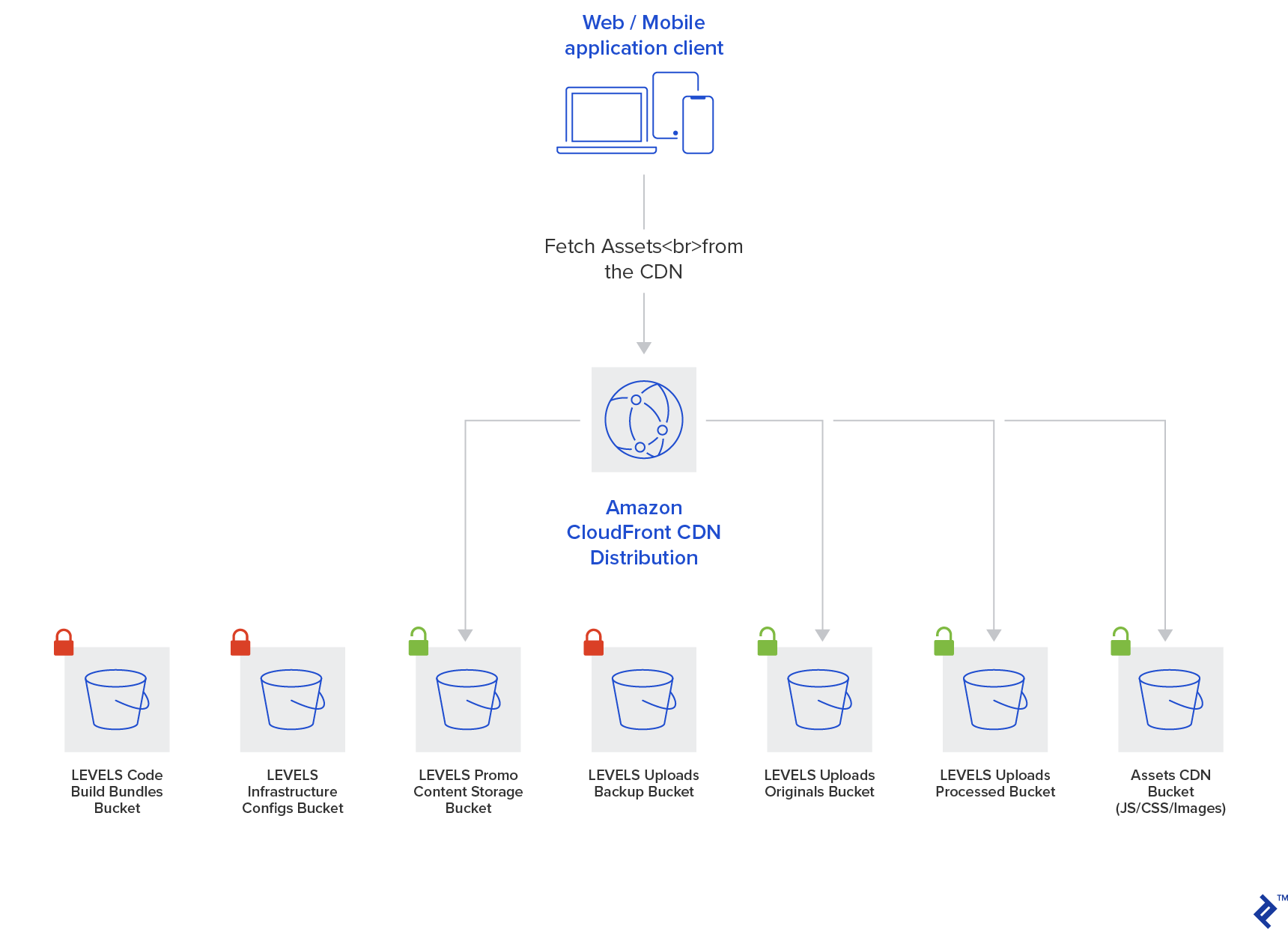

Asset Storage Security Model

Assets are housed in the Amazon Simple Storage Service (S3) object storage. The S3 storage operates independently of VPCs and uses a distinct security model. Storage is organized into separate S3 buckets for each environment and/or asset class, with appropriate access rights assigned to each bucket.

Several asset classes were defined for LEVELS: user uploads, LEVELS-generated content (such as promotional videos), web application and UI assets (like JS code, icons, and fonts), application and infrastructure configuration files, and server-side deployment packages.

Each of these categories is assigned a separate S3 bucket.
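A minimal sketch of the bucket-per-environment-and-asset-class layout, assuming a hypothetical `levels-<environment>-<asset-class>` naming scheme (the article does not give real bucket names). The name check is a simplified subset of the S3 naming rules; classes that need no per-environment split could be collapsed into a single bucket.

```python
import re
from itertools import product

# Asset classes named in the article; the short identifiers are hypothetical.
ASSET_CLASSES = {
    "user-uploads": "User uploads",
    "generated-content": "LEVELS-generated content, such as promotional videos",
    "web-assets": "Web application and UI assets (JS code, icons, fonts)",
    "config": "Application and infrastructure configuration files",
    "deployments": "Server-side deployment packages",
}
ENVIRONMENTS = ("production", "development", "testing", "qa")


def bucket_name(environment: str, asset_class: str, prefix: str = "levels") -> str:
    """Compose a bucket name from environment and asset class (hypothetical scheme)."""
    return f"{prefix}-{environment}-{asset_class}"


def is_valid_bucket_name(name: str) -> bool:
    """Simplified S3 rule check: 3 to 63 chars of lowercase letters, digits, dots, hyphens."""
    return re.fullmatch(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]", name) is not None


# One bucket per (environment, asset class) pair, so access rights can differ per bucket.
BUCKETS = [bucket_name(env, cls) for env, cls in product(ENVIRONMENTS, ASSET_CLASSES)]
```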

Application Secrets Management

AWS offers two managed key-value services for storing secrets securely: the encrypted AWS Systems Manager Parameter Store and AWS Secrets Manager. (Learn more at Linux Academy and 1Strategy.)

AWS manages the underlying encryption keys and handles encryption and decryption. Read and write permissions can be granted per key prefix, both to infrastructure components (servers) and to users (developers).
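Prefix-based permissions can be modeled as a list of grants, each allowing one principal one action on every key under a path prefix. In AWS itself this would be expressed as IAM policies whose resources are parameter ARNs ending in a path wildcard; the sketch below only illustrates the matching logic, and the principals and paths are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    principal: str  # e.g. a server role or a developer group (hypothetical names)
    action: str     # "read" or "write"
    prefix: str     # hierarchical key prefix the grant covers


# Hypothetical grants: production servers only read their own secrets,
# developers can read and write development secrets only.
GRANTS = [
    Grant("production-api-server", "read", "/production/api/"),
    Grant("developers", "read", "/development/"),
    Grant("developers", "write", "/development/"),
]


def is_permitted(principal: str, action: str, key: str, grants=GRANTS) -> bool:
    """Allow the action only if a grant for this principal covers the key's prefix."""
    return any(
        g.principal == principal and g.action == action and key.startswith(g.prefix)
        for g in grants
    )
```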

Server SSH Access

In an ideal fully automated and immutable environment, SSH access to servers should be unnecessary. Logs can be exported to dedicated logging services, and monitoring is delegated to a separate monitoring service. However, occasional SSH access might be required for configuration checks and debugging.

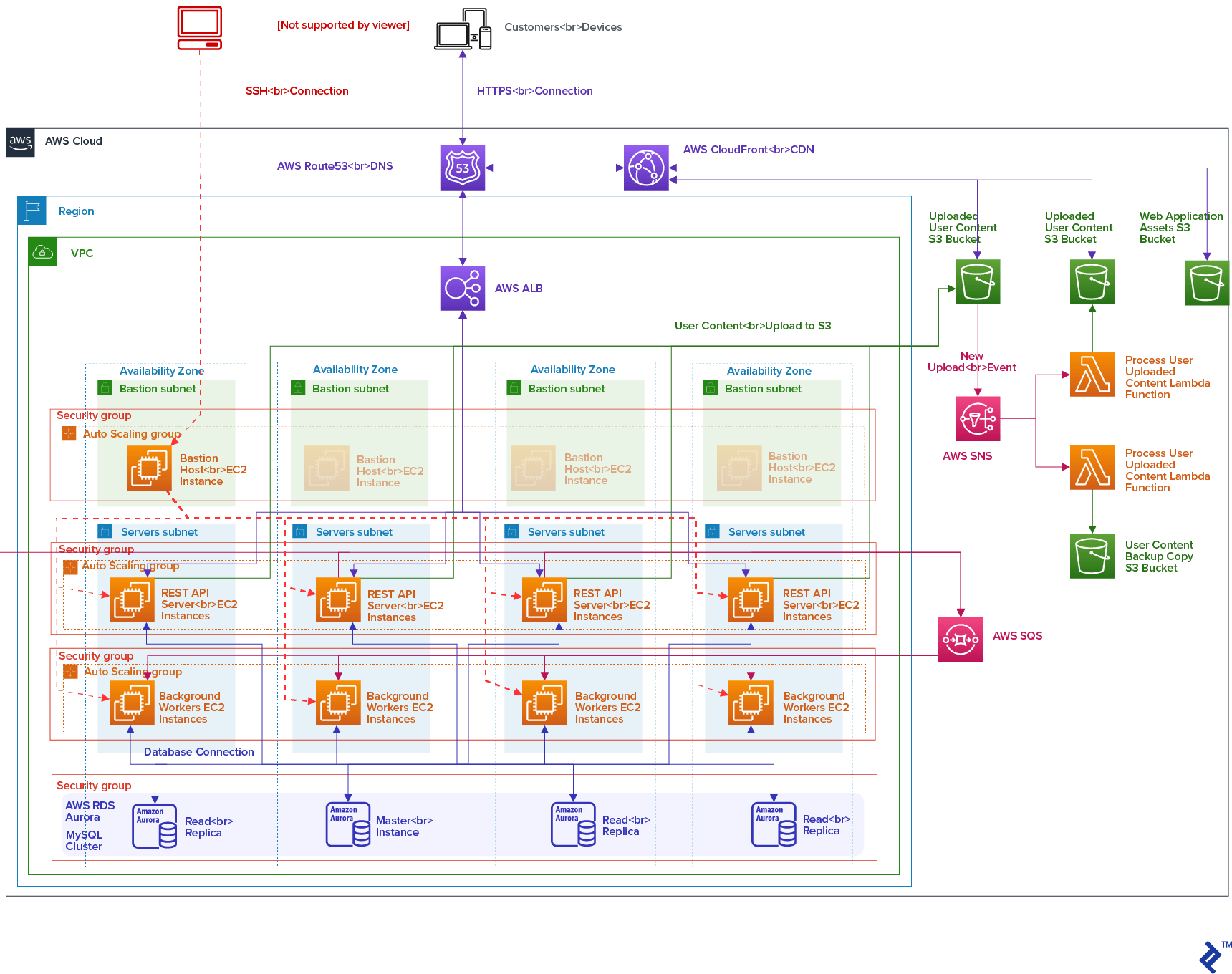

To minimize server vulnerability, the SSH port isn’t publicly exposed. Access to servers is routed through a bastion host, a dedicated machine permitting external SSH access. This host is further secured by a firewall that whitelists only permitted IP address ranges. Access control is maintained by deploying users’ public SSH keys to the respective boxes (password logins are disabled). This setup provides a robust barrier against malicious attackers.
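On the client side, routing through the bastion can be captured in an OpenSSH config that uses the `ProxyJump` option (OpenSSH 7.3 and later), while the servers disable password logins with `PasswordAuthentication no` in `sshd_config`. A minimal sketch that renders such a client config; the hostname, user, key path, and private address pattern are hypothetical.

```python
def render_ssh_config(bastion_host: str, user: str, identity_file: str,
                      private_hosts: str) -> str:
    """Render an OpenSSH client config that reaches private servers only via the bastion."""
    lines = [
        "Host bastion",
        f"    HostName {bastion_host}",
        f"    User {user}",
        f"    IdentityFile {identity_file}",
        "",
        f"Host {private_hosts}",         # e.g. the VPC's private address range
        f"    User {user}",
        f"    IdentityFile {identity_file}",
        "    ProxyJump bastion",         # every hop goes through the bastion host
    ]
    return "\n".join(lines) + "\n"


print(render_ssh_config("bastion.example.com", "ubuntu", "~/.ssh/levels_ed25519", "10.0.*"))
```

With this config in place, `ssh 10.0.1.10` transparently connects through the bastion, so the private servers never need a public SSH port.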

Database Access

Similar principles apply to database servers. While direct database connection and data manipulation should be infrequent and discouraged, such access needs the same level of protection as server SSH access.

The same bastion host infrastructure can be reused with SSH tunneling. A double SSH tunnel is established: one to the bastion host, and a second through it to a server that has database access (the bastion host itself has no direct database access). The user’s machine then connects to the database server through the second tunnel.
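With OpenSSH, one way to express the double tunnel is `-J` (jump through the bastion) combined with `-L` (forward a local port to the database via the second server). A minimal sketch that builds the command; the hostnames, ports, and cluster endpoint are hypothetical.

```python
import shlex


def db_tunnel_command(bastion: str, app_server: str, db_endpoint: str,
                      db_port: int = 3306, local_port: int = 13306) -> list[str]:
    """Build an ssh command: hop through the bastion to a server that can reach
    the database, then forward a local port to the database through that server."""
    return [
        "ssh",
        "-N",                                            # forwarding only, no remote shell
        "-J", bastion,                                   # first tunnel: the bastion host
        "-L", f"{local_port}:{db_endpoint}:{db_port}",   # local port -> database
        app_server,                                      # second tunnel: server with DB access
    ]


cmd = db_tunnel_command(
    bastion="ubuntu@bastion.example.com",
    app_server="ubuntu@10.0.1.10",
    db_endpoint="levels.cluster-abc123.us-east-1.rds.amazonaws.com",
)
print(shlex.join(cmd))
```

While the command runs, a MySQL client on the user’s machine connects to `127.0.0.1:13306`.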

AWS Scalability Concepts Overview

Server scalability is relatively straightforward on platforms simpler than AWS, but the trade-off is relying on external services for some requirements. Data then traverses the public network, potentially compromising security. AWS, in contrast, keeps all data private, but achieving a scalable, resilient, and fault-tolerant infrastructure demands careful engineering.

The most effective approach to scalability within AWS is to use managed services with robust monitoring and automation features that have been extensively tested by many customers. Because these services include data backups and recovery mechanisms, the platform itself addresses many of these concerns.

While these managed services come at a higher cost, they allow for a smaller operations team, which can offset the expenses. The LEVELS team recognized the value of this approach.

Amazon Elastic Compute Cloud (EC2)

Compared with the overhead and complexity of containerization, or the still-evolving complexities of serverless computing, EC2 instances in a proven environment remain a practical choice.

EC2 instance provisioning should be completely automated. This is achieved through custom AMIs for different server types and Auto Scaling Groups. These groups manage dynamic server fleets by adjusting the number of running server instances based on real-time traffic demands.

Auto-scaling also allows setting upper and lower limits for the number of running EC2 instances. The lower limit promotes fault tolerance by minimizing downtime in case of instance failures. The upper limit controls costs, accepting some downtime risk in extreme, unforeseen circumstances. Auto-scaling dynamically manages the number of instances within these bounds.
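The effect of the bounds can be shown with a simplified capacity calculation: compute how many instances the current load needs, then clamp to the group's minimum and maximum. Real Auto Scaling Groups use scaling policies (for example, target tracking on a CloudWatch metric); the per-instance capacity here is an assumed figure for illustration.

```python
import math


def desired_capacity(requests_per_second: float, capacity_per_instance: float,
                     min_size: int, max_size: int) -> int:
    """Instances needed for the current load, clamped to the group's bounds."""
    if not 0 <= min_size <= max_size:
        raise ValueError("expected 0 <= min_size <= max_size")
    needed = math.ceil(requests_per_second / capacity_per_instance)
    # The lower bound keeps spare instances for fault tolerance;
    # the upper bound caps cost even under extreme load.
    return max(min_size, min(max_size, needed))
```

For example, with an assumed 100 requests per second per instance and bounds of 2 to 10, a quiet period still keeps 2 instances running, 450 requests per second yields 5, and a spike of 5,000 requests per second is capped at 10.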

Amazon Relational Database Service (RDS)

While the team was already utilizing Amazon RDS for MySQL, a robust backup, fault tolerance, and security strategy was still under development. Initially, the database instance was upgraded to a two-instance cluster in each VPC, configured as a master and a read-replica for automatic failover.

Subsequently, once a MySQL 5.7-compatible engine became available, the infrastructure was upgraded to the Amazon Aurora MySQL service. Although Aurora is fully managed, it doesn’t scale compute capacity automatically. It does scale storage automatically, eliminating capacity planning for disk space, but the architect still needs to choose the compute instance size.

Some downtime during compute scaling is unavoidable, but Aurora’s auto-healing and improved replication capabilities keep it to a minimum by making failover nearly seamless. Scaling is performed by creating a read-replica with the desired compute capacity and then failing over to that instance. The other read-replicas are then removed from the cluster, resized, and reintroduced. Although this process requires some manual intervention, it’s manageable.
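The scale-by-failover procedure can be traced with a toy in-memory model of a cluster. In practice each step is an RDS API call (for example, boto3's `create_db_instance`, `failover_db_cluster`, and `modify_db_instance`); the model below only shows the order of operations, and the instance names and classes are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    name: str
    instance_class: str


@dataclass
class Cluster:
    writer: Instance
    readers: list[Instance] = field(default_factory=list)

    def failover_to(self, name: str) -> None:
        """Promote the named reader to writer; the old writer becomes a reader."""
        target = next(r for r in self.readers if r.name == name)
        self.readers.remove(target)
        self.readers.append(self.writer)
        self.writer = target


def scale_cluster(cluster: Cluster, new_class: str, new_name: str) -> None:
    # 1. Add a read-replica with the desired compute capacity.
    cluster.readers.append(Instance(new_name, new_class))
    # 2. Fail over to it; this is the step with a brief interruption for writes.
    cluster.failover_to(new_name)
    # 3. Remove each remaining reader, resize it, and reintroduce it.
    for reader in list(cluster.readers):
        cluster.readers.remove(reader)
        cluster.readers.append(Instance(reader.name, new_class))
```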

It’s worth noting that the new Aurora Serverless option offers some automatic scaling of computing resources, which could be explored in the future.

Managed AWS Services

Beyond these two components, the remaining system benefits from the auto-scaling capabilities of fully managed AWS services. These include Elastic Load Balancing (ELB), Amazon Simple Queue Service (SQS), Amazon S3 object storage, AWS Lambda functions, and Amazon Simple Notification Service (SNS), which require no specific scaling efforts from the architect.

Mutable vs. Immutable Infrastructure

There are two primary approaches to managing server infrastructure: mutable infrastructure, where servers are installed and continuously updated and modified; and immutable infrastructure, where servers remain unchanged after provisioning. In this latter approach, configuration changes or server updates involve provisioning new servers from a standard image or installation script, replacing the old ones.

LEVELS opted for an immutable infrastructure, eliminating in-place upgrades, configuration adjustments, or management tasks. The sole exception is application deployments, which occur in-place.
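The difference between the two approaches can be shown with servers modeled as frozen (unmodifiable) records: an update never edits a running server, it launches fresh servers from the new image and discards the old ones. A minimal sketch; the image and instance IDs are hypothetical.

```python
import itertools
from dataclasses import dataclass


@dataclass(frozen=True)
class Server:
    server_id: str
    image_id: str  # the machine image (AMI) the server was launched from


_ids = itertools.count(1)


def launch(image_id: str) -> Server:
    """Provision a new server from a standard image."""
    return Server(f"i-{next(_ids):04d}", image_id)


def roll_out(fleet: list[Server], new_image_id: str) -> list[Server]:
    """Immutable update: replace every server with a fresh one from the new image.
    Nothing is changed in place; the old Server records are simply retired."""
    return [launch(new_image_id) for _ in fleet]
```

Because `Server` is frozen, an attempt to patch a running server (for example, assigning a new `image_id`) raises an error, which mirrors the rule that servers remain unchanged after provisioning.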

More on mutable vs. immutable infrastructure can be found in HashiCorp’s blog.

Architecture Overview

LEVELS is essentially a mobile and web application with a REST API backend and some background services - a common architecture in today’s landscape. Considering this, the following infrastructure was proposed and implemented:

Virtual Network Isolation - Amazon VPC

The diagram illustrates the structure of a single environment within its VPC. The other environments use the same network setup; the only difference is that the non-production environments share one Application Load Balancer (ALB) and one Amazon Aurora cluster within their common VPC.

For fault tolerance, the VPC spans four availability zones within one AWS region. Subnets, security groups, and network access control lists fulfill the security and access control requirements.
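A common way to spread a VPC over several availability zones is to carve its address range into one non-overlapping subnet per zone and tier. A minimal sketch using Python's `ipaddress` module; the VPC range, zone names, /20 subnet size, and the public/private tier split are all assumptions for illustration.

```python
import ipaddress

# Hypothetical values; the article does not state the actual ranges or region.
VPC_CIDR = ipaddress.ip_network("10.0.0.0/16")
AVAILABILITY_ZONES = ["us-east-1a", "us-east-1b", "us-east-1c", "us-east-1d"]
TIERS = ["public", "private"]  # e.g. load balancer and bastion vs. servers and database


def plan_subnets(vpc, zones, tiers, new_prefix=20):
    """Assign one non-overlapping subnet per (tier, zone) pair from the VPC range."""
    blocks = vpc.subnets(new_prefix=new_prefix)
    return {(tier, zone): next(blocks) for tier in tiers for zone in zones}


SUBNETS = plan_subnets(VPC_CIDR, AVAILABILITY_ZONES, TIERS)
for (tier, zone), net in SUBNETS.items():
    print(f"{tier:8} {zone:11} {net}")
```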

Extending the infrastructure across multiple AWS Regions for additional fault tolerance was considered overly complex and unnecessary at this early stage of the business and product development but remains an option for the future.

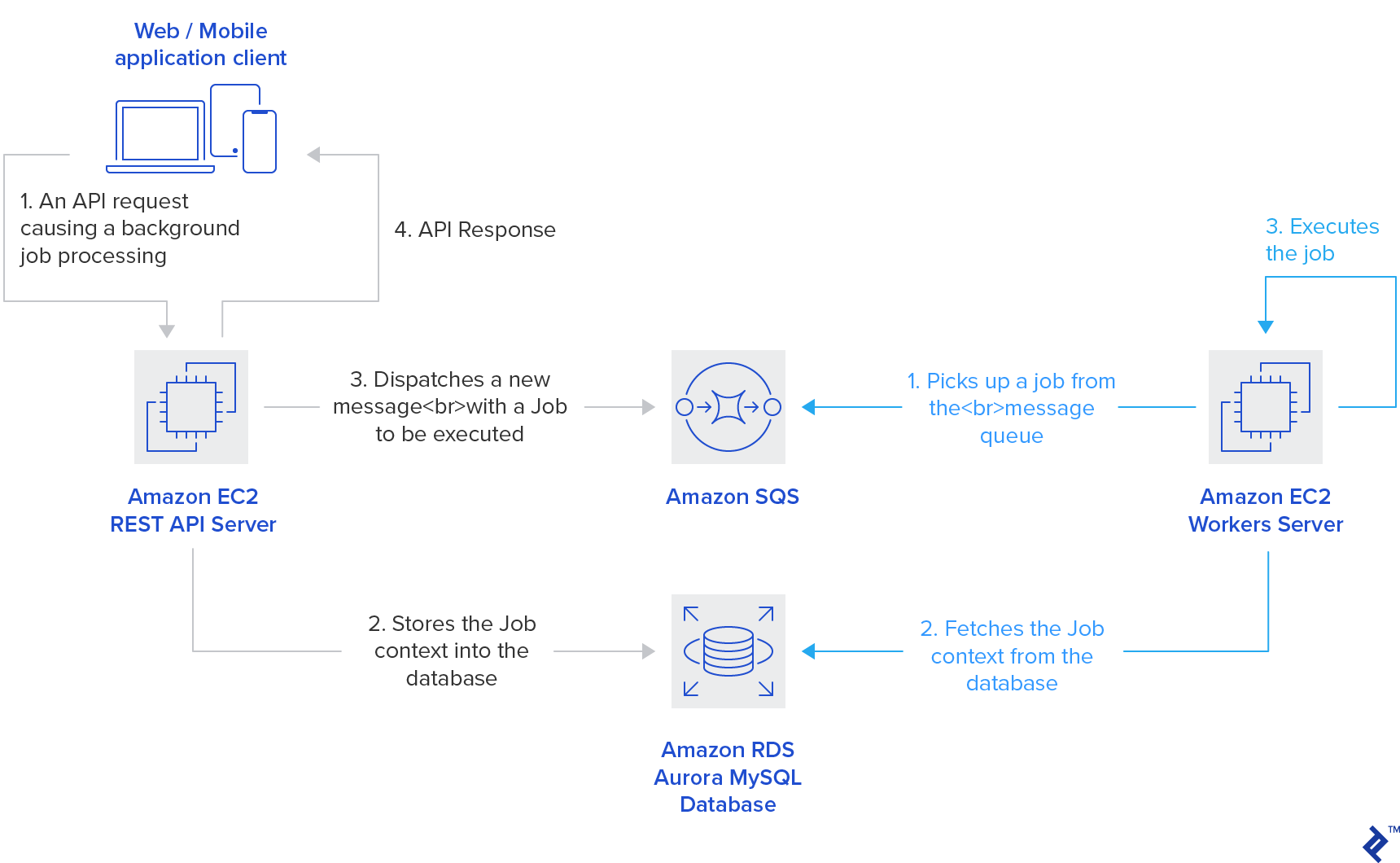

Computing

LEVELS runs a traditional REST API with background workers handling asynchronous application logic. Both operate as dynamic fleets of standard EC2 instances, fully automated using Auto Scaling Groups. The managed Amazon SQS service handles background task (job) distribution, eliminating the need to manage and scale a dedicated MQ server.
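A key property of SQS-based job distribution is that a worker deletes a message only after finishing the job; if the worker dies, the message becomes visible again once its visibility timeout expires and another worker picks it up. The in-memory class below is a hypothetical stand-in that mimics those semantics (it is not the SQS API), with time passed in explicitly to keep it deterministic.

```python
import itertools


class InMemoryQueue:
    """Toy stand-in for SQS semantics: a received message stays invisible for
    `visibility_timeout` seconds and reappears unless the consumer deletes it."""

    def __init__(self, visibility_timeout: float = 30.0):
        self.visibility_timeout = visibility_timeout
        self._messages: dict[int, tuple[str, float]] = {}  # id -> (body, visible_at)
        self._ids = itertools.count()

    def send(self, body: str, now: float = 0.0) -> int:
        message_id = next(self._ids)
        self._messages[message_id] = (body, now)
        return message_id

    def receive(self, now: float):
        """Return (message_id, body) for the first visible message, or None."""
        for message_id, (body, visible_at) in self._messages.items():
            if visible_at <= now:
                self._messages[message_id] = (body, now + self.visibility_timeout)
                return message_id, body
        return None

    def delete(self, message_id: int) -> None:
        self._messages.pop(message_id, None)


def work(queue: InMemoryQueue, now: float, handler) -> bool:
    """Process one job, deleting it only after the handler succeeds."""
    received = queue.receive(now)
    if received is None:
        return False
    message_id, body = received
    handler(body)             # if this raises, the message is not deleted...
    queue.delete(message_id)  # ...and reappears after the visibility timeout
    return True
```

Because standard SQS queues deliver messages at least once, job handlers should be idempotent.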

Some utility tasks, such as image processing, are not tightly coupled to the main application logic. These tasks are well-suited for AWS Lambda, which provides exceptional horizontal scalability and unmatched parallel processing compared to traditional server-workers.

Load Balancing, Auto-scaling, and Fault Tolerance

While load balancing can be achieved manually with Nginx or HAProxy, opting for Amazon Elastic Load Balancing (ELB) provides the advantage of an inherently scalable, highly available, and fault-tolerant load balancing infrastructure.

The Application Load Balancer (ALB) flavor of ELB is used for its HTTP-layer routing capabilities. New instances launched by Auto Scaling are automatically registered with the ALB. The ALB continuously health-checks the instances and stops routing traffic to unhealthy ones; with the Auto Scaling Group configured to use these load balancer health checks, unhealthy instances are terminated and replaced. This interplay makes the EC2 fleet self-healing.
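The self-healing loop can be simulated in a few lines: an instance that fails a configured number of consecutive health checks is replaced by a freshly launched one, keeping the fleet size constant. This is a simplified model, not the AWS implementation; the threshold of two failures is an assumed value.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Target:
    instance_id: str
    consecutive_failures: int = 0


_next_id = itertools.count(1)


def new_instance() -> Target:
    return Target(f"i-{next(_next_id):04d}")


def health_check_round(fleet: list[Target], results: dict[str, bool],
                       unhealthy_threshold: int = 2) -> list[Target]:
    """Apply one round of health-check results ({instance_id: passed}) and return
    the fleet with instances over the failure threshold replaced."""
    healed = []
    for target in fleet:
        if results.get(target.instance_id, True):
            target.consecutive_failures = 0
        else:
            target.consecutive_failures += 1
        if target.consecutive_failures >= unhealthy_threshold:
            healed.append(new_instance())  # terminate and launch a replacement
        else:
            healed.append(target)
    return healed
```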

Application Data Store

The application data store is an Amazon Aurora MySQL cluster comprising a master instance and multiple read-replica instances. If the master instance fails, one of the read-replicas is automatically promoted to the new master, ensuring the required fault tolerance.

As their name suggests, read-replicas can also distribute the database cluster load for data read operations.
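Aurora exposes a cluster (writer) endpoint and a reader endpoint that balances connections across the read-replicas, so the application can route queries accordingly. A minimal sketch of such routing; the endpoint hostnames are hypothetical, and the prefix-based classification is deliberately simple. Reads inside a transaction stay on the writer, since replicas can lag slightly behind.

```python
# Hypothetical endpoints following Aurora's naming pattern.
WRITER_ENDPOINT = "levels.cluster-abc123.us-east-1.rds.amazonaws.com"
READER_ENDPOINT = "levels.cluster-ro-abc123.us-east-1.rds.amazonaws.com"

READ_ONLY_PREFIXES = ("select", "show", "explain")


def endpoint_for(sql: str, in_transaction: bool = False) -> str:
    """Route plain reads to the reader endpoint; everything else goes to the writer."""
    statement = sql.lstrip().lower()
    if not in_transaction and statement.startswith(READ_ONLY_PREFIXES):
        return READER_ENDPOINT
    return WRITER_ENDPOINT
```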

Aurora automatically scales database storage in 10GB increments, and AWS fully manages backups, offering point-in-time restoration by default. These features significantly reduce database administration overhead, except for scaling up database computing power when needed - a worthwhile trade-off for worry-free operation.
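The 10GB growth step is simple rounding-up arithmetic. A minimal model of it, assuming at least one increment is always allocated:

```python
import math

INCREMENT_GB = 10  # growth step stated above


def allocated_storage_gb(used_gb: float) -> int:
    """Smallest multiple of the increment that holds `used_gb` (at least one increment)."""
    return max(1, math.ceil(used_gb / INCREMENT_GB)) * INCREMENT_GB
```

Under this model, 23GB of data occupies 30GB of allocated storage, and crossing 40GB by even half a gigabyte grows the volume to 50GB without any action from the team.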

Storing and Serving Assets

LEVELS needs to store user-uploaded content, and Amazon S3 object storage is the natural choice for this task. Application assets (UI elements like images, icons, and fonts) are also stored in S3 so the client app can access them. By default, S3 provides high data durability by replicating stored data internally across multiple devices and facilities.

User-uploaded images vary widely in size and resolution and are often unnecessarily large to serve directly, which hurts performance on mobile networks. Generating several variants at different sizes optimizes content delivery for each use case. AWS Lambda handles this task, and also creates backup copies of the original images in a separate bucket as a precaution.
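A Lambda function triggered by S3 upload notifications receives the bucket name and object key of each new file in the event's `Records`. The sketch below covers only the planning logic: extracting the uploaded objects, computing aspect-preserving sizes, and listing the outputs (a backup copy plus resized variants). The actual image decoding and S3 reads and writes are omitted, and the widths and bucket names are hypothetical.

```python
from urllib.parse import unquote_plus

# Hypothetical settings; the article does not specify sizes or bucket names.
VARIANT_WIDTHS = (320, 640, 1280)
VARIANTS_BUCKET = "levels-production-user-uploads-variants"
BACKUP_BUCKET = "levels-production-user-uploads-backup"


def uploaded_objects(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification.
    Object keys arrive URL-encoded, so they are decoded here."""
    return [
        (record["s3"]["bucket"]["name"], unquote_plus(record["s3"]["object"]["key"]))
        for record in event.get("Records", [])
    ]


def scaled_size(width: int, height: int, target_width: int) -> tuple[int, int]:
    """Fit to target_width while preserving the aspect ratio; never upscale."""
    if width <= target_width:
        return width, height
    return target_width, max(1, round(height * target_width / width))


def plan_outputs(key: str, width: int, height: int) -> list[tuple[str, str, tuple[int, int]]]:
    """List the copies to write for one upload: an untouched backup plus resized variants."""
    outputs = [(BACKUP_BUCKET, key, (width, height))]
    for target in VARIANT_WIDTHS:
        outputs.append((VARIANTS_BUCKET, f"{target}w/{key}", scaled_size(width, height, target)))
    return outputs
```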

Finally, a browser-based web application also comprises static assets. The Continuous Integration build process compiles the JavaScript code and stores each build in S3.

With these assets securely stored in S3, they can be served directly via S3’s public HTTP interface or through the Amazon CloudFront CDN. CloudFront distributes assets geographically to reduce latency for end-users and enables HTTPS support for serving static assets.

Infrastructure Provisioning and Management

Provisioning the AWS infrastructure involves configuring networking, managed AWS resources and services, and bare-bones EC2 computing resources. While managed AWS services require minimal intervention beyond provisioning and security configuration, EC2 computing resources demand manual configuration and automation.

Tooling

The web-based AWS Console, while functional, doesn’t lend itself well to managing the “lego-brick-like” AWS infrastructure. Manual processes are prone to errors, making a dedicated automation tool highly desirable.

AWS CloudFormation, developed and maintained by AWS, is one such tool. Another option is HashiCorp’s Terraform, which offers greater flexibility through its multi-platform provider support. More relevant here, Terraform is built around the immutable infrastructure approach, which matches LEVELS’ infrastructure management philosophy. Terraform, together with Packer for building base AMI images, proved to be an excellent fit.
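One way to connect the two tools is to hand the AMI ID produced by Packer to Terraform. The sketch below assumes Packer's `manifest` post-processor is enabled (it records each build's `artifact_id`, such as `us-east-1:ami-...`, along with the run's UUID) and writes a `*.auto.tfvars.json` file, which Terraform loads automatically. The variable name `api_ami_id` is hypothetical.

```python
import json


def latest_ami_from_manifest(manifest: dict, region: str) -> str:
    """Return the AMI ID built for `region` in the most recent Packer run."""
    last_run = manifest["last_run_uuid"]
    for build in reversed(manifest["builds"]):
        if build.get("packer_run_uuid") != last_run:
            continue
        # artifact_id looks like "us-east-1:ami-0123...", comma-separated for multiple regions
        for artifact in build["artifact_id"].split(","):
            artifact_region, ami_id = artifact.split(":", 1)
            if artifact_region == region:
                return ami_id
    raise LookupError(f"no AMI for {region} in the last Packer run")


def write_tfvars(ami_id: str, path: str = "ami.auto.tfvars.json") -> None:
    """Write a variables file that Terraform picks up automatically."""
    with open(path, "w") as f:
        json.dump({"api_ami_id": ami_id}, f, indent=2)
```

A new `terraform apply` then replaces instances launched from the old image instead of modifying them, in line with the immutable approach.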

Infrastructure Documentation

An added advantage of automation tools is the reduced reliance on extensive infrastructure documentation, which can quickly become outdated. The “Infrastructure as Code” (IaC) paradigm of provisioning tools acts as live documentation, constantly reflecting the current infrastructure state. Maintaining a high-level overview document, less prone to changes and easier to update, is sufficient.

Final Thoughts

The AWS Cloud infrastructure described here provides a scalable solution that can accommodate future product growth mostly automatically. After nearly two years in operation, it has proven cost-effective, leveraging cloud automation without requiring a dedicated 24/7 system operations team.

Regarding security, the AWS Cloud keeps all data and resources within a private network in the same cloud environment. Confidential data transmission over the public internet is never required. External access is granted with fine-grained permissions to trusted support systems (such as CI/CD, external monitoring, or alerting) and limited to resources necessary for their specific function within the system.
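Fine-grained access for an external system such as CI/CD can be expressed as an IAM policy that allows exactly one action on exactly one resource path. A minimal sketch that builds such a policy document; the bucket name and `builds/` prefix are hypothetical.

```python
import json


def ci_deploy_policy(bucket: str, prefix: str = "builds/") -> dict:
    """Least-privilege policy for a CI/CD system that only uploads build artifacts."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "UploadBuildArtifacts",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],                        # upload only; no read or delete
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],  # only under the build prefix
            }
        ],
    }


print(json.dumps(ci_deploy_policy("levels-production-web-assets"), indent=2))
```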

When properly designed and implemented, such a system is flexible, resilient, secure, and well-equipped to handle future demands for scaling due to product growth or the implementation of advanced security measures like PCI-DSS compliance.

While not necessarily cheaper than productized platforms like Heroku, which work well for applications that fit within their predefined usage patterns, AWS offers greater control over your infrastructure, increased flexibility with its extensive range of services, and the ability to fine-tune the entire infrastructure through customized configurations.