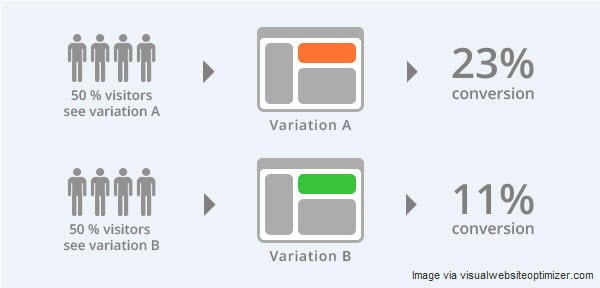

It’s common knowledge* that effective A/B testing can significantly improve landing page performance and boost conversions. When it comes to creating an engaging and rewarding digital experience, well-executed A/B tests are a much more potent remedy for underperforming landing pages than any quick fix. However, the methodologies for designing A/B tests that generate accurate and representative results can be ambiguous at best, and highly debated at worst.

A/B testing proves invaluable for optimizing landing pages when done right. To avoid squandering time, resources, and effort on modifications that yield negligible benefits – or even worsen things – consider the following factors during your next project.

*Let’s be real, not everyone is in the know about this.

Validation is Key

Before you casually task your design and content teams with creating numerous buttons or calls to action by day’s end, it’s essential to have a clear hypothesis you want to test. After all, without a projected outcome, A/B testing devolves into mere guesswork. Similarly, lacking a hypothesis makes it challenging to discern the actual impact of design changes, potentially leading to redundant testing or missed opportunities that a focused objective could have revealed.

Just as scientists embark on experiments with a hypothesis, you should enter the A/B testing phase with a well-defined expectation of what you hope to observe, or at the very least a notion of the likely outcome.

Formulating a hypothesis doesn’t need to be overly complex. For instance, you could A/B test whether minor adjustments to the wording of a call to action result in higher conversion rates, or if a slightly modified color scheme reduces your bounce rate or improves user dwell time.

Whichever aspect of your site you choose to test, ensure that everyone involved in the project understands the core hypothesis well in advance of any changes to code, copy, or assets.

Key Takeaway: Before initiating your A/B test, be crystal clear about what you’re testing and why. Are you evaluating the impact of subtle copy changes in a call to action? The length of a form? Keyword positioning? Ensure you have a projected effect of the variations before starting A/B split testing.
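
One lightweight way to make sure everyone shares the same hypothesis is to write it down in a fixed shape before any work starts. The sketch below is only an illustration: the `Hypothesis` class, its field names, and the example values are all hypothetical, not part of any testing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """A written-down expectation for a single A/B test (illustrative only)."""
    element: str             # the single thing being changed
    change: str              # what differs between control and variant
    metric: str              # the number you expect to move
    expected_direction: str  # "increase" or "decrease"
    rationale: str           # why you expect that effect

    def summary(self) -> str:
        # One sentence that can be shared with design, content, and dev teams.
        return (
            f"Changing {self.element} ({self.change}) should "
            f"{self.expected_direction} {self.metric} because {self.rationale}."
        )


# Hypothetical example values, invented for illustration.
cta_test = Hypothesis(
    element="the call to action",
    change="'Submit' becomes 'Get my free quote'",
    metric="conversion rate",
    expected_direction="increase",
    rationale="the new wording states what the visitor receives",
)
print(cta_test.summary())
```

If the sentence produced by `summary()` is hard to fill in, that is usually a sign the test is not yet well defined.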

Embrace a Granular A/B Testing Approach

A frequent pitfall in A/B testing is comparing landing page layouts that are drastically different. While it might be tempting to test the efficacy of two entirely distinct pages, this approach might not yield actionable insights. The more pronounced the disparities between two page versions, the harder it becomes to pinpoint the specific factors that contributed to an improvement – or decline – in conversions.

Don’t fall into the trap of believing that all variations within an A/B test need to be dramatic, attention-grabbing overhauls. Even subtle tweaks can have a noticeable effect, such as slightly restructuring a list of product features to encourage users to seek more information, or rephrasing a call to action to boost user engagement.

Even seemingly insignificant details like minor punctuation differences can measurably impact user behavior. Marketing expert and author of “The Ultimate Guide to Google AdWords,” Perry Marshall, recounted an A/B test where the CTR of two ads was being assessed. The sole difference? A single comma. Despite this seemingly trivial variation, the variant featuring the comma boasted a CTR of 4.40% – a 0.28 percentage point improvement over the control.
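
To put the comma example in perspective, it helps to separate the absolute improvement (in percentage points) from the relative one (in percent). The short sketch below works only from the two figures quoted above; the control CTR of 4.12% is derived from them (4.40 minus 0.28), and the `lift` helper is a hypothetical name used for illustration.

```python
def lift(control_rate: float, variant_rate: float) -> tuple[float, float]:
    """Return (absolute lift in percentage points, relative lift in percent).

    Both rates are given in percent, e.g. 4.40 for 4.40%.
    """
    absolute = variant_rate - control_rate
    relative = absolute / control_rate * 100
    return absolute, relative


# The variant's CTR was 4.40%, 0.28 percentage points above the control,
# so the control's CTR works out to 4.12%.
variant_ctr = 4.40
control_ctr = variant_ctr - 0.28
absolute, relative = lift(control_ctr, variant_ctr)

print(f"control CTR:   {control_ctr:.2f}%")
print(f"absolute lift: {absolute:.2f} percentage points")
print(f"relative lift: {relative:.1f}%")
```

A 0.28 point gain sounds small, but relative to a 4.12% baseline it is an improvement of roughly 6.8%, which is why such minor changes can be worth testing.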

However, this doesn’t imply that comparing user behavior on vastly different page versions is entirely without merit. In fact, doing so earlier in the testing phase can inform design choices down the line. Best practices in A/B testing suggest that the more significant the difference between two versions of a page, the earlier in the testing process these variations should be assessed.

Key Takeaway: Focus on testing one element at a time so you can determine which change was responsible for the increase in conversions. Once a winner is identified, proceed to test another single change. Continue this iterative process until further changes stop producing measurable gains.

Test Early and Often

Scientists rarely rely on the findings of a single experiment to confirm or refute their hypotheses, and neither should you. To follow A/B testing best practices, you should evaluate the impact of one variable per test, but that doesn’t mean you’re limited to conducting just one overall test. That would be counterproductive.

A/B testing should be an iterative process. While the initial test’s results might not offer profound insights into user behavior, they can guide you in designing additional tests to gain a deeper understanding of what design choices have a measurable impact on conversions.

The earlier you commence A/B testing, the sooner you can discard ineffective design choices or business decisions grounded in assumptions. The more frequently you test certain aspects of your site, the more dependable your data becomes, allowing you to prioritize what truly matters – the user.

Key Takeaway: Delaying A/B testing until the eleventh hour is not advisable. The sooner you acquire actual data, the sooner you can begin integrating changes based on real user behavior, not on your assumptions. Frequent testing ensures that modifications to your landing pages are truly optimizing conversions. When creating a landing page from the ground up, keep the insights from early tests at the forefront.

Exercise Patience with A/B Tests

A/B testing is a crucial tool in a marketing professional’s toolkit, but don’t expect significant results to magically appear overnight. When designing and executing A/B tests, patience is key – prematurely concluding a test might seem like time saved, but it could end up being costly.

Economists and data scientists rely on the concept of statistical significance to identify and interpret patterns within data. Statistical significance is at the core of A/B testing best practices, as without it, you risk making business decisions based on flawed data.

Statistical significance indicates how unlikely it is that an observed difference between variants arose from random chance alone, as opposed to the change you made to a specific variable. To arrive at statistically significant results, marketers require a sufficiently large dataset. Larger samples not only provide more precise estimates but also make it easier to distinguish a genuine effect from ordinary variation around the average, the kind of fluctuation that would occur even if nothing had changed. Unfortunately, gathering this data takes time, even for websites boasting millions of unique monthly visitors.
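
As a rough illustration of why sample size matters, the sketch below applies a two-proportion z-test, one common way to check significance for conversion rates (the article does not prescribe a particular test, so treat this choice as an assumption). The visitor and conversion counts are hypothetical; they were picked so that both scenarios show the same underlying rates at different traffic volumes.

```python
import math


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical counts: similar rates (about 4.1% vs 4.4%), very different traffic.
p_small = two_proportion_p_value(41, 1_000, 44, 1_000)
p_large = two_proportion_p_value(4_120, 100_000, 4_400, 100_000)

print(f"1,000 visitors per variant:   p = {p_small:.3f}")
print(f"100,000 visitors per variant: p = {p_large:.4f}")
```

With these made-up numbers, the small sample produces a p-value far above the conventional 0.05 threshold, while the large sample falls below it, even though the observed rates are nearly identical in both cases.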

If you’re inclined to cut a test short, take a step back. Breathe. Have a coffee. Perhaps some yoga. Remember – patience is a virtue.

Key Takeaway: Resist the urge to end a test early, even if you observe promising initial results. Allow the test to run its course and give your users the opportunity to demonstrate how they interact with your landing pages. This holds true even when A/B testing involves large user bases or high-traffic pages.
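
To get a feel for how long "running its course" might take, you can estimate the required sample size before launching. The sketch below uses a standard normal-approximation formula for comparing two proportions, with a 5% significance level and 80% power as assumed defaults. The rates reuse the figures implied by the comma example (4.12% and 4.40%), and the daily traffic number is purely hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed per variant to detect baseline -> expected."""
    if baseline == expected:
        raise ValueError("baseline and expected rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = baseline * (1 - baseline) + expected * (1 - expected)
    n = (z_alpha + z_beta) ** 2 * variance / (expected - baseline) ** 2
    return math.ceil(n)


n = sample_size_per_variant(0.0412, 0.0440)

daily_visitors = 5_000  # hypothetical total traffic, split evenly between variants
days = math.ceil(2 * n / daily_visitors)

print(f"visitors needed per variant: {n:,}")
print(f"estimated duration at {daily_visitors:,} visitors/day: {days} days")
```

Under these assumptions, detecting such a small difference takes tens of thousands of visitors per variant, which is why stopping after a few promising days is risky.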

A/B Testing Requires an Open Mind

Remember the importance of formulating a hypothesis before launching into testing? Well, just because you have a predicted outcome for an A/B test doesn’t guarantee it will come to fruition – or even that your initial idea was accurate. And that’s perfectly fine, no judgment here.

Many experienced marketers have succumbed to the belief that, regardless of what their results indicate, their initial hypothesis was the only possible outcome. This deceptive thought often arises when user data paints a vastly different picture than what project stakeholders anticipated. When confronted with data that significantly deviates from the original hypothesis, it can be tempting to dismiss the results or the testing methodology in favor of conventional wisdom or past experience. However, this mindset can be detrimental to a project. After all, if you’re so certain about your assumptions, why even bother with A/B testing?

Seasoned marketing and PPC expert Chris Kostecki can certainly vouch for the importance of keeping an open mind during A/B testing. While evaluating two versions of a landing page, Chris discovered that the variant, which featured more strategically placed copy and sat further away from the product ordering page, significantly outperformed the control. Chris admitted that although he had been confident the more streamlined page would yield higher conversions, his A/B test results demonstrated otherwise.

Remaining receptive to new ideas based on concrete data and observed user behavior is crucial for project success. Moreover, the more extensive the testing phase and the more granular your approach, the more likely you are to uncover new insights about your customers and their interactions with your landing pages. This can lead to valuable discoveries about which changes will have the most substantial impact on conversions. Allow your results to speak for themselves, and pay close attention to what they reveal.

Key Takeaway: Users can be unpredictable, and attempting to foresee their behavior is inherently risky. Unless you secretly possess a deck of tarot cards at home, you’re not psychic. Base your business decisions on solid A/B test data – no matter how unexpected it might be. If certain test results leave you unconvinced, repeat the test and compare the data.

Maintain the Momentum

So, you’ve meticulously formulated your hypothesis, designed a series of robust tests, patiently awaited the influx of precious data, and diligently analyzed your results to arrive at a statistically significant, concrete conclusion – you’re done, right? Not quite.

Successful A/B tests can not only contribute to increased conversions or improved user engagement but can also lay the foundation for future tests. The “perfect” landing page doesn’t exist, and there’s always room for improvement. Even if everyone is content with the results of an A/B test and the ensuing modifications, it’s highly likely that other landing pages can yield similarly actionable results. Depending on your website’s nature, you can either base future tests on the outcomes of the first project or apply A/B testing best practices to an entirely new set of business goals.

Key Takeaway: Even landing pages that have undergone extensive optimization can be further enhanced. Avoid complacency, even after an exhaustive series of tests. If everyone is satisfied with the test results for a particular page, shift your focus to another page for testing. Leverage your experiences from your initial tests to formulate more precise hypotheses, design more effective tests, and home in on areas of your other landing pages that have the potential to generate higher conversions.

Embrace the Uniqueness of Each Test

No two scientific experiments are identical, and this principle undoubtedly applies to A/B testing. Even when evaluating the impact of a single variable, countless external factors can shape the process, influence your results, and potentially lead to moments of utter despair.

Consider Brad Geddes, Founder of the PPC training platform Certified Knowledge. Brad recounted working with a client who had some rather unimpressive landing pages. After much persuasion and perseverance, Brad convinced his client to implement some adjustments. The redesign was arguably just as bad as the original, but after A/B testing, the revamped landing page led to a remarkable 76% increase in overall sitewide profit – not bad for a seemingly terrible landing page.

Avoid a rigid approach to the testing phase. Be specific in designing your tests, maintain flexibility when interpreting the data, and remember that tests don’t have to be flawless to yield valuable insights. Keep these principles in mind, and you’ll be a seasoned A/B testing expert in no time – no lab coat required (though feel free to don one if you wish – we won’t judge!).

Key Takeaway: Every A/B test is unique, and it’s crucial to approach each landing page with this understanding. Strategies that proved successful in previous tests might not be as effective in others, even when you are adjusting similar elements on similar landing pages, so avoid assuming that past results will carry over directly. Always prioritize hard data and don’t lose sleep over imperfect tests.