As I age, I’m reminded of the days when top-tier CPU performance was synonymous with high-end x86 chips or, for the affluent, PowerPC systems. Reliance on x86 only seemed to grow stronger. A decade ago, Apple’s switch to x86 led many to believe that non-x86 processors were finished in the mass market. They were proven wrong a few years later, again with Apple playing a part. Now ARM servers are emerging, and they could reshape the server landscape.

Rethinking Processor Design

The shift towards smartphones and tablets revealed a critical shortcoming: x86 chips from Intel, AMD, and VIA weren’t suitable for these devices. Despite its ubiquity, the x86 instruction set had drawbacks in the mobile world. Even today, it’s not the go-to choice for mobile processors, though Intel’s foundry advancements are changing this. In this segment, x86 lacks the efficiency of other architectures, particularly ARM’s 32-bit ARMv7 and 64-bit ARMv8.

Over the last decade, and especially the past five years, ARM processors have dominated smartphones and tablets. Their strengths were numerous: excellent performance per watt and low design, production, and deployment costs. Major vendors could license ARM building blocks and customize their processors based on ARMv7 or ARMv8, adding components like high-speed modems and GPUs as needed.

Some designers, like Qualcomm and Apple, went further and created their own custom CPU cores. Both became dominant players in the mobile System-on-Chip (SoC) market, with their custom cores playing a crucial role. While custom ARM cores were reserved for high-end processors, other segments used standard ARM Cortex CPUs, like the 32-bit Cortex-A8, A9, A7, and A15, later followed by 64-bit designs like the Cortex-A53, A57, and the upcoming A72.

Another factor in ARM’s success was Microsoft’s misstep.

Windows’ x86 exclusivity meant that Microsoft’s success in mobile would have bolstered Intel. However, by the end of the last decade, Redmond had clearly faltered, surrendering the lucrative market to Google and Apple. Former Microsoft CEO Steve Ballmer even acknowledged their failure to recognize the smartphone and tablet revolution. But it’s no longer his concern; he’s moved on to other “balls,” specifically basketballs.

Interestingly, mobile isn’t the only arena where Microsoft stumbled massively. The server market presents another example. While seemingly different, smartphones and data centers share technological and business parallels.

Both require an emphasis on power efficiency, thermal management, performance per dollar, and so on. Importantly, neither truly needs x86 processors. Thanks to Microsoft’s stumbles, these markets aren’t controlled by Windows; they rely instead on UNIX-like systems such as Android, iOS, and various Linux distributions.

Microsoft attempted to harness ARM’s potential with a Windows version for ARM hardware, leading to another misstep: Windows RT. Ultimately, they abandoned it. The latest Surface tablets use x64 processors and standard Windows 10. While Microsoft’s Lumia smartphones (formerly Nokia Lumia) still utilize Qualcomm’s ARM processors, Windows Phone has faded from the mainstream.

Servers Don’t Have To Cost An ARM And A Leg

Billions of smartphones and tablets, mostly ARM-based, are in use today. However, ARM’s penetration into other segments is limited. Outside of smartphones and tablets, few high-volume general-purpose computing platforms use ARM; some Google Chromebooks are a notable exception. ARM chips are nonetheless everywhere in embedded and consumer devices: routers, set-top boxes, smart TVs, smartwatches, gaming devices, automotive infotainment systems, and more.

What about ARM servers?

This is where things become complicated. Talk of ARM servers has circulated since 2010, but progress has been sluggish. ARM’s server market share is negligible; Intel’s Xeon dominates, with AMD’s Opteron a distant second. As AMD has struggled with its CPU lineup, Intel’s server market dominance has only grown.

Why were ARM servers considered promising in the first place?

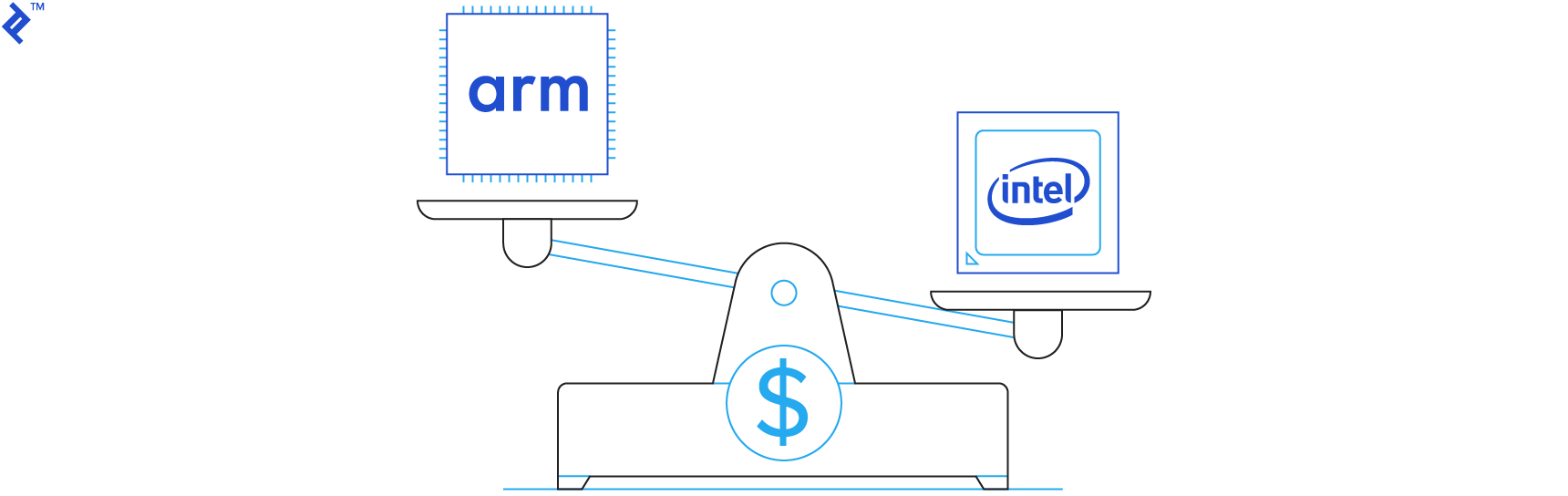

Cost-effectiveness. There are technical reasons ARM is viable in servers, but it boils down to money. The main arguments, in brief:

- Price/Performance ratio

- Evolving data center workloads

- Processor sourcing from multiple vendors

- Custom chip design for niches

- ARM’s suitability for certain infrastructure applications

- Challenging Intel’s near-monopoly in the server space

Not every task demands a costly, powerful Xeon processor, yet running undemanding tasks on older x86 processors is inefficient because of their power consumption. Unlike personal computers, servers run continuously, so even minor efficiency gains add up. It’s not just about electricity bills; data centers also require cooling and maintenance, which makes processors with lower Thermal Design Power (TDP) ratings more appealing to enterprises.
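
To see why small efficiency gains matter at data-center scale, here is a back-of-the-envelope sketch in Python. All inputs are hypothetical: it treats TDP as a rough stand-in for average power draw (real draw varies with load) and models cooling overhead with a power usage effectiveness (PUE) multiplier.

```python
# Back-of-the-envelope energy cost; every input below is hypothetical.
HOURS_PER_YEAR = 24 * 365  # servers run around the clock


def annual_energy_cost(watts: float, price_per_kwh: float, pue: float) -> float:
    """Yearly electricity cost of a constant load running 24/7.

    pue (power usage effectiveness) = total facility power / IT power;
    values above 1.0 add the energy spent on cooling and other overhead.
    """
    kwh_per_year = watts * HOURS_PER_YEAR / 1000
    return kwh_per_year * price_per_kwh * pue


def fleet_savings(watts_old: float, watts_new: float, servers: int,
                  price_per_kwh: float, pue: float) -> float:
    """Yearly savings from swapping each server's processor for a lower-power one.

    Treats TDP as average draw, which is only a rough approximation.
    """
    per_server = (annual_energy_cost(watts_old, price_per_kwh, pue)
                  - annual_energy_cost(watts_new, price_per_kwh, pue))
    return per_server * servers


# Hypothetical: 1,000 servers, 95 W vs 45 W chips, $0.10/kWh, PUE of 1.5.
print(round(fleet_savings(95, 45, 1000, 0.10, 1.5)))  # 65700
```

With these made-up figures, a 50 W difference per server is worth roughly $65,700 a year across a 1,000-server fleet, and the savings scale linearly with fleet size.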

Why Use ARM Servers?

What enterprise applications are ARM processors well-suited for?

ARM anticipates significant adoption in networking infrastructure, where the flexibility, small size, efficiency, and low cost of its processors are a natural fit. ARM processors can power routers, high-performance storage, and certain types of servers.

However, ARM projects that most enterprise growth in this decade will come from servers, as its presence in other mature segments is already strong. Server workloads are evolving due to the rise of cloud services, requiring servers to handle a growing number of smaller tasks.

Many organizations prefer to source hardware from multiple vendors, which favors ARM server processors, since several companies can offer them. Additionally, ARM’s licensing model and modular design enable the creation of custom processors for specific applications. While that is not feasible for smaller companies, imagine the possibilities if giants like Amazon, Facebook, or Google demanded bespoke server processors optimized for specific tasks.

As for challenging Intel’s near-monopoly, it’s not about wishing Intel ill or pushing it out. However, its dominance could hinder growth and innovation. More competition should lower costs for end users, which is a key benefit of ARM servers.

Multithreading: How Many CPU Cores Is Enough?

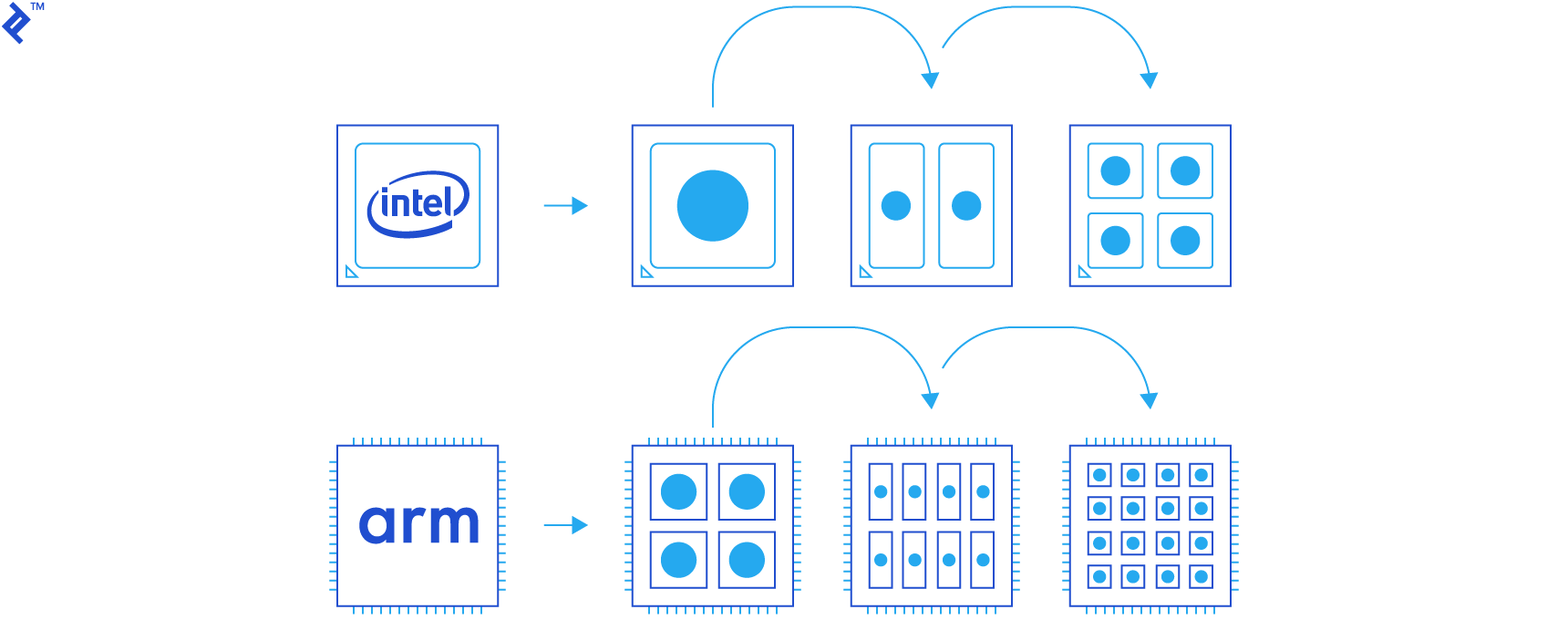

A decade ago, multicore x86 processors were limited to high-performance systems. Today, they’re found in budget tablets.

Early multicore processors required large cores for decent performance. Software often couldn’t utilize the extra cores effectively, making good single-thread performance crucial. Now, octa-core smartphones, quad-core Intel tablets and phones, and 16-core x86 server processors are commonplace.

This shift is logical, both technologically and financially. Distributing workload across smaller, efficient cores is more practical than developing a massive, high-frequency single core. The multicore approach improves efficiency and chip yields.

ARM could push core counts even further. ARM cores are generally smaller than Intel’s server and desktop cores (Intel’s “small core” Atoms are aimed primarily at mobile, though server variants exist). While 128-core or 256-core ARM processors aren’t imminent, they’re theoretically feasible. Whether they make sense depends on how the new ARMv8 server processors handle multithreaded workloads. Early indications are positive, suggesting that ARM servers could excel in workloads that benefit from many cores.
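
Amdahl’s law, a standard model not mentioned in the article, makes the multithreading point concrete: the serial part of a workload caps the speedup that extra cores can deliver. A minimal Python sketch, ignoring memory, I/O, and synchronization costs:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup over a single core when only `parallel_fraction` of the
    work can be spread across `cores` (Amdahl's law).

    Ignores memory bandwidth, I/O and synchronization overhead, so real
    speedups will be lower.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Compare a mostly serial job with highly parallel ones on 8, 32 and 128 cores.
for p in (0.50, 0.95, 0.999):
    print(p, [round(amdahl_speedup(p, n), 1) for n in (8, 32, 128)])
```

With 95% of the work parallelizable, 128 cores yield only about a 17x speedup, while at 99.9% the same core count approaches 114x. Many-core designs pay off mainly on workloads made of many independent tasks.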

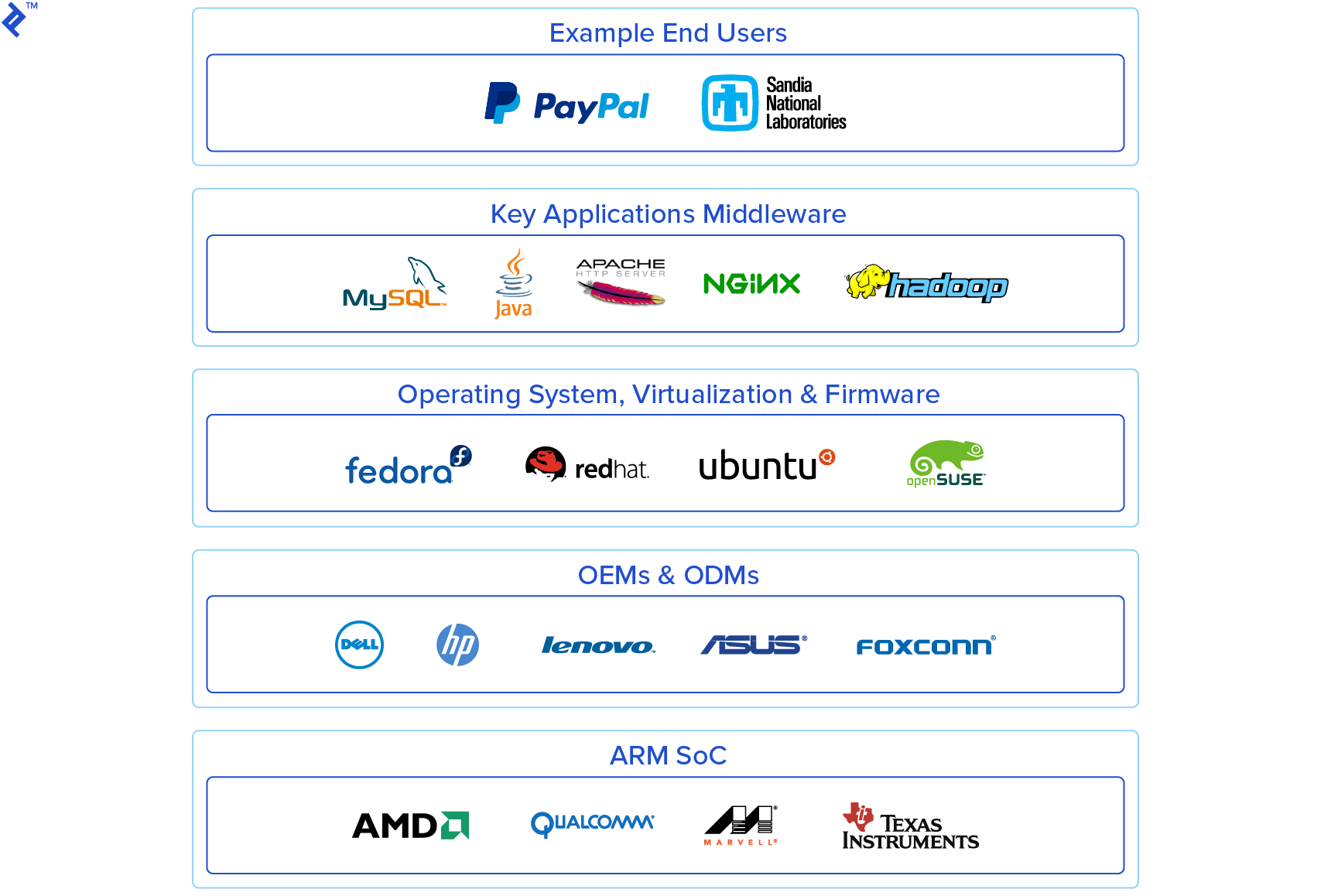

Qualcomm’s first server processor boasts 24 ARMv8 cores, with future models slated to have even more. AMD, amid its server market struggles, recently launched its long-awaited ARM-based Opteron A1100 processor. Qualcomm announced its chip in October, and both products are expected to become widely available soon.

While Intel is absent from this ARM endeavor, Qualcomm and AMD aren’t alone. Broadcom, Calxeda, Cavium Networks, and Huawei HiSilicon have developed ARM server products. Nvidia and Samsung, giants in SoC and GPU markets, also dabbled in ARM servers before halting development. Texas Instruments, Xilinx, and Marvell are exploring the space as well.

Some companies have crafted custom ARM cores. However, Nvidia’s Denver is the only non-Apple 64-bit custom ARM core currently available, with limited adoption.

What Are ARM Custom Cores?

Given the complexity of the CPU landscape, it’s worth understanding what distinguishes ARM’s own cores from custom cores. This explanation focuses on the business perspective rather than the architectural intricacies of the x86 and ARM instruction sets.

ARM’s distinctiveness isn’t solely due to its instruction set. The key difference between Intel, AMD, and ARM lies in their business models. Architectures evolve, new CPU designs emerge, but ARM’s marketing and licensing approach has remained consistent.

Consider this example:

An Intel processor, designed by Intel, utilizes their instruction sets and is manufactured in their foundries. It’s packaged and sold with “Intel Inside” branding. It sounds straightforward, but it represents billions invested in R&D and reliance on their own expensive fabrication facilities (a new chip fab costs about as much as a nuclear aircraft carrier).

ARM, on the other hand, takes the “fabless” model a step further: it designs processor cores but neither manufactures nor sells chips. Instead, ARM sells intellectual property. Clients can choose from various licensing agreements and create their own designs. Most opt for ARM’s pre-designed Cortex CPUs and Mali GPUs and pay ARM royalties on the chips they ship.

However, clients aren’t limited to pre-designed CPUs. They can license the architecture and develop custom cores based on the ARM instruction set. This is Apple’s strategy. They leverage the ARMv8 instruction set to build powerful 64-bit cores for their iOS devices. Nvidia’s Denver and Qualcomm’s custom cores (32-bit Krait and 64-bit Kryo series) follow a similar approach.

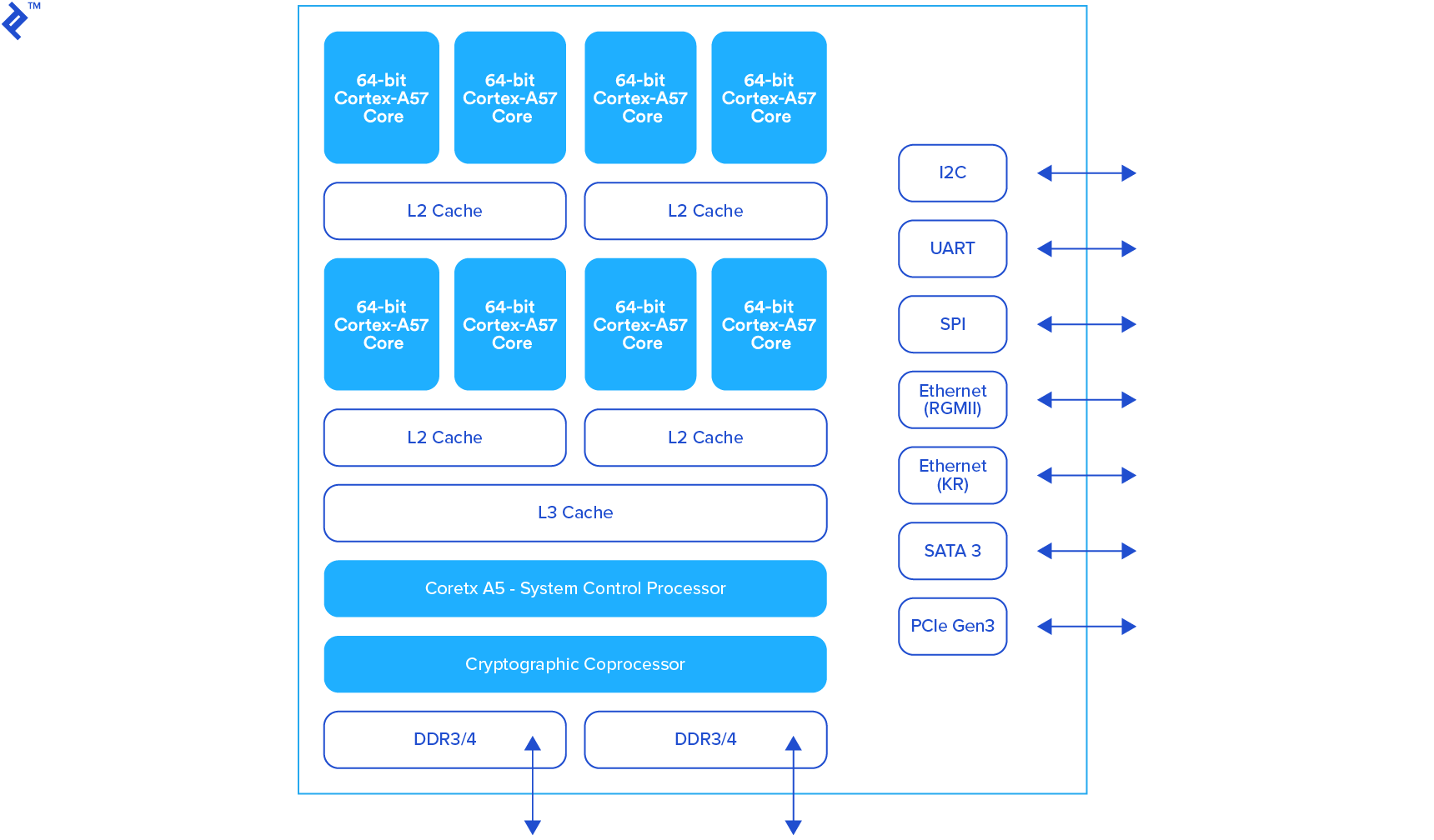

Designing custom CPU cores is complex and resource-intensive, typically undertaken by large companies with the necessary expertise and resources. Most companies opt for readily available ARM Cortex cores. The 64-bit Cortex-A57, for example, is used in many next-generation ARM servers.

It’s important to note that while most ARM-based chips are custom designed, their CPU cores usually aren’t: the majority use standard ARM Cortex CPUs. Manufacturers can pick from a range of ARM CPUs, ARM or third-party GPUs, and other components to tailor processors to their needs without designing a core of their own. This approach, a product of ARM’s licensing policies, makes the architecture cost-effective and flexible.

These upcoming ARM servers, based on the latest 64-bit ARM architecture, are a far cry from their experimental predecessors. For instance, while Scaleway offered ARMv7-based servers, they had limitations like shared I/O controllers and lacked 64-bit support. This new generation won’t face those issues, aligning closer to Intel’s hardware in terms of features and standards.

ARM Server Pros And Cons

Currently, ARM servers mostly serve niche applications and offer little to small developers, who can get by on any server. Some large companies find them appealing, but existing ARM servers aren’t a good fit for most individual developers.

However, the next wave of ARM servers promises broader appeal:

- Lower hardware costs and potentially better efficiency (performance per dollar, performance per Watt).

- Broader software compatibility, with ARM ports of popular packages.

- Support for cutting-edge technologies and industry standards.

- Superior performance in specific workloads (simple, multithreaded tasks).

- Potential for greater competition and product diversity compared to the x86 landscape.
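
Performance per dollar and performance per watt can point in different directions, so it helps to compute both for your own workload. The sketch below uses two hypothetical machines with made-up numbers; substitute measured throughput, real prices, and measured (not rated) power.

```python
from dataclasses import dataclass


@dataclass
class ServerOption:
    name: str
    throughput: float  # benchmark score or requests/s on YOUR workload
    price_usd: float   # acquisition cost
    watts: float       # measured average power draw under that workload

    def perf_per_dollar(self) -> float:
        return self.throughput / self.price_usd

    def perf_per_watt(self) -> float:
        return self.throughput / self.watts


def rank(options, metric):
    """Return option names sorted best-first by the given metric method."""
    return [o.name for o in sorted(options, key=metric, reverse=True)]


# Hypothetical machines and figures, for illustration only.
options = [
    ServerOption("x86-box", throughput=10_000, price_usd=4_000, watts=200),
    ServerOption("arm-box", throughput=7_000, price_usd=3_000, watts=120),
]
print(rank(options, ServerOption.perf_per_dollar))  # ['x86-box', 'arm-box']
print(rank(options, ServerOption.perf_per_watt))    # ['arm-box', 'x86-box']
```

Here the hypothetical x86 box wins on raw throughput and performance per dollar, while the ARM box wins on performance per watt; which matters more depends on whether purchase price or running costs dominate over the hardware’s lifetime.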

It’s crucial to remember that some of these advantages are theoretical, as the hardware is still under development. Still, while the future is uncertain, these new ARM servers have significant potential; otherwise, companies like ARM, Qualcomm, and AMD wouldn’t be investing time and resources into them.

What about the downsides? There are several, some significant, but the industry is actively addressing them:

- Inconsistent software support

- Availability and potential deployment challenges

- Return on investment concerns

- Small ecosystem

- Resistance to change

Software compatibility will likely be the biggest initial hurdle. While many popular services will function, comprehensive software support will take time. Porting software isn’t enough; ensuring stability, performance, and avoiding bugs is critical. No one will build services on shaky foundations.

Lucrative as the server market is, adoption of new hardware, along with the software changes it requires, is always slow and depends on market acceptance. The ARM server ecosystem is still small, and a few new processors won’t drastically change that in the short term. While ARM and Qualcomm have vested interests in ARM server adoption, they have limited influence over software developers. They can’t force developers to prioritize ARM support.

In short, assess your software stack and determine its compatibility with ARM hardware. While ARM support will improve over time, it will be gradual. Developers need to adapt frameworks and applications to the new architecture, which many might postpone until ARM server adoption increases (which could take years). Legacy software support presents another challenge.
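
One way to start that assessment is to inventory each component of the stack and the architectures it ships builds for. The Python sketch below is illustrative: the component names and the inventory are hypothetical, and the alias table covers only common `platform.machine()` strings (`aarch64` on Linux, `arm64` on macOS, `x86_64` or `AMD64` for x86-64).

```python
import platform

# Common machine strings mapped to one canonical name per architecture.
# Illustrative assumption only, not an exhaustive list.
ARCH_ALIASES = {
    "aarch64": "arm64",
    "arm64": "arm64",
    "x86_64": "x86_64",
    "amd64": "x86_64",
}


def normalize_arch(machine: str) -> str:
    """Map an OS-reported machine string to a canonical architecture name."""
    key = machine.lower()
    return ARCH_ALIASES.get(key, key)


def unsupported(stack: dict[str, set[str]], target: str) -> list[str]:
    """Components in `stack` with no known build for the `target` architecture."""
    return sorted(name for name, archs in stack.items() if target not in archs)


# Hypothetical inventory: which architectures each component ships builds for.
stack = {
    "web-server": {"x86_64", "arm64"},
    "database": {"x86_64", "arm64"},
    "legacy-billing-agent": {"x86_64"},
}

print("this host:", normalize_arch(platform.machine()))
print("missing on arm64:", unsupported(stack, "arm64"))  # ['legacy-billing-agent']
```

A component that merely builds on ARM still needs testing for stability and performance, so an empty “missing” list is a starting point rather than a green light.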

This leads to availability and deployment concerns. Few ARM server products exist, so availability is constrained. While ARM-based hosting packages might emerge in a year or two, they’ll likely be limited and concentrated in certain regions. Deployment uncertainties further complicate matters.

Slow adoption poses additional challenges, not unique to ARM servers. Many organizations will explore ARM servers without committing to them. Steady market growth is crucial for developer confidence and consumer demand. Otherwise, risk-averse players might hesitate, adopting a wait-and-see approach. Additionally, if developers doubt the ecosystem’s growth, they might not see a worthwhile return on investment.

Then there’s inertia. The server landscape evolves slowly, so organizations rely on proven platforms, primarily x86. The prevailing attitude is “if it ain’t broke, don’t fix it.” Some industry veterans may embrace ARM servers, but tying projects to a platform perceived as untested requires significant confidence, and many will hesitate to take that risk.

Bright Future And A Pinch Of Hype

Having spent years covering cutting-edge technology, I believe ARM servers have enormous potential, but they’re not a universal solution. They could be instrumental in building tomorrow’s internet, offering affordable building blocks for infrastructure and handling specific server workloads.

However, I also sense an element of hype surrounding ARM servers. Despite this, I don’t see them as a passing trend. They’re here to stay, but vendors need to identify specific niches that benefit from the architecture.

We won’t see ARM-based LAMP web hosting en masse, but they could thrive in specialized and potentially less exciting areas. ARM processors could excel in specific tasks, particularly those leveraging many small cores and not limited by CPU performance. This encompasses a wide range of applications: data logging, processing large volumes of simple queries, certain database types, various storage services, and more.
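
The “large volumes of simple queries” pattern amounts to fanning many small, independent requests out across cores. A minimal Python sketch using the standard library’s `concurrent.futures`; `handle_query` is a hypothetical stand-in for real request handling. In CPython, threads mainly help when handlers wait on I/O; CPU-bound handlers would need `ProcessPoolExecutor` to keep every core busy.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def handle_query(key: int) -> int:
    # Hypothetical stand-in for a small, independent request such as a
    # lookup or a log write; a real handler would hit a cache, database or disk.
    return key * key


def serve_batch(keys, workers=None):
    """Run handle_query over all keys using a pool sized to the core count."""
    workers = workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in input order, whatever order tasks finish in.
        return list(pool.map(handle_query, keys))


results = serve_batch(range(10_000))
print(len(results), results[:5])  # 10000 [0, 1, 4, 9, 16]
```

Because each request is independent, throughput grows with the number of workers until cores or I/O saturate, which is the kind of workload the many-core argument favors.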

While I could continue discussing ARM server use cases, advantages, disadvantages, and potential challenges, their adoption will ultimately hinge on economics. Technological advancements aside, ARM servers need to be more cost-effective than x86 servers to justify their existence.

Since cost-effectiveness is a primary driver for introducing this architecture to the server market, competitive pricing is expected, but we’ll have to wait and see.