Machine learning skills can be effectively enhanced through competitions, which provide access to high-quality datasets and well-defined objectives. This focused approach enables participants to concentrate on developing effective solutions for the given problems.

Recently, my friend and I participated in the [N+1 fish, N+2 fish](https://www.drivendata.org/competitions/48/identify-fish-challenge/) competition. This machine learning competition, heavily focused on image processing, challenges participants to analyze video clips of fish being identified, measured, and either kept or discarded.

This article outlines our approach to the competition's problem, which relied on standard image processing techniques and pre-trained neural network models. Submissions were scored with an aggregate metric defined by the organizers, and our solution placed 11th.

For a concise introduction to machine learning, refer to this article.

Competition Overview

The competition provided videos containing segments with one or multiple fish. These videos were captured on various boats fishing for ground fish in the Gulf of Maine using fixed-position cameras positioned above a ruler. Each fish was placed on the ruler, and after the fisherman removed their hands, the fish was either kept or discarded based on its species and size.

Performance Evaluation

The project’s core objective involved three crucial tasks. The ultimate goal was to develop an algorithm capable of automatically generating annotations for the video files, encompassing:

- The order of appearance of fish

- Species identification of each fish in the video

- Length measurement of each fish in the video

To assess the overall performance, the competition organizers devised an aggregated metric combining individual metrics for each task using specific weights. However, they encouraged participants to prioritize a well-rounded algorithm that contributed effectively to all three tasks.

For a comprehensive understanding of the overall performance metric calculation based on individual task metrics, refer to the official competition web page.
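As a rough illustration of how per-task metrics can be folded into one number, the sketch below computes a weighted sum. Both the weighted-sum form and the equal default weights are assumptions made for this example; the function name `aggregate_score` is hypothetical, and the real formula and weights are the ones given on the competition page.

```python
def aggregate_score(sequence_score, species_score, length_score,
                    weights=(1 / 3, 1 / 3, 1 / 3)):
    """Combine three per-task scores into one weighted sum.

    The default weights are placeholders for illustration only,
    not the values used by the competition organizers.
    """
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights should sum to 1")
    scores = (sequence_score, species_score, length_score)
    return sum(w * s for w, s in zip(weights, scores))


# Example with made-up scores for the three tasks.
print(aggregate_score(0.9, 0.6, 0.3))
```

With any such weighting, a model that does well on only one task is capped by that task's weight, which is consistent with the organizers' advice to aim for a well-rounded algorithm.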

Developing a Machine Learning Solution

Machine learning projects involving images or videos typically utilize convolutional neural networks. However, before employing convolutional neural networks, we needed to preprocess the frames and address other subtasks using different strategies.

We used a single NVIDIA 1080 Ti GPU for training. We spent a significant amount of time optimizing our code to stay competitive, which left less time than we needed for some other areas.

Stage 0: Determining the Number of Unique Boats

Silhouette analysis simplified the task of determining the number of boats. We employed standard techniques, outlined below:

- Randomly sample frames from each video.

- Compute statistics and Speeded Up Robust Features (SURF) for each image.

- Run K-means clustering for several candidate cluster counts and use silhouette analysis to pick the count, which gives the number of boats.

SURF identifies and describes points of interest in an image, demonstrating robustness against various image transformations.

After extracting feature descriptions of points of interest, K-means clustering is performed, followed by silhouette analysis to estimate the approximate number of boats in the images.
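The clustering step can be sketched as follows. This is a minimal NumPy-only illustration, not our competition code: the SURF descriptors are replaced by synthetic 2-D feature vectors, the K-means initialization (farthest-first) is chosen here only to keep the example deterministic, and the function names are hypothetical. In practice a library such as scikit-learn provides `KMeans` and `silhouette_score` for the same purpose.

```python
import numpy as np


def kmeans(points, k, n_iter=50):
    """Lloyd's k-means with a deterministic farthest-first initialization."""
    centers = [points[0]]
    while len(centers) < k:
        # Distance from every point to its nearest already-chosen center.
        d = np.min([np.linalg.norm(points - c, axis=1) for c in centers], axis=0)
        centers.append(points[d.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(n_iter):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = points[labels == j].mean(axis=0)
    return labels


def mean_silhouette(points, labels):
    """Average silhouette coefficient over all points."""
    dists = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    cluster_ids = np.unique(labels)
    scores = []
    for i, own in enumerate(labels):
        same = labels == own
        if same.sum() == 1:
            scores.append(0.0)  # usual convention for single-point clusters
            continue
        a = dists[i, same].sum() / (same.sum() - 1)  # mean intra-cluster distance
        b = min(dists[i, labels == c].mean() for c in cluster_ids if c != own)
        scores.append((b - a) / max(a, b))
    return float(np.mean(scores))


def estimate_cluster_count(points, candidates=range(2, 7)):
    """Return the candidate k with the highest mean silhouette, plus all scores."""
    scores = {k: mean_silhouette(points, kmeans(points, k)) for k in candidates}
    return max(scores, key=scores.get), scores


# Synthetic stand-in for per-frame features from three different boats.
rng = np.random.default_rng(0)
boat_centers = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
points = np.vstack([c + rng.normal(scale=0.5, size=(40, 2)) for c in boat_centers])
best_k, scores = estimate_cluster_count(points)
print(best_k, scores)
```

The silhouette coefficient compares how close each point is to its own cluster with how close it is to the nearest other cluster, so it peaks when the candidate cluster count matches the natural grouping of the frames.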

Stage 1: Identifying Duplicate Frames

Despite the dataset comprising separate video files, we observed overlaps between certain videos, possibly due to splitting a longer video. This resulted in shared frames at the beginning or end of some videos.

We used hash functions on the frames to detect and eliminate such duplicate frames.
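A minimal sketch of hash-based duplicate detection is shown below. It assumes each frame is available as raw bytes and uses SHA-256 from the standard library; the specific hash function and the `find_duplicate_frames` helper are illustrative choices, not necessarily what we ran in the competition.

```python
import hashlib


def find_duplicate_frames(frames):
    """Find frames whose bytes match an earlier frame.

    frames: iterable of (frame_id, raw_bytes) pairs.
    Returns a list of (duplicate_id, first_seen_id) pairs.
    """
    seen = {}
    duplicates = []
    for frame_id, raw in frames:
        digest = hashlib.sha256(raw).hexdigest()
        if digest in seen:
            duplicates.append((frame_id, seen[digest]))
        else:
            seen[digest] = frame_id
    return duplicates


# Toy example: the first frame of video "b" repeats the last frame of video "a".
frames = [("a/0", b"\x00\x01"), ("a/1", b"\x02\x03"), ("b/0", b"\x02\x03")]
print(find_duplicate_frames(frames))
```

An exact hash like this only catches byte-identical frames. If overlapping clips had been re-encoded, a perceptual hash that tolerates small pixel differences would be needed instead.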

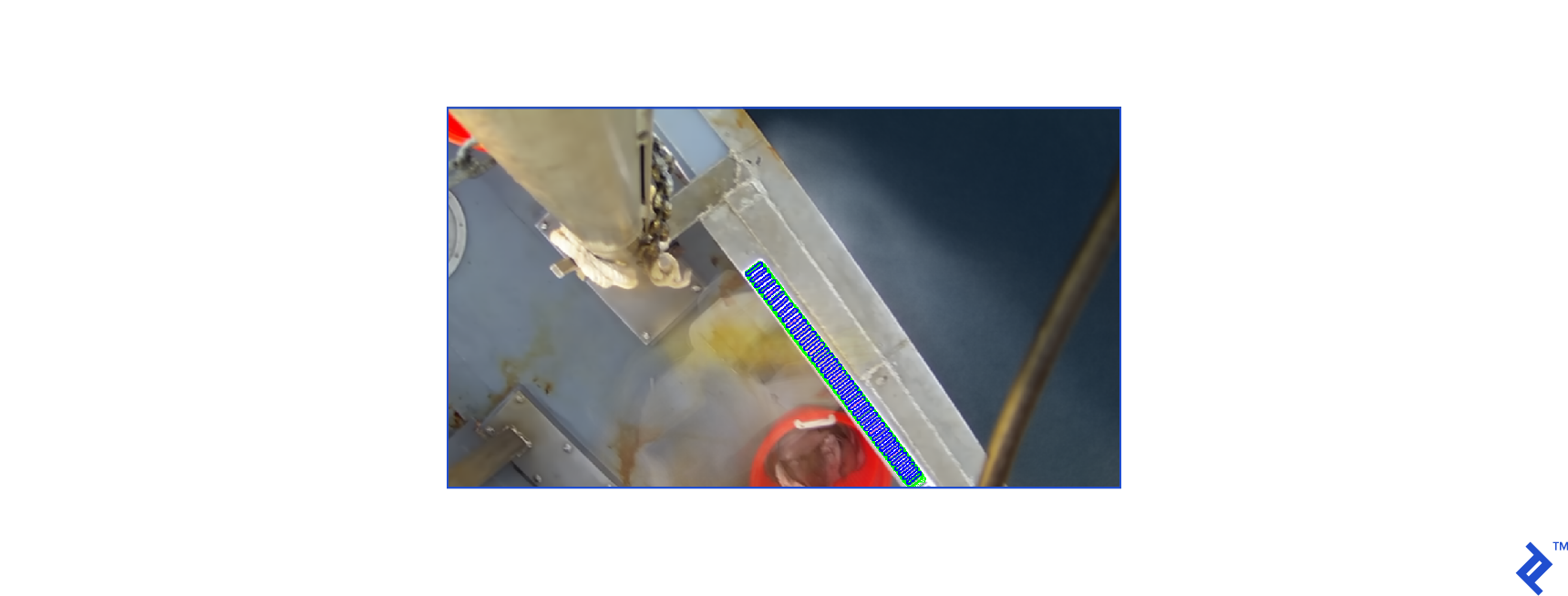

Stage 2: Ruler Localization

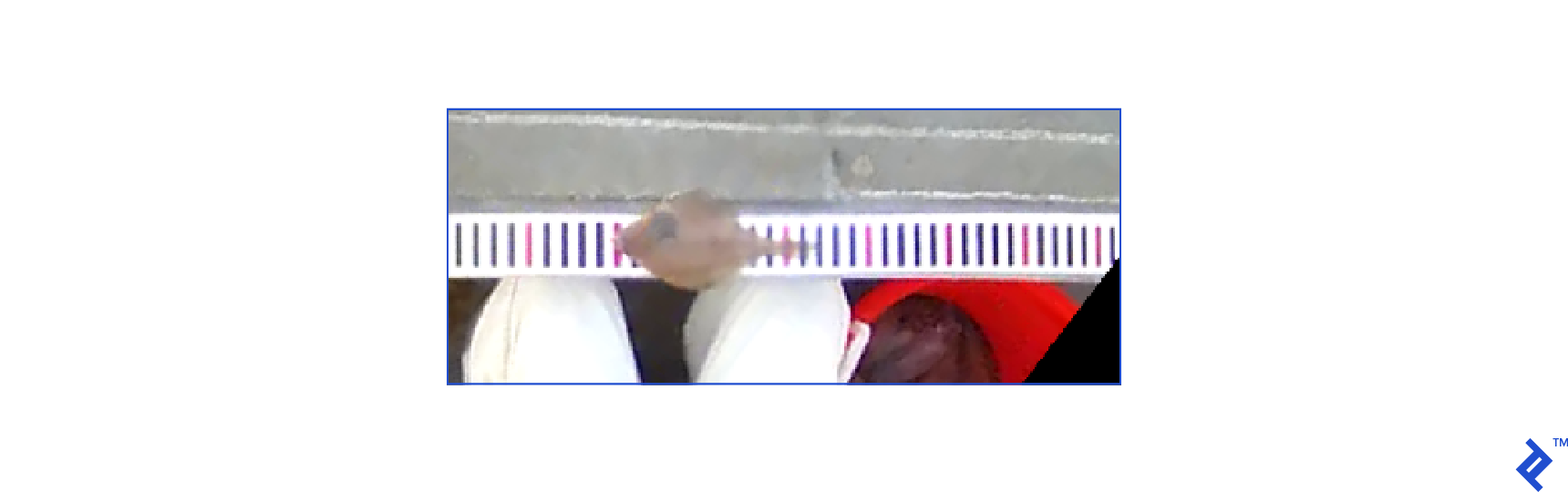

Using standard image processing techniques, we identified the ruler’s position and orientation. Subsequently, we rotated and cropped the image to standardize the ruler’s placement across all frames, resulting in a tenfold reduction in frame size.
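The geometry of the rotate-and-crop step can be sketched as below. The sketch assumes the two ruler endpoints have already been found by some detector (not shown), and all function names are hypothetical. It only computes the rotation angle and crop rectangle; resampling the pixels would be left to an image library, for example OpenCV's `cv2.getRotationMatrix2D` and `cv2.warpAffine`.

```python
import math


def ruler_alignment(p_start, p_end):
    """Return (angle_degrees, center) for the line through the ruler endpoints."""
    (x0, y0), (x1, y1) = p_start, p_end
    angle = math.degrees(math.atan2(y1 - y0, x1 - x0))
    center = ((x0 + x1) / 2.0, (y0 + y1) / 2.0)
    return angle, center


def rotate_point(point, center, angle_degrees):
    """Rotate a point about center by -angle, so the ruler direction maps to +x."""
    theta = math.radians(-angle_degrees)
    dx, dy = point[0] - center[0], point[1] - center[1]
    return (center[0] + dx * math.cos(theta) - dy * math.sin(theta),
            center[1] + dx * math.sin(theta) + dy * math.cos(theta))


def crop_box(p_start, p_end, half_height):
    """Crop rectangle (x_min, y_min, x_max, y_max) in the rotated frame."""
    angle, center = ruler_alignment(p_start, p_end)
    xs = [rotate_point(p, center, angle)[0] for p in (p_start, p_end)]
    return (min(xs), center[1] - half_height, max(xs), center[1] + half_height)


# A ruler lying on a 45-degree diagonal becomes a horizontal strip.
print(crop_box((0, 0), (10, 10), half_height=2))
```

Because the cameras were fixed, one alignment per boat or video is enough in principle, and every frame can then be cropped to the same small strip around the ruler.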

Detected ruler (overlaid on the average frame):

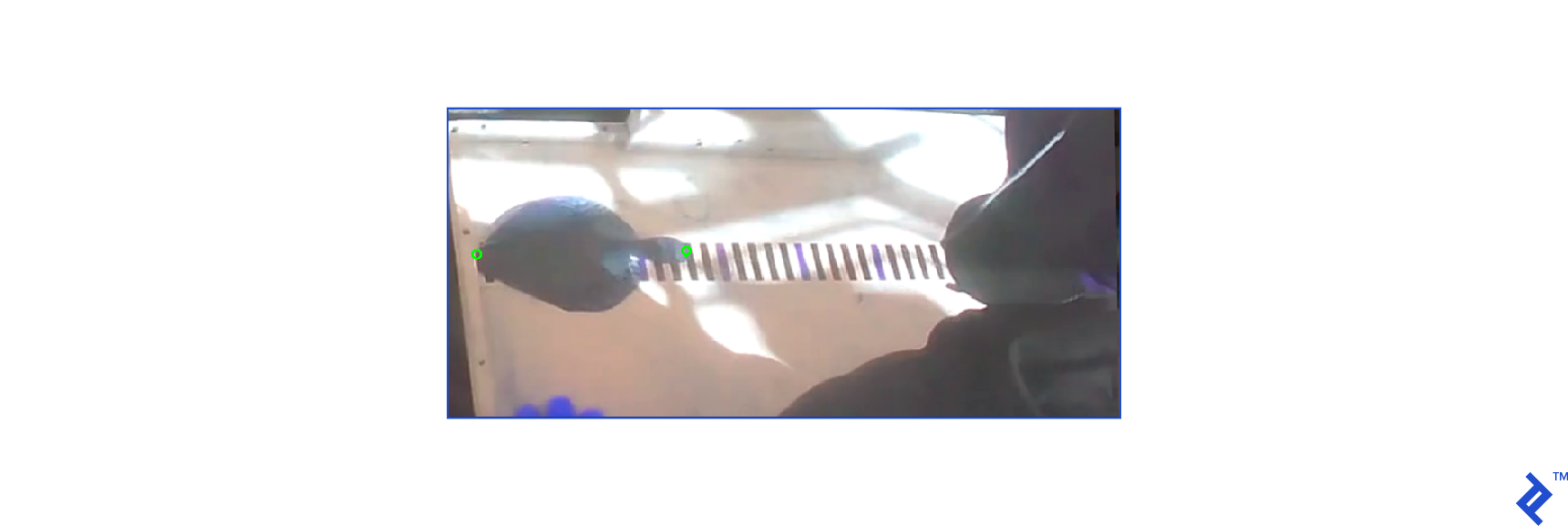

Cropped and rotated region:

Stage 3: Determining Fish Sequence

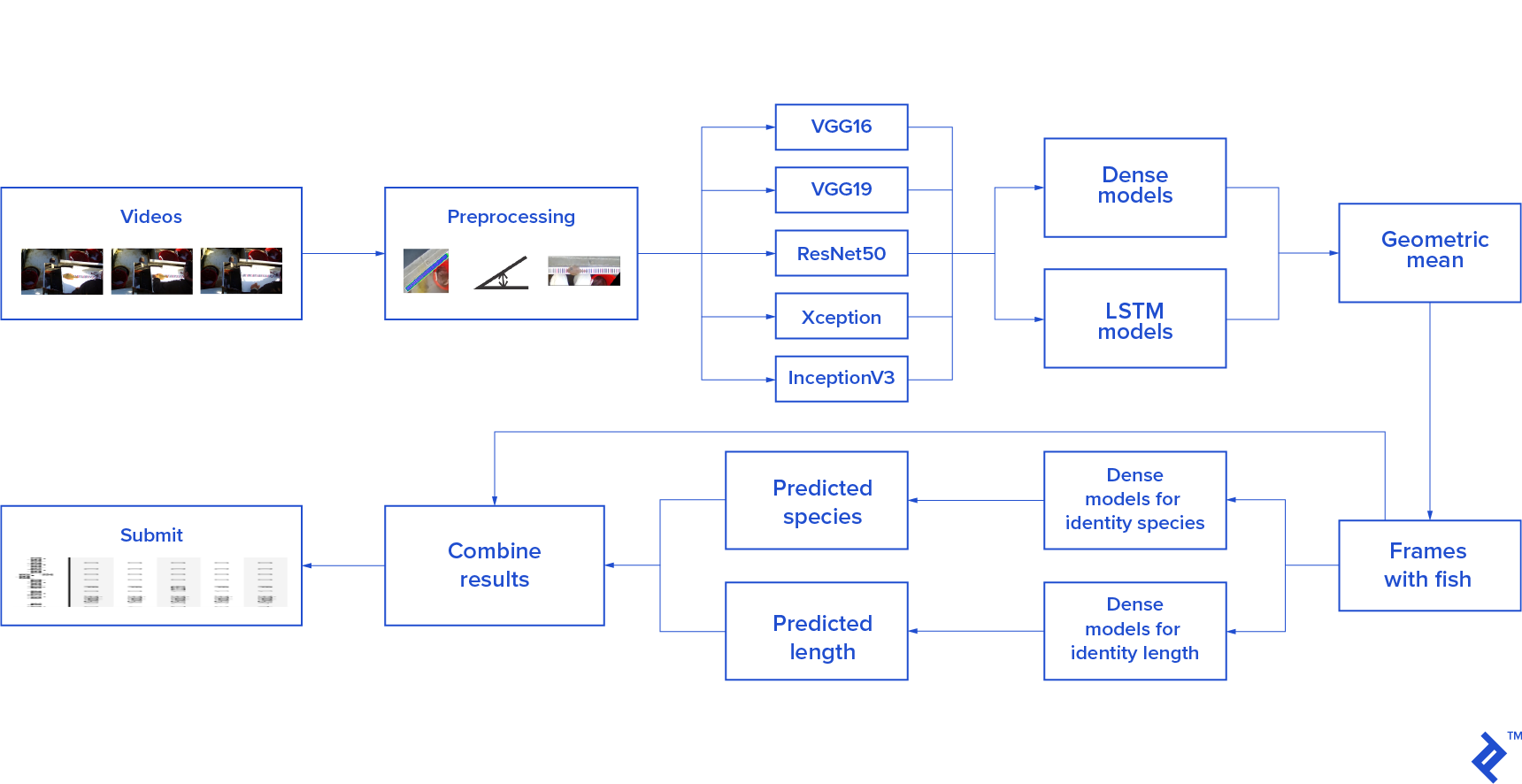

Implementing this stage consumed a considerable portion of our time. Training convolutional neural networks from scratch seemed too computationally expensive, so we opted for pre-trained models.

These models were trained on the ImageNet dataset.

We extracted only the convolutional layers from these models and passed our competition dataset through them, obtaining a compact feature array.

Subsequently, we trained neural networks with fully connected dense layers and generated predictions using each pretrained model. Averaging these predictions yielded unsatisfactory results.

We then switched to long short-term memory (LSTM) neural networks to improve the predictions. Each network received a sequence of five frames as input, with every frame first transformed by the pretrained models.
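Building the five-frame input sequences might look like the sketch below. It assumes each window is centered on the frame being predicted and that the first and last frames are repeated as padding; both choices, and the `frame_windows` helper itself, are assumptions made for illustration.

```python
def frame_windows(features, window=5):
    """Return one window of consecutive per-frame features for every frame.

    Each window is centered on its frame; indices past either end of the
    video are clamped, which repeats the first or last frame as padding.
    """
    if window % 2 == 0:
        raise ValueError("window must be odd so it can be centered")
    half = window // 2
    n = len(features)
    windows = []
    for i in range(n):
        idx = [min(max(j, 0), n - 1) for j in range(i - half, i + half + 1)]
        windows.append([features[j] for j in idx])
    return windows


# Letters stand in for the per-frame feature vectors.
for w in frame_windows(list("abcdefg")):
    print(w)
```

Feeding neighboring frames together lets the recurrent network use temporal context, such as hands moving away from the ruler, that a single-frame classifier cannot see.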

The geometric mean was used to combine the outputs of all models.

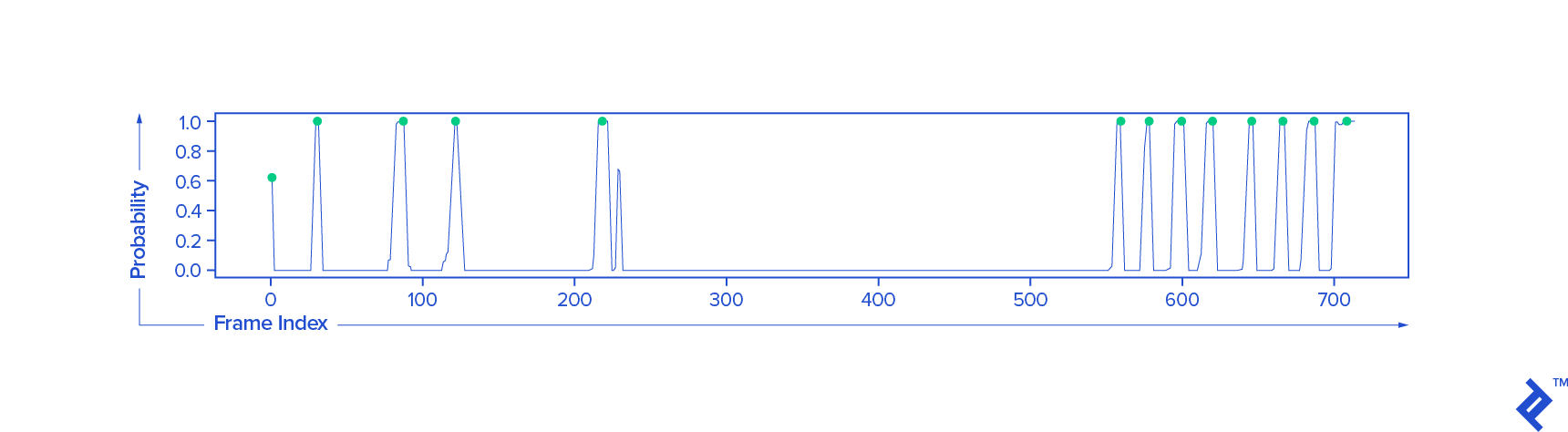

The fish detection pipeline involved the following steps:

- Generate features using pretrained models.

- Predict fish appearance probability using a dense neural network.

- Generate LSTM features using pretrained models.

- Predict fish appearance probability using an LSTM neural network.

- Merge model outputs using the geometric mean.
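The final merging step can be sketched as a per-frame geometric mean over the models' probabilities. The helper name and the small `eps` floor (which avoids taking the logarithm of zero) are assumptions added for this example.

```python
import math


def geometric_mean_ensemble(model_probs, eps=1e-9):
    """Merge per-frame probabilities from several models.

    model_probs: list of probability lists, one per model, all the same length.
    Returns one merged probability per frame.
    """
    n_models = len(model_probs)
    merged = []
    for frame_probs in zip(*model_probs):
        # Average in log space, then exponentiate: the n-th root of the product.
        log_sum = sum(math.log(max(p, eps)) for p in frame_probs)
        merged.append(math.exp(log_sum / n_models))
    return merged


# Two hypothetical models scoring two frames.
print(geometric_mean_ensemble([[0.9, 0.1], [0.4, 0.1]]))
```

Compared with an arithmetic mean, the geometric mean is pulled down more strongly by a single low score, so a frame gets a high merged probability only when the models broadly agree.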

The result for a sample video:

Stage 4: Fish Species Identification

Having spent a significant portion of the competition on the previous stage, we aimed to compensate by utilizing models from that stage for fish species identification.

Our approach involved:

- Adding dense layers to convolutional pretrained models (VGG16, VGG19, ResNet50, Xception, InceptionV3), keeping the convolutional layers’ weights fixed.

- Training models with minimal image augmentation.

- Predicting species using each model.

- Combining model predictions through voting.
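The voting step could be implemented along these lines. The sketch assumes hard majority voting over each model's predicted label; the article does not specify the exact scheme, and the species labels below are placeholders.

```python
from collections import Counter


def majority_vote(per_model_labels):
    """Pick the most common label per fish.

    per_model_labels: list of label lists, one per model, aligned by fish.
    Ties go to the label that was encountered first, i.e. the earlier model.
    """
    results = []
    for votes in zip(*per_model_labels):
        results.append(Counter(votes).most_common(1)[0][0])
    return results


# Three hypothetical models, three fish.
predictions = [
    ["species_a", "species_b", "species_a"],
    ["species_a", "species_b", "species_c"],
    ["species_b", "species_a", "species_a"],
]
print(majority_vote(predictions))
```

An alternative would be soft voting, which averages the predicted class probabilities instead of counting labels.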

Stage 5: Fish Length Detection

For determining fish length, we utilized two neural networks. One network was trained to identify fish heads, while the other identified fish tails. The fish’s length was then approximated as the distance between these two identified points.
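Given the two predicted points, the length computation itself is short. In the sketch below, `pixels_per_unit` is a hypothetical calibration factor for converting pixel distances into physical units; how such a factor would be obtained (for example, from the ruler markings) is not covered here.

```python
import math


def fish_length(head_xy, tail_xy, pixels_per_unit=1.0):
    """Approximate fish length as the straight-line head-to-tail distance."""
    dx = head_xy[0] - tail_xy[0]
    dy = head_xy[1] - tail_xy[1]
    return math.hypot(dx, dy) / pixels_per_unit


# Head and tail 300 pixels apart, at an assumed 10 pixels per unit of length.
print(fish_length((10, 20), (310, 20), pixels_per_unit=10))
```

A straight-line distance underestimates the length of a fish that lies curved on the ruler, which is one reason this is only an approximation.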

Complete System Overview

The following diagram illustrates the overall system design:

The system followed a straightforward design, passing video frames through the outlined stages and finally combining the individual results.