I was first introduced to Spark in late 2013 while exploring Scala, the programming language behind Spark. Later, I embarked on an engaging data science project aiming to predict survival rates from the Titanic disaster](https://www.kaggle.com/c/titanic-gettingStarted). This proved to be an excellent method to delve deeper into Spark’s concepts and programming techniques. I highly recommend this approach to any aspiring [Spark programmers seeking a starting point.

Today, industry giants like Amazon, eBay, and Yahoo! have embraced Spark. Numerous organizations utilize Spark on expansive clusters with thousands of nodes. As per the Spark FAQ, the largest known cluster boasts over 8000 nodes. Undoubtedly, Spark is a technology deserving of attention and study.

This article offers a primer on Spark, encompassing use cases and illustrative examples. It incorporates information from the Apache Spark website and the book Learning Spark - Lightning-Fast Big Data Analysis.

Introducing Apache Spark

Spark is an Apache project promoted as “lightning fast cluster computing.” Boasting a vibrant open-source community, it currently stands as the most active Apache project.

Spark presents a swifter and more versatile data processing framework. With Spark, you can execute programs up to 100 times faster in memory, or 10 times faster on disk, compared to Hadoop. In the past year, Spark outperformed Hadoop by completing the 100 TB Daytona GraySort competition three times faster, utilizing a tenth of the machines, and securing its position as the fastest open source engine for sorting a petabyte.

Spark also accelerates code writing by providing over 80 high-level operators. To illustrate this, let’s examine the “Hello World!” of Big Data: the Word Count example. Implemented in Java for MapReduce, it requires approximately 50 lines of code. In contrast, using Spark (and Scala), you can achieve the same result with this simple code:

| |

Another noteworthy aspect of Apache Spark is its out-of-the-box interactive shell (REPL). REPL allows you to test each line of code’s output without needing to write and run the entire job. This streamlines the path to functional code and empowers ad-hoc data analysis.

Spark’s key features include:

- APIs currently available in Scala, Java, and Python, with support for other languages (such as R) in development

- Seamless integration with the Hadoop ecosystem and data sources (HDFS, Amazon S3, Hive, HBase, Cassandra, etc.)

- Ability to run on clusters managed by Hadoop YARN or Apache Mesos, as well as in standalone mode

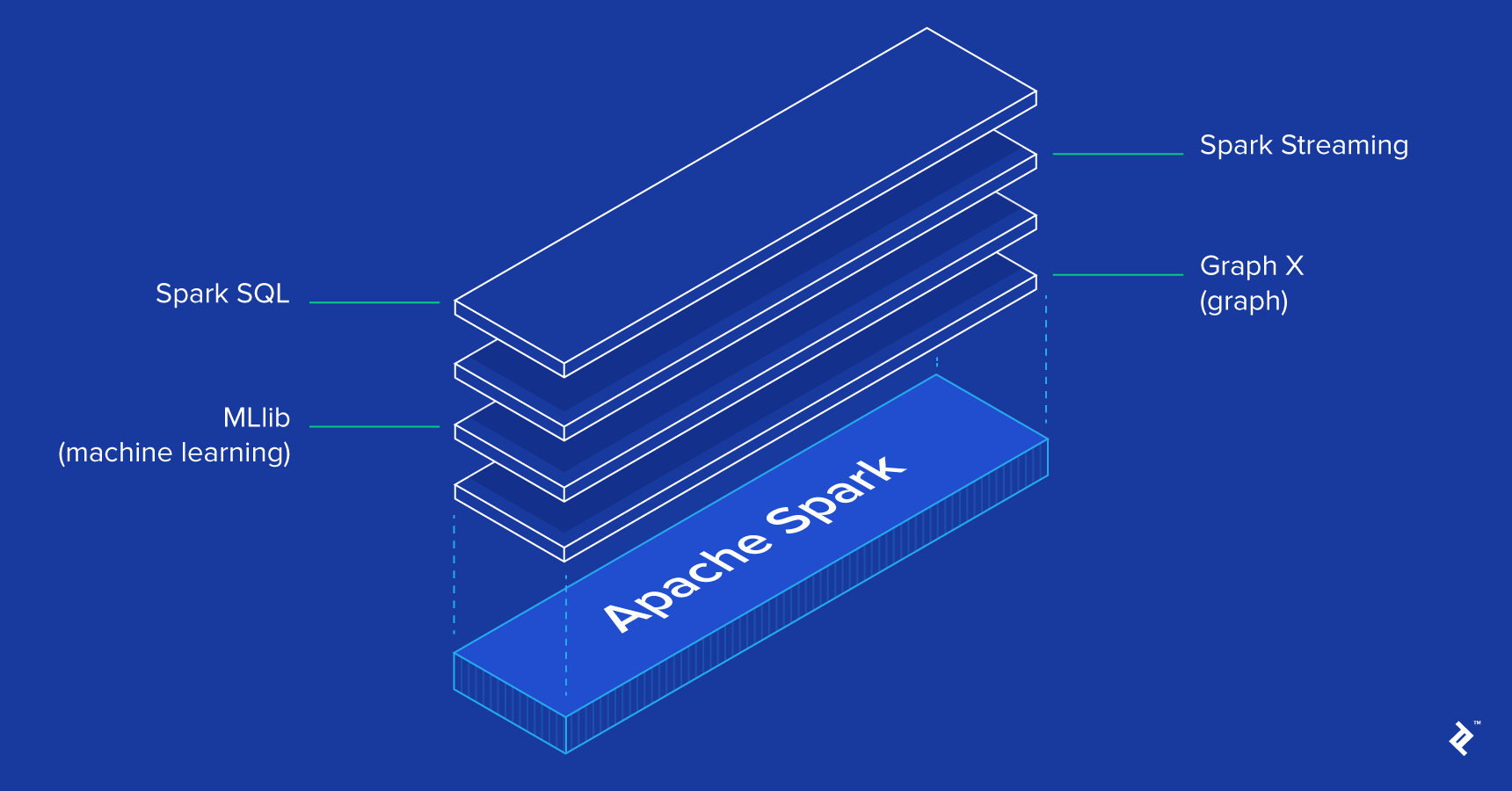

Complementing the Spark core are several potent, higher-level libraries that can be seamlessly incorporated into the same application. These libraries currently comprise SparkSQL, Spark Streaming, MLlib (for machine learning), and GraphX, each explored in greater detail within this article. Further Spark libraries and extensions are also under development.

Spark Core

Spark Core forms the foundation for large-scale parallel and distributed data processing. Its responsibilities include:

- memory management and fault recovery

- scheduling, distributing, and monitoring jobs within a cluster

- interacting with storage systems

Spark introduces the concept of an RDD (Resilient Distributed Dataset), an immutable, fault-tolerant, and distributed collection of objects suitable for parallel operations. An RDD can hold any object type and is generated by loading an external dataset or distributing a collection from the driver program.

RDDs support two operation types:

- Transformations are operations (e.g., map, filter, join, union) performed on an RDD, yielding a new RDD containing the results.

- Actions are operations (e.g., reduce, count, first) that return a value after computation on an RDD.

Transformations in Spark are “lazy,” delaying result computation. Instead, they “remember” the operation and its target dataset. Actual computation occurs only when an action is invoked, returning the result to the driver program. This design enhances Spark’s efficiency. For instance, if a large file undergoes transformations before the first action, Spark processes and returns only the first line’s result, rather than processing the entire file.

By default, transformed RDDs might be recomputed with each action. However, you can persist an RDD in memory using the persist or cache methods. Spark then retains the elements within the cluster for faster access during subsequent queries.

SparkSQL

SparkSQL is a Spark component enabling data querying via SQL or the Hive Query Language. Originating as the Apache Hive port to run on Spark (replacing MapReduce), it is now an integral part of the Spark ecosystem. Supporting various data sources, it empowers the interweaving of SQL queries with code transformations, creating a remarkably powerful tool. Below is an example of a Hive-compatible query:

| |

Spark Streaming

Spark Streaming enables real-time processing of streaming data, including production web server log files (e.g., Apache Flume and HDFS/S3), social media feeds like Twitter, and diverse messaging queues like Kafka. Behind the scenes, Spark Streaming receives and divides input data streams into batches. The Spark engine then processes these batches, generating a final stream of results in batches, as illustrated below.

The close resemblance between Spark Streaming’s API and Spark Core’s simplifies the transition for programmers working with both batch and streaming data.

MLlib

MLlib](https://spark.apache.org/mllib/) is a machine learning library offering scalable algorithms designed for cluster deployment. These algorithms address classification, regression, clustering, collaborative filtering, and more (refer to Toptal’s article on [machine learning for more information on that topic). Some of these algorithms also work with streaming data, such as linear regression using ordinary least squares or k-means clustering (and more on the way). Apache Mahout (a machine learning library for Hadoop) has already shifted from MapReduce and joined forces with Spark MLlib.

GraphX

GraphX is a library for graph manipulation and parallel graph operations. It provides a unified tool for ETL, exploratory analysis, and iterative graph computations. Besides built-in graph manipulation operations, it offers a library of common graph algorithms like PageRank.

Applying Apache Spark: Event Detection Use Case

Having addressed “What is Apache Spark?”, let’s consider its ideal problem-solving applications.

I recently encountered an article about an experiment to detect an earthquake by analyzing a Twitter stream. Remarkably, this technique could potentially detect earthquakes in Japan faster than the Japan Meteorological Agency. While the article employed different technology, it exemplifies how we could leverage Spark with simplified code snippets, omitting the glue code.

Initially, we’d filter relevant tweets containing keywords like “earthquake” or “shaking.” Spark Streaming is perfect for this:

| |

Next, we’d perform semantic analysis to identify tweets referencing a current earthquake. For example, “Earthquake!” or “Now it is shaking” would be positive matches, while “Attending an Earthquake Conference” or “The earthquake yesterday was scary” wouldn’t. The authors utilized a support vector machine (SVM). We’ll follow suit, but we could also explore a streaming version. An example using MLlib might look like this:

| |

Satisfied with the model’s prediction accuracy, we could proceed to the next stage: reacting to detected earthquakes. Detection would involve identifying a specific density of positive tweets within a defined time window, as described in the article. Tweets with location services would also provide earthquake locations. With this data, we could use SparkSQL to query a Hive table (containing users subscribed to earthquake notifications) to retrieve their email addresses and send personalized warnings:

| |

Other Use Cases for Apache Spark

Spark’s potential extends far beyond earthquake detection.

Here’s a glimpse into other use cases demanding Spark’s prowess in handling Big Data’s velocity, variety, and volume:

In gaming, processing and deciphering patterns from the deluge of real-time in-game events, and reacting instantly, presents lucrative opportunities. This could enhance player retention, targeted advertising, and automatic complexity adjustments.

In e-commerce, real-time transaction data could feed a streaming clustering algorithm like k-means or collaborative filtering like ALS. Combining these results with unstructured data (customer comments, product reviews) could refine recommendations to reflect emerging trends.

In finance or security, Spark could power fraud/intrusion detection systems or risk-based authentication. Analyzing vast archived logs, merging them with data on breaches and compromised accounts (see https://haveibeenpwned.com/), and incorporating connection/request details like IP geolocation or time, would deliver superior results.

Conclusion

In essence, Spark simplifies the demanding task of processing large volumes of real-time or archived data, structured or unstructured. Its seamless integration of complex capabilities like machine learning and graph algorithms makes Big Data processing accessible. Explore Spark!