Not long ago, most marketers had no clue about using AI for marketing. Now, we’re suddenly experts on crafting the perfect prompts for ChatGPT.

The integration of AI into our work is exciting, and McKinsey predicts AI will unlock $2.6 trillion in value for marketers.

However, our eagerness to adopt AI might be outpacing crucial ethical, legal, and operational considerations. This exposes marketers to new risks, such as potential lawsuits for instructing AI to “write like Stephen King.”

The world of AI marketing is currently a whirlwind of uncertainty. It’s challenging to fully grasp the potential pitfalls of using large language models and machine learning for content creation and ad management.

This article aims to provide a high-level overview of seven significant risks associated with using AI for marketing. We’ve gathered insights from experts on mitigating these risks and included resources for further exploration.

Risk #1: Machine Learning Bias

Machine learning algorithms can produce results that unfairly favor or disadvantage individuals or groups. This issue, known as machine learning bias or AI bias, is prevalent even in advanced deep neural networks.

A Data Problem

AI networks aren’t inherently biased; the problem lies in the data they learn from. Machine learning algorithms identify patterns to predict outcomes, such as whether specific shopper groups will favor a product. However, if the training data is skewed towards certain demographics (race, gender, age), the AI might wrongly assume these groups are a better match, leading to biased ad creatives or placements.

bias laundering edition pic.twitter.com/YQLRcq59lQ

— Janelle Shane (@JanelleCShane) June 17, 2021

For instance, researchers recently tested for gender bias within Facebook’s ad targeting. When placing ads for Pizza Hut and Instacart delivery driver positions (with identical qualifications), Facebook displayed the Pizza Hut ads predominantly to men and the Instacart ads to women, aligning with the existing gender distribution of their driver pools. This approach is flawed as it assumes women wouldn’t be interested in the Pizza Hut jobs.

AI Bias is Widespread

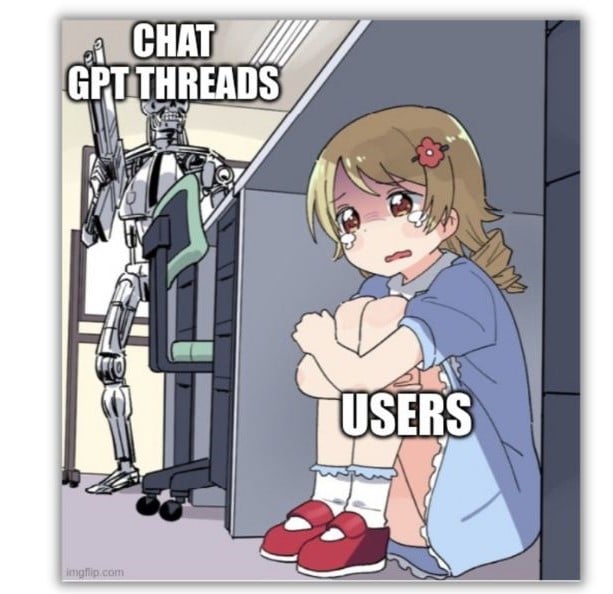

This issue extends beyond Facebook. USC researchers analyzing two major AI databases found that over 38% of the data within them was biased. ChatGPT’s documentation even warns that its algorithm might associate “negative stereotypes with black women.”

Machine learning bias has several consequences for marketers, including subpar ad performance. An ad targeting system that excludes significant portions of the population is counterproductive if your goal is to reach the widest audience.

More importantly, biased ads raise ethical concerns. Real estate ads that discriminate against protected groups could lead to legal repercussions under the Fair Housing Act and with the Federal Trade Commission, not to mention falling out of step with the inclusive marketing movement.

Avoiding AI Bias

So, how do we address this issue? Several steps can ensure your ads treat everyone fairly. First and foremost, human review of your content is crucial, writes Alaura Weaver, Senior Manager of Content and Community at Writer. “While AI technology has advanced, it lacks the critical thinking and decision-making abilities of humans,” she explains. “Human editors can fact-check and ensure AI-written content is free from bias and adheres to ethical standards.” Human oversight is equally vital for paid ad campaigns to prevent negative outcomes. “Currently, and possibly indefinitely, allowing AI to completely control campaigns or any aspect of marketing is not advisable,” says Brett McHale, Founder of Empiric Marketing. “AI works best with accurate input from organic intelligence that possesses extensive data and experience.”
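For paid campaigns, one thing a human reviewer could do is compare who actually saw an ad against who you expected to see it. The Python sketch below is only an illustration under stated assumptions: the `impressions` rows, the `group` field, the 50/50 expected split, and the 0.15 tolerance are all hypothetical, and a real ad platform export will look different.

```python
from collections import Counter

def delivery_share(impressions):
    """Return each group's share of total ad impressions."""
    counts = Counter(row["group"] for row in impressions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {group: n / total for group, n in counts.items()}

def flag_skew(shares, expected, tolerance=0.15):
    """List groups whose actual share differs from the expected share
    by more than the tolerance (an arbitrary, hypothetical threshold)."""
    return sorted(
        group
        for group, target in expected.items()
        if abs(shares.get(group, 0.0) - target) > tolerance
    )

# Hypothetical export: one row per impression of a job ad.
impressions = [{"group": "men"}] * 80 + [{"group": "women"}] * 20

shares = delivery_share(impressions)
skewed = flag_skew(shares, expected={"men": 0.5, "women": 0.5})

print(shares)
print(skewed)
```

A flagged group is not proof of bias. It is a prompt for a person to look at the campaign's targeting settings and decide whether the skew is justified.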

Risk #2: Factual Errors

Google’s new AI chatbot, Bard, recently cost its parent company $100 billion in market value when it provided an incorrect answer in a promotional tweet.

Bard is an experimental conversational AI service, powered by LaMDA. Built using our large language models and drawing on information from the web, it’s a launchpad for curiosity and can help simplify complex topics → https://t.co/fSp531xKy3 pic.twitter.com/JecHXVmt8l

— Google (@Google) February 6, 2023

This blunder highlights a major AI limitation and a significant risk for marketers: AI doesn’t always get its facts straight.

AI Hallucinates

Ethan Mollick, a professor at the Wharton School of Business, recently compared AI-powered systems like ChatGPT to an “omniscient, eager-to-please intern who occasionally lies.” Of course, AI lacks sentience, despite what some may claim. It doesn’t intentionally mislead; however, it can experience “hallucinations,” leading to fabricated information.

AI operates on prediction, aiming to find the next word or phrase to answer your query. It lacks self-awareness and the logical reasoning to assess the validity of its generated content.

Unlike bias, this isn’t necessarily a data issue. Even with accurate information, AI can still provide incorrect answers.

Take, for example, when a user asked ChatGPT “How many times did Argentina win the FIFA World Cup?” It incorrectly stated once, referencing the 1978 victory. The user then inquired about the 1986 winner.

Asked #ChatGPT abt who won the FIFA world cup in 2022. It couldn’t answer. That’s expected. However, it seems to provide wrong information (abt the other 2 wins) even though the information is there in the system. Any #Explanations? pic.twitter.com/fvxe05N12p

— indranil sinharoy (@indranil_leo) December 29, 2022

The chatbot correctly identified Argentina as the winner, without acknowledging its previous mistake. Disturbingly, AI often presents erroneous answers confidently, seamlessly blending them into the surrounding text and making them appear plausible. The comprehensiveness of these fabrications is evident in a lawsuit against OpenAI, where ChatGPT allegedly invented an embezzlement story that a journalist then published.

Avoiding AI Hallucinations

While AI can mislead with simple answers, it’s more prone to errors when generating longer texts. “AI can create a blog post or an eBook from a single prompt. While amazing, there’s a caveat,” Weaver cautions. “The more it generates, the more editing and fact-checking are required.” To minimize the risk of AI hallucinations, Weaver recommends outlining your content and having the AI tackle one section at a time. Importantly, always have a human review the generated facts and statistics.
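To make the human fact-check a little easier, you could pull out the sentences most likely to contain a checkable claim. The Python sketch below is a rough illustration, not a fact-checker: the function name `sentences_to_fact_check` is hypothetical, it only looks for digits and percent signs, and it will miss claims about names, places, or events that contain no numbers.

```python
import re

def sentences_to_fact_check(text):
    """Return sentences that contain a digit or percent sign,
    so a human editor can verify them first."""
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if re.search(r"\d|%", s)]

# Hypothetical AI-generated draft for one section of an outline.
draft = (
    "Argentina has won the FIFA World Cup once, in 1978. "
    "The team is known for its passionate fans. "
    "Over 38% of the data was biased."
)

flagged = sentences_to_fact_check(draft)
for sentence in flagged:
    print("CHECK:", sentence)
```

Running this section by section, in line with Weaver's advice, keeps the list of flagged sentences short enough for an editor to review.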

Risk #3: Misapplication of AI Tools

Every day brings a wave of new AI tools, but not all platforms are suitable for every marketing function, and some marketing challenges remain beyond AI’s current capabilities.

Limitations of AI Tools

ChatGPT serves as a prime example. It excels at playful tasks, like explaining how to remove a peanut butter sandwich from a VCR in the style of the King James Bible, and at generating well-written short-form content. However, it falters when asked to assist with keyword research. ChatGPT’s limitation stems from its outdated data set, which only includes information up to 2022. Requesting keywords for “AI marketing” yields results that don’t align with findings from tools like Thinword or Contextminds. Similarly, while Google and Facebook offer AI-powered tools for ad creation, optimization, and personalization, a chatbot cannot address these challenges.

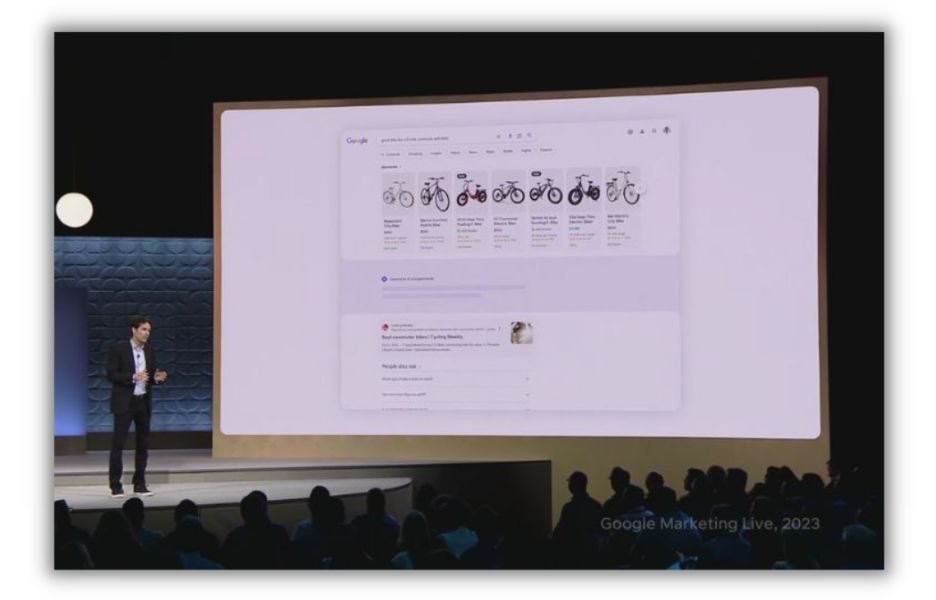

Google showcased numerous AI enhancements to its search and ads management platforms at the 2023 Google Marketing Live event.

Overreliance on AI

Tasking AI with a singular objective can lead to over-optimization. Nick Abbene, a marketing automation expert, observes this frequently with companies focused on SEO. “The biggest issue I encounter is blind reliance on SEO tools, resulting in over-optimization for search engines and neglecting customer search intent,” Abbene explains. “While SEO tools are valuable for signaling content quality to search engines, Google’s ultimate goal is to satisfy the searcher’s query.”

Avoiding AI Tool Misapplication

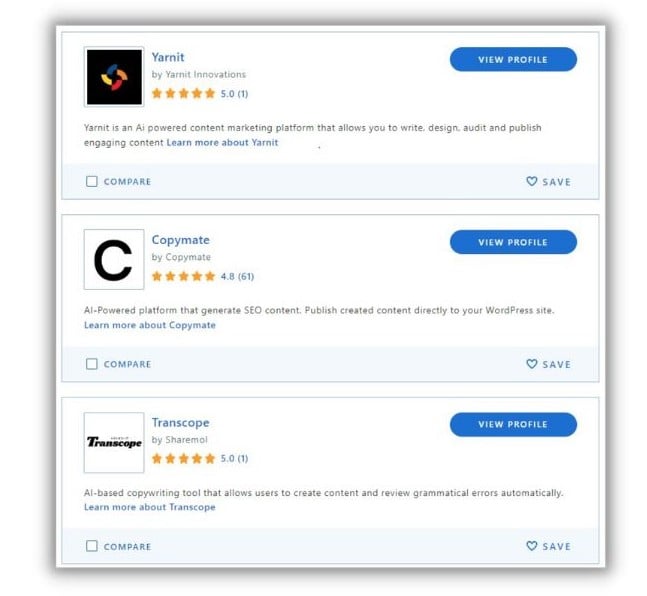

Just as a wrench isn’t ideal for hammering nails, an AI writing assistant might not be suitable for web page creation. Before fully committing to any AI tool, Abbene advises seeking feedback from its developers and other users. “To avoid selecting the wrong tool, determine if other marketers are using it for your specific use case,” he suggests. “Request product demos or trial it alongside similar tools.” Websites like Capterra facilitate the comparison of various AI platforms.

Once you’ve found the appropriate AI tool stack, utilize it as an aid, not a replacement for your expertise. “Don’t hesitate to leverage AI tools, but remember they are tools,” Abbene emphasizes. “Start each content piece with thorough keyword research and an understanding of search intent.”

Risk #4: Repetitive Content

AI can generate an essay in seconds, but its impressive speed comes at the cost of creativity and nuance, often resulting in robotic-sounding output. “While AI excels at producing informative content, it often lacks the creative flair and engagement that humans bring,” says Weaver.

AI as an Imitator

Ask an AI writing tool to write a book report, and it can easily produce a 500-word analysis of Catcher in the Rye’s main themes (assuming it doesn’t hallucinate Holden Caulfield as a bank robber). Its ability stems from having processed thousands of texts about J.D. Salinger’s novel. However, when tasked with crafting a blog post that conveys a core business concept while reflecting your brand, audience, and value proposition, the results might disappoint. “AI-generated content doesn’t always grasp the nuances of brand personality and values, potentially missing the mark,” Weaver points out.

In essence, AI excels at digesting, combining, and re-presenting existing content, but struggles to create something truly unique.

Additionally, generative AI tools lack the finesse to make content engaging. They produce large chunks of text without visual aids like images, graphs, or bullet points. They struggle to incorporate customer stories, hypothetical examples, or connect industry news to your product’s benefits.

Avoiding Homogenous Content

While some AI tools, like Writer, offer features to maintain brand consistency, human editors remain crucial. They can “review and edit content for brand voice and tone, ensuring it resonates with the audience and reinforces the organization’s messaging,” Weaver advises. Human editors also possess the ability to perceive content from a reader’s perspective, breaking up text walls and adding visual appeal. Therefore, treat AI-generated content as a starting point to spark your creativity and research, but always infuse it with your personal touch.

Risk #5: SEO Degradation

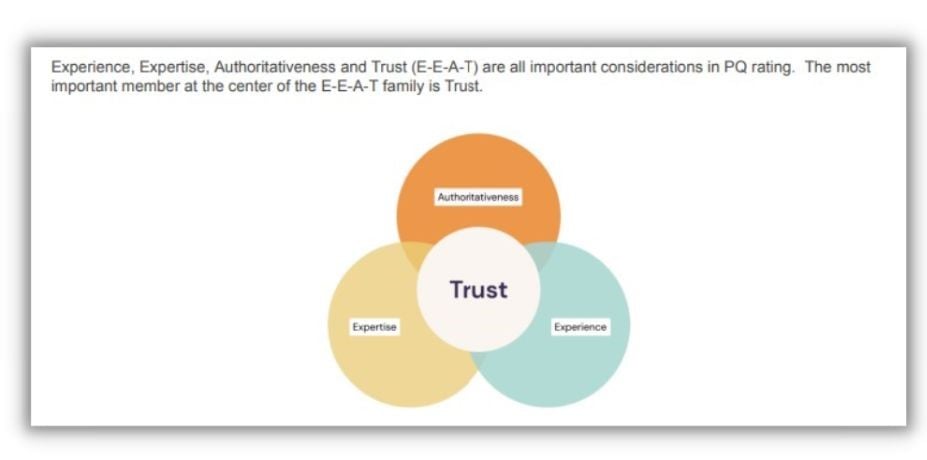

Google’s stance on AI content has been somewhat unclear. Initially, it seemed the search engine might penalize AI-written content. However, more recently, Google’s developer blog stated that AI-generated content is acceptable. Yet, this confirmation comes with a significant caveat: Only content exhibiting “qualities of E-E-A-T: expertise, experience, authoritativeness, and trustworthiness” will satisfy Google’s human search raters, who constantly evaluate ranking systems.

Trust as the Cornerstone of SEO

Among Google’s E-E-A-T factors, trust reigns supreme.

As previously discussed, AI content’s susceptibility to factual errors makes it inherently untrustworthy without human oversight. It also falls short of the other E-E-A-T requirements as it typically lacks genuine expertise, authority, or experience.

Imagine a blog post about baking banana bread. An AI can provide a recipe instantly, but it can’t replicate the personal touch of sharing cherished family baking memories or years of experience experimenting with different flours as a professional baker. These are the elements Google’s search raters seek.

It appears that human users crave authenticity as well, which explains why many are turning to real people on TikTok to find information they previously sought on Google.

Avoiding SEO Degradation

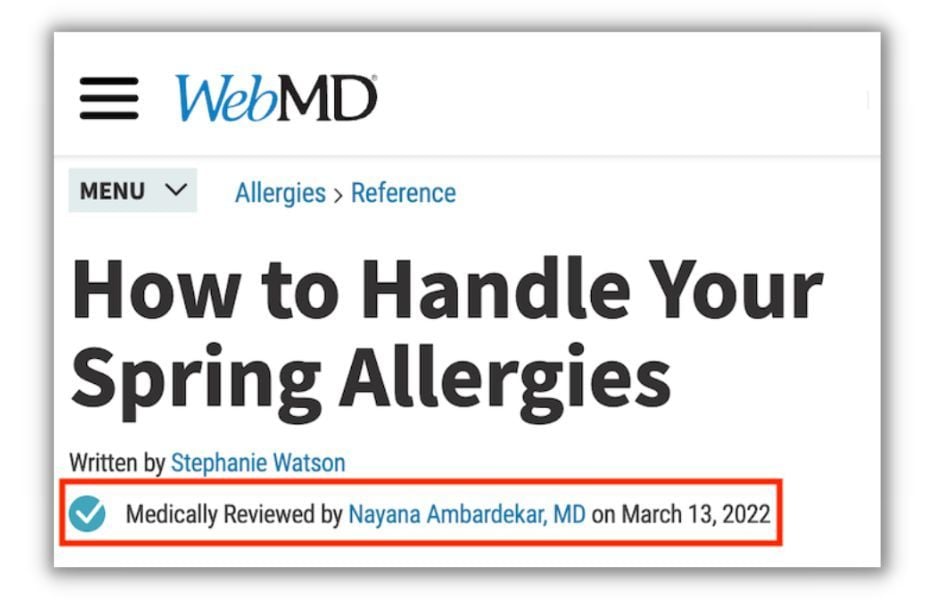

The beauty of AI lies in its willingness to share credit. When utilizing a chatbot to expedite content creation, always attribute the work to a human author with relevant credentials. This is particularly crucial for sensitive topics like healthcare and personal finance, categorized as Your Money, Your Life (YMYL) topics by Google. “For YMYL verticals, prioritize authority, trust, and accuracy above all else,” advises Elisa Gabbert, Director of Content and SEO for nexus-security and LocaliQ.

For instance, when writing about healthcare, have a medical professional review your content and acknowledge their contribution within the post. This signals trustworthiness to Google, even if the content originated from a chatbot.

We delve deeper into using ChatGPT for SEO effectively in this article.

Risk #6: Legal Ramifications

Generative AI learns from human-created work to generate new(ish) content, raising copyright concerns for both the input and output of the AI content model.

AI’s Use of Existing Work

To illustrate the copyright dilemma surrounding works used to train large language models, consider a case reported by technologist Andy Baio. As Baio explains, an LA-based artist, Hollie Mengert, discovered that 32 of her illustrations had been incorporated into an AI model and were being offered for recreation under an open license.

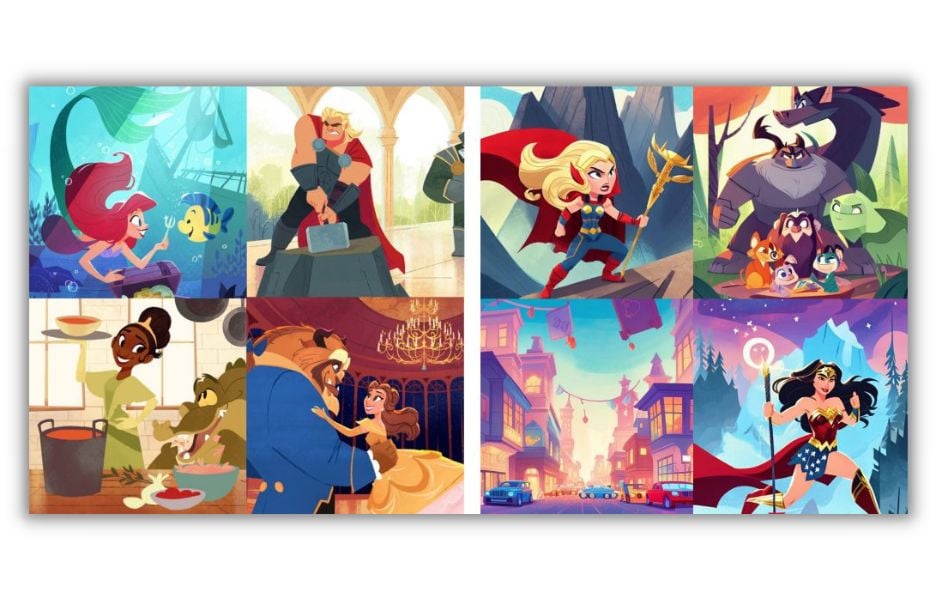

Caption: A comparison of Hollie Mengert’s original illustrations (left) and AI-generated versions based on her style as curated by Andy Baio.

The situation becomes even more complex considering Mengert created many of these illustrations for clients like Disney, who hold the actual copyrights.

Can artists (or writers, coders, etc.) in a similar predicament as Mengert successfully sue for copyright infringement?

The answer remains unclear. “Both sides of this argument seem incredibly confident, but the reality is nobody knows,” Baio told The Verge. “Anyone claiming to definitively know the outcome in court is mistaken.”

While using an AI trained on a vast collection of works from numerous creators might not lead to legal trouble, feeding it ten Stephen King novels and instructing it to write a new one in his style could have serious consequences.

Disclaimer: We are not legal professionals; consult with a lawyer for legal advice.

Uncertain Copyright Protection for AI-Generated Content

What about the copyright status of content you create using a chatbot? In most cases, it’s not protected unless you’ve made substantial edits, meaning you have limited recourse if someone steals it. Even for protected content, the copyright might belong to the AI’s programmer, not you, as many countries consider the tool’s creator, not the user, to be the rightful owner.

Avoiding Legal Challenges

Begin by selecting a reputable AI content creation tool from a company with transparent copyright policies and positive user reviews. Exercise good judgment to avoid intentionally replicating someone else’s work, focusing instead on using AI to enhance your own. If seeking legal protection for your AI-assisted creations, make significant changes or use AI solely for outlining, writing the majority of the content yourself.

Risk #7: Security and Privacy Breaches

AI tools introduce various security and data privacy risks for marketers. These range from direct attacks by malicious actors to users unknowingly sharing sensitive information with systems designed to spread it.

Security Threats from AI Tools

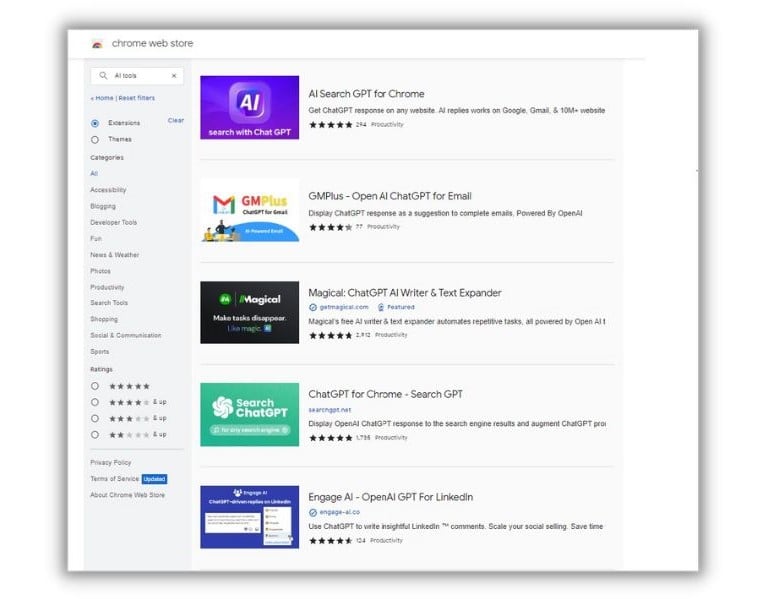

“Numerous products mimic legitimate tools but are actually malware,” warns Elaine Atwell, Senior Editor of Content Marketing at endpoint security provider Kolide. “These are incredibly difficult to distinguish from genuine tools and are readily available in the Chrome store.” A simple search for “AI tools” in the Google Chrome store yields countless results.

Atwell wrote about these risks on the Kolide blog, citing an incident involving a malicious Chrome extension disguised as “Quick access to Chat GPT.” This extension hijacked users’ Facebook accounts, stealing their cookies, including security cookies. According to Atwell, over 2,000 users downloaded this extension daily.

Unprotected Privacy

Even legitimate AI tools can pose security risks, Atwell explains. “Currently, most companies lack policies for assessing the risks associated with different extensions. Without clear guidelines, people globally are installing these tools and feeding them sensitive data.” Consider drafting an internal financial report for investors. Remember that AI networks learn from input to generate output for other users. Any data you input into the AI chatbot could be accessible to individuals outside your company, potentially surfacing if a competitor inquires about your financials.

Avoiding Privacy and Security Risks

Start by verifying the legitimacy of any software before use. Exercise caution regarding the information you share with chosen tools. “If using AI tools (and they do have their uses!), avoid inputting any sensitive data,” Atwell advises. When evaluating AI tools, inquire about their privacy and security policies alongside their usefulness and potential for bias.
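One way to act on Atwell's advice is to scrub obvious sensitive details from a prompt before pasting it into a chatbot. The Python sketch below is a minimal illustration under stated assumptions: the `PATTERNS` table and the `redact` function are hypothetical, the three patterns cover only simple email, US-style phone, and dollar-amount formats, and it is no substitute for a company policy on what may be shared.

```python
import re

# Hypothetical patterns; a real policy would cover far more than these.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "AMOUNT": re.compile(r"\$\d[\d,]*(?:\.\d+)?(?:\s(?:million|billion))?"),
}

def redact(text):
    """Replace each match of a sensitive pattern with a [LABEL] placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

# Hypothetical prompt containing internal financial and contact details.
prompt = (
    "Summarize: Q3 revenue was $4.2 million. "
    "Contact jane@example.com or 555-123-4567."
)

print(redact(prompt))
```

Pattern matching like this catches only what it was written to catch, so a person should still read the prompt before it leaves the company.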

Mitigating the Risks of AI Marketing

AI is evolving at an unprecedented pace. ChatGPT has already undergone significant advancements in capabilities within a year. It’s impossible to predict what we’ll achieve with AI in the coming months, let alone the potential challenges that lie ahead. Here’s how to enhance your AI marketing outcomes while mitigating common risks:

- Implement human review for content quality, readability, and brand voice.

- Thoroughly vet each tool for security and capabilities.

- Regularly check AI-driven ad targeting for bias.

- Scrutinize copy and images for potential copyright infringement.

We extend our gratitude to Elaine Atwell, Brett McHale, Nick Abbene, and Alaura Weaver for their contributions to this post.

As a reminder, here are the risks associated with utilizing AI for marketing:

- Machine learning bias

- Factual errors

- Misapplication of AI tools

- Repetitive content

- SEO degradation

- Legal challenges

- Security and privacy breaches