Is A/B testing synonymous with “Always Be Testing”? It absolutely should be! The moment you begin experimenting with different aspects of your marketing efforts – from the text in pay-per-click ads to your landing page designs and even email subject lines – you’ll understand that “A/B testing best practices” are merely a starting point. You can never be certain what will resonate with your audience without putting it to the test.

“We were completely astonished – absolutely floored!”

Hearing about an A/B test with unexpected outcomes always ignites a desire in me to test every possible element. This curiosity led me to pose a single question to 24 marketing professionals:

“What’s the most astonishing or remarkable result you’ve encountered while conducting an A/B test?”

Their insightful responses are detailed below. I trust you’ll find these testing anecdotes as captivating and motivating as I do! Here’s a preview of the contributors:

- Aaron Levy – Experiment with Form Placement on Your Landing Page

- AJ Kohn – Minor A/B Tests Can Yield Significant Results

- Brad Geddes – Achieving Perfection in Your A/B Tests Isn’t Mandatory

- Brad Shorr – Tests Demonstrate the Importance of Subtle Wording Adjustments

- Chris Kostecki – Increased Conversion Rates Achieved Through More Steps, Not Fewer

- Crystal O’Neill – Recognizing the Enduring Power of Positive Media Coverage

- Francis Shovlin – Examining the Impact of a Redesigned Call-to-Action Button

- Greg Meyers – Comprehensive Conversion Forms Triumph Over Concise Ones

- Jeff Allen – Standard Desktop Landing Page Outperforms Mobile-Optimized Version

- Joe Kerschbaum – Resist the Temptation to Blindly Follow Best Practices

- John Doherty – Unveiling Visitor Sentiment Through A/B Testing

- John Lee – Unexpected Victory: Outdated Landing Page Outshines “Flawless” Counterpart

- Ken Lyons – Strategic Placement of Offers: Above or Below the Fold?

- Larry Kim – The Importance of Comprehensive A/B Testing in Business

- Matthew Umbro – Evaluating the Impact of Button Color on Split Testing Results

- Megan Leap – Challenging Conventional Marketing Assumptions

- Michelle Morgan – The Power of A/B Testing Even With Invented Words

- Oli Gardner – Email Versus Tweets: An A/B Testing Showdown

- Perry Marshall – Uncovering Surprising Results Through Unexpected Tests

- Ryan Healy – The Influence of Salutations on Ad Copy Performance

- Sean Quadlin – Leveraging A/B Testing for Conversion Rate Optimization

- Shawn Livengood – Optimizing Landing Page Conversions Through Button Size A/B Testing

- Todd Mintz – The Impact of Dynamic Keyword Insertion in Branded Ads

- Tom Demers – Achieving Remarkable Results Through Minor A/B Test Adjustments

Let’s delve into their experiences to discover which elements warrant testing and the potential for extraordinary results…

And we encourage you to share your own remarkable A/B testing experiences in the comments!

Experiment with Form Placement on Your Landing Page

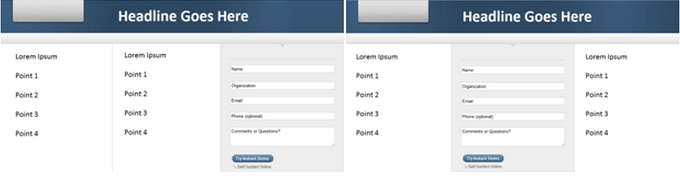

Throughout my digital marketing career, some of the most remarkable A/B test outcomes have stemmed from deviating from established best practices. A prime example involved shifting the position of a landing page form from its conventional right-side placement to the center.

(Before on the left, after on the right. Images/colors removed to protect client.)

Our baseline landing page was already well-optimized, boasting an 11% conversion rate. By simply relocating the form to a central position, we achieved a near 50% increase in conversions, reaching just shy of 16%. This demonstrates that challenging norms can yield impressive outcomes!
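For readers who want to check the arithmetic in a story like this one, here is a minimal sketch of the difference between an absolute change (percentage points) and a relative lift. The function name `relative_lift` is hypothetical, and the 16% figure is rounded up from the "just shy of 16%" quoted above, so treat the output as approximate.

```python
def relative_lift(baseline_rate: float, variant_rate: float) -> float:
    """Return the relative change of the variant over the baseline, as a fraction."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (variant_rate - baseline_rate) / baseline_rate


# Rates quoted in the story: 11% before, just shy of 16% after.
baseline = 0.11
variant = 0.16  # assumption: rounded up from "just shy of 16%"

print(f"Absolute change: {(variant - baseline) * 100:.1f} percentage points")
print(f"Relative lift: {relative_lift(baseline, variant):.1%}")
```

A five-point move on an 11% baseline works out to a relative lift in the mid-40s, which is consistent with the "near 50%" described above.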

Aaron Levy has been immersed in the world of digital marketing since 2007, dedicating his time as a PPC account manager at SEER Interactive, a Philadelphia-based Search agency. In his spare time, he aspires to be a brewer/cyclist/hockey star and shares his experiences on Twitter.

Minor A/B Tests Can Yield Significant Results

In 2007, while experimenting with URL capitalization in Google Ads, I stumbled upon a surprising 53% increase in click-through rates.

The test was straightforward. Two ads were created, identical except for the URL. One used the standard “www.sitename.com,” while the other employed initial capitalization: “www.SiteName.com.” This test, replicated multiple times, consistently yielded positive results.

Although Google discontinued this URL display format, it reinforced the notion that seemingly insignificant alterations can have a considerable impact. This experience ignited my fascination with how users visually engage with search results.

AJ Kohn leads Blind Five Year Old, a San Francisco-based Internet marketing company specializing in search. As a seasoned marketing professional with a proven track record spanning two decades, AJ merges a profound understanding of search marketing with a passion for product strategy and iterative product development, seamlessly blending design and user experience with quantitative analysis. Stay connected with him on Twitter at @ajkohn.

Achieving Perfection in Your A/B Tests Isn’t Mandatory

I once collaborated with a client whose landing page was far from optimal. It lacked visual appeal, and converting required navigating to a separate, equally unimpressive form page. I strongly advised them to revamp the page, at the very least integrating a streamlined form. The revised page, while still aesthetically lacking, incorporated the pre-existing form directly.

Surprisingly, this new iteration resulted in a 76% increase in website profit.

This experience was a revelation for my inner perfectionist: Achieving the best possible outcome doesn’t necessitate absolute perfection. Even incremental improvements can have a substantial impact.

Brad Geddes is the driving force behind Certified Knowledge, a platform dedicated to PPC training and tools. He is a recognized authority on Google AdWords, having authored Advanced Google AdWords, and holds the title of official Google AdWords Seminar Leader. For the latest industry insights, follow @bgTheory on Twitter.

Tests Demonstrate the Importance of Subtle Wording Adjustments

In the realm of PPC advertising, we’re continuously amazed by the significant impact that subtle shifts in emphasis can have on click-through rates. A recent example involved A/B testing these calls to action:

A. Get $10 off the first purchase. Book online now!

B. Get an additional $10 off. Book online now.

Surprisingly, option B achieved double the click-through rate. (For the record, I had anticipated option A to be the winner.)

Brad Shorr leads Content & Social Media at Straight North, a Chicago-based full-service Internet marketing firm. He is a regular contributor on topics related to SEO, copywriting, and content marketing. Connect with him on Twitter: @bradshorr.

Increased Conversion Rates Achieved Through More Steps, Not Fewer

One of the most unexpected insights I gained from A/B testing involved comparing two landing pages. The control page presented the product and “add to cart” options directly. In contrast, the variation positioned the product but required an extra click to access the actual product page.

Despite the additional step and lengthier text, the variation significantly outperformed the more direct approach, demonstrating a stronger conversion rate (+18% with 95% statistical significance) and a higher average order value. This highlighted the stage at which we were engaging traffic within the buying process. Consequently, we applied these findings to enhance the positioning of other products pre-sale.
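A claim like "+18% with 95% statistical significance" is commonly checked with a two-proportion z-test. The sketch below is one way to do that, not necessarily the method used in this test. The visitor and conversion counts are hypothetical, chosen only so that the variation shows an 18% relative lift, and `two_proportion_z_test` is an illustrative name.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both pages convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts (not from the story): 5.0% control vs. 5.9% variation.
control_conversions, control_visitors = 500, 10_000
variation_conversions, variation_visitors = 590, 10_000

z, p = two_proportion_z_test(control_conversions, control_visitors,
                             variation_conversions, variation_visitors)
print(f"z = {z:.2f}, p = {p:.4f}, significant at the 95% level: {p < 0.05}")
```

With the same 18% relative lift but only a tenth of the traffic, the p-value would rise well above 0.05, which is why sample size matters as much as the size of the lift.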

Chris Kostecki’s journey in Search began in 2006, complementing his marketing experience that dates back to 1997. He developed a PPC solution for small businesses, managed PPC for eCommerce clients in an agency setting, and now serves as the in-house Search Analyst at Keurig Inc. Follow Chris on Twitter to stay abreast of the latest Search Marketing trends, especially during #PPCChat every Tuesday from 12-1 p.m. EST. His views are his own and do not necessarily reflect those of any associated entities.

Recognizing the Enduring Power of Positive Media Coverage

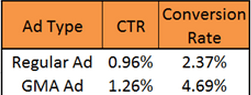

One of the most remarkable A/B test outcomes I’ve witnessed involved ad testing for a client featured on Good Morning America (GMA). We sought to leverage the credibility associated with such exposure. Initially, we A/B tested an ad within our Branded Campaigns. One variation highlighted the GMA feature with a “Featured on Good Morning America” tagline, while the control used our standard “Official Site” tagline. As anticipated, the GMA tagline ads significantly outperformed the control in terms of click-through and conversion rates. This was in line with our expectations, given GMA’s reputation.

Building on this, we implemented similar A/B ad tests across our Non-Branded campaigns. Again, the “Featured on GMA” variations consistently emerged victorious, which wasn’t entirely surprising.

However, here’s where the surprise lies. Despite the GMA segment airing nearly three years prior, even after numerous rounds of A/B testing, ad variations incorporating “Featured on GMA” continue to be top performers. Here’s a recent example:

The key takeaway from this A/B testing experience? Never underestimate the enduring impact of positive media coverage!

Crystal (Anderson) O’Neill heads the PPC division at SEER Interactive, a Philadelphia-based Digital Agency. With a PPC career spanning back to 2006, she has managed international PPC accounts across various platforms and industries, overseeing monthly budgets ranging from four to six figures. Connect with her on Twitter at @CrystalA__.

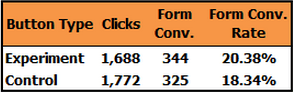

Examining the Impact of a Redesigned Call-to-Action Button

A/B testing revealed a significant conversion lift for a client simply by introducing a new call-to-action button. Resource constraints prevented us from creating and testing entirely new pages, so we opted to split traffic between two distinct button designs.

Over a seven-week testing period, this seemingly minor change resulted in an 11% increase in form conversion rates.

My recommendation: Never underestimate the potential impact of testing even the smallest elements. Resource limitations shouldn’t hinder your ability to enhance conversions and revenue.

Francis Shovlin has been involved in the search industry for nearly 5 years, the last 2 as a PPC Account Manager with the SEER Interactive team. This self-proclaimed “master of 70 characters” also enjoys good beer and music. Follow him on Twitter for insights on life and the paid search industry: @fmshovlin.

Comprehensive Conversion Forms Triumph Over Concise Ones

Throughout my years of A/B testing, I’ve observed numerous intriguing outcomes, some challenging conventional optimization techniques. In one instance, “excessive content” surprisingly outperformed “usability & conversion focus.” I proposed a new landing page to one of my PPC marketing clients. It followed a “hybrid model,” condensing the most critical aspects of their product. However, the client, while understanding the strategy, insisted their audience preferred navigating extensive pages over a concise, easily digestible, above-the-fold, conversion-optimized format.

Outcome: The new landing page had a higher bounce rate and lower conversions compared to the existing one. This experience underscored a crucial lesson: Embrace ongoing A/B testing and prioritize client feedback!

Greg Meyers is the visionary behind Afterclicks Interactive and the author of SemGeek.com.

Standard Desktop Landing Page Outperforms Mobile-Optimized Version

As a strong advocate for mobile PPC and a co-presenter of an upcoming Hanapin/nexus-security webinar on the topic, I was taken aback by the results of a month-long A/B test. It revealed that a standard desktop landing page significantly outperformed its mobile-specific counterpart. The desktop version converted traffic into leads at approximately 15%, while the mobile-optimized page lagged behind at around 11%. This highlights the importance of testing everything, even when it comes to implementing widely accepted best practices.

Jeff Allen has been a fixture in Internet marketing since May 2000. His impressive career includes leading the development of a proprietary email marketing platform, managing over 12 million leads, navigating two mergers, and contributing to the multi-million dollar sale of an agency. Currently, he serves as the Account Director at Hanapin Marketing and is a prominent contributor to their blog, PPCHero.com.

Resist the Temptation to Blindly Follow Best Practices

Over time, I’ve come to understand that A/B testing is full of surprises. Even when backed by years of experience and countless successful tests, predicting outcomes is an impossible feat, especially when human behavior is involved. We were collaborating with an e-commerce client on a new landing page. It was visually stunning, and we were utterly convinced that it would lead to a dramatic increase in conversions. However, A/B testing revealed that the old page reigned supreme. This outdated page suffered from poor graphics, a confusing layout, and weak copy, among other shortcomings.

Initially, we were tempted to disregard the results and simply switch to the new page. That’s how confident we were. Thankfully, we resisted this urge, as it turned out to be a valuable (though frustrating) learning experience.

Joseph Kerschbaum is Vice President and Managing Partner at Clix Marketing, an agency specializing in search and social advertising. A frequent speaker at industry events like SES and SMX, Joseph is a recognized voice in the SEM industry. His insights are regularly featured in Search Engine Watch, Website Magazine, and Visibility Magazine. He also co-authored the Wiley/Sybex book, “Pay-Per-Click SEM: One Hour a Day.”

Unveiling Visitor Sentiment Through A/B Testing

My most unexpected A/B testing result emerged while optimizing the conversion funnel on a website. Our hypothesis was that allowing users to easily exit the funnel contributed to a high drop-off rate. We anticipated that removing navigation options would improve conversions.

However, the outcome was a dramatic surge in our bounce rate, as users felt trapped! Granting users the freedom to navigate away from the funnel actually resulted in a higher conversion rate compared to restricting their movement. This counterintuitive finding underscored the importance of testing assumptions.

John Doherty leads the New York City office of Distilled as the senior SEO consultant. With a background in technical writing and web development, he thrives on all things technical. As a skilled writer, he contributes to various industry platforms, including Distilled, SEOmoz, and his personal SEO site. When he’s not immersed in the digital world, John enjoys cycling around Brooklyn, rock climbing, photography, and exploring the world of craft beer. Find him on Twitter at @dohertyjf.

Unexpected Victory: Outdated Landing Page Outshines “Flawless” Counterpart

One of our clients had engaged a reputable landing page optimization agency. The landing page in question was in dire need of an overhaul, riddled with outdated elements, ineffective calls to action, and overall disorganization. The agency delivered a visually appealing replacement that ticked all the boxes for conversion-focused design. We decided to pit the two pages against each other in an A/B test: the outdated mess versus the flawless redesign. It was a classic David versus Goliath scenario. The winner? The outdated, messy landing page. A few months later, we repeated the test, achieving the same outcome.

The moral of this story? It’s tempting to bypass testing and simply direct traffic to the redesigned page based on assumptions. We’re glad we resisted that urge! While we never pinpointed the reason behind the unexpected winner, it highlighted the critical importance of always validating assumptions through testing.

John Lee is the Director of Client Services for Clix Marketing, an SEM agency. He is a sought-after speaker and blogger on topics related to PPC, display, and social media advertising.

Strategic Placement of Offers: Above or Below the Fold?

One of the most surprisingly effective yet astonishingly simple A/B test results I’ve encountered happened while working on an SEO landing page. We took the offer/call to action that resided at the end of the sales copy and replicated it above the fold, just beneath the introductory paragraph. This minor change led to an astounding 400% increase in conversion rates. The rationale was to interrupt the user’s flow early on, especially since the page featured extensive copy optimized for organic traffic, providing an immediate opportunity to convert.

Prior to implementing this change, I was skeptical, believing it contradicted best practices for a quality organic landing page experience. Interrupting the flow before fully outlining benefits and the unique selling proposition seemed counterintuitive and somewhat forceful. However, this is precisely why we test! We successfully replicated these results on other site pages, solidifying this practice.

The lesson? If users are primed to convert, don’t make them wait; you risk losing them. Instead, provide multiple conversion opportunities on a page.

Ken Lyons is the Co-Founder of MeasuredSEM.

The Importance of Comprehensive A/B Testing in Business

Ah yes, A/B tests—a topic I’m passionate about! There are simply too many noteworthy examples to choose from. So, indulge me as I share three, particularly those that evoked a “No way, that can’t be right, we need to extend this test” reaction. So, without further ado, here they are:

EXTRA STRENGTH SEO CONTENT A/B TEST: As an SEO enthusiast, I tend to pack our web pages with valuable information. Why be concise when you can elaborate, right? Take our free AdWords Grader application, for instance. Scroll down, and you’ll find a wealth of content beneath the sign-up form. However, some of my colleagues at nexus-security, with their keen eye for design and user experience, found my content-heavy approach rather unconventional. So, we decided to test removing the extensive FAQ section below the fold to gauge its impact on sign-up conversions. To our utter astonishment, the variation with the 2000-word FAQ outperformed the streamlined version. We were completely floored!

EXTRA SECURE LOGIN A/B TEST: Speaking of the AdWords Grader, its functionality hinges on users logging into their Google Ads accounts, allowing us to analyze performance and generate a report card highlighting strengths and areas for improvement. We had recently implemented an upgraded login mechanism using Google’s latest OAuth extra-secure authentication method, anticipating an increase in graded accounts. However, the A/B test yielded disappointing results. The new, highly secure OAuth-based authentication performed poorly, likely due to the added steps in the sign-up process. Ultimately, we reverted to the standard secure Google Ads login.

PRODUCT TRIAL LENGTH A/B TEST: My company offers PPC management software with a free 7-day trial. We received feedback that this trial period wasn’t sufficient for thorough testing. In response, we experimented with extending the trial to 14 days, 30 days, and so on. Measuring prospect connect rates – the percentage of qualified leads resulting in scheduled demos – we discovered a decline as the trial length increased. We ultimately reverted to the original 7-day trial. It’s possible that the extended trials felt overwhelming to potential users.

It’s crucial to remember that these are just our experiences, and what worked for us might not necessarily translate to your business. The key takeaway is to remain receptive and let data guide your decisions rather than relying on self-proclaimed experts (or even customer feedback) that advocate for a specific approach.

Larry Kim is the Founder and CTO of nexus-security, Inc., the company behind the 20 Minute PPC Work Week and nexus-security Advisor, an award-winning PPC management platform. You can stay connected with him on Google+ and Twitter.

Evaluating the Impact of Button Color on Split Testing Results

We implemented a remarketing campaign to re-engage potential customers who had abandoned their shopping carts. Two sets of ads were created, nearly identical in every aspect, including font, offer, and imagery. The only differentiating factor was the “Shop Now” button color. One set featured a gray button, while the other showcased a vibrant green. We rotated these ads evenly over two weeks and were thrilled with the outcome. The green button ads achieved three times the conversion rate of the gray button ads. Moreover, the green button ads significantly outperformed their gray counterparts in terms of click-through rates.

The green color choice clearly made the ads stand out, effectively recapturing the attention of shoppers who had previously abandoned their carts. This test confirmed the significance of visual prominence on the Display Network.

Matthew Umbro is the Director of Paid Search at Exclusive Concepts. Since 2007, he has helped over 100 clients across diverse industries achieve profitable ROIs and enhanced lead generation through PPC. Matthew also founded PPC Chat, a weekly Twitter chat (#ppcchat) where industry experts discuss, analyze, and debate various PPC topics.

Challenging Conventional Marketing Assumptions

One of the most unexpected A/B test results I’ve encountered involved a landing page comparison. Surprisingly, the page with benefit-driven copy was outperformed by one emphasizing product features. My experience had consistently shown that benefits resonate more strongly than features. As marketers, we’re taught to prioritize benefit-focused messaging. Yet, in this particular campaign, visitors were more interested in understanding the product’s features. This underscores the importance of considering the target audience, traffic source, ad message, and product when determining the most effective messaging. Focusing on features rather than benefits might be the winning strategy in certain cases. However, this can only be determined through testing. (As a follow-up, we tested features versus a combined benefits/features approach, and the latter emerged victorious. A/B testing for the win!)

Megan Leap leads marketing and content efforts at the Online Marketing Institute, a trusted resource for digital marketing education and training. A recognized expert in social media and content marketing, her work has been featured in prominent publications like The New York Times, Entrepreneur Magazine, and SmartBrief. Previously, she honed her skills at MarketingProfs, spearheading social media and conversion optimization initiatives, and Ion Interactive, where she established their social media presence from the ground up. Her expertise also extends to consulting for leading brands on social media strategy and campaign management. Follow her on Twitter: @meganleap.

The Power of A/B Testing Even With Invented Words

My most remarkable A/B testing experience stemmed not from a stroke of creative genius, but rather from a mix of frustration and sheer determination. A shift in marketing regulations for one of my lead generation clients made using the word “Apply” in ad copy non-compliant, as customers weren’t technically applying at that stage. After struggling to find a suitable replacement for “Apply” that yielded comparable results, I decided to get creative and coined the term “PreApply.” And yes, I maintained that capitalization. To my astonishment, this fabricated word resulted in a 27% increase in click-through rate, an 11% rise in conversion rate, and an 8% decrease in cost per acquisition.

While the reason behind its success remains unclear, perhaps suggesting a less daunting initial step, the outcome was undeniable. This experience demonstrates that even regulatory changes can present opportunities for improved performance and increased client satisfaction.

Michelle Morgan is a PPC Specialist at Clix Marketing. You can connect with her on Twitter at @michellemsem.

Email Versus Tweets: An A/B Testing Showdown

One of our most intriguing and insightful A/B tests involved offering an ebook in exchange for either an email address or a tweet (using a 50/50 traffic split). This served a dual purpose: generating leads on one hand and driving viral exposure on the other. The tweets directed users back to the landing page, perpetuating the cycle.

The email opt-in variation converted at 22%, slightly surpassing the 18% conversion rate of the “pay with a tweet” option. Interestingly, an inline survey revealed that 45% of respondents preferred providing their email address, which aligned with the initial A/B test outcome.

However, here’s where the story takes an unexpected turn. To gain further insights, we introduced a third variation: a single page offering users the same two options.

The findings from this final test were astounding: 85% of users chose to provide their email address.

Further analysis revealed that users who opted for the tweet often deleted it from their feed shortly after, likely due to the personal nature of their accounts and a reluctance to share business-related content.

Key takeaway: Initial A/B test outcomes don’t always tell the whole story. Continuous testing and exploration of new hypotheses are essential for uncovering the truth.

Oli Gardner is the Co-Founder & Creative Director of Unbounce, a platform for creating DIY landing pages. He is a passionate writer, primarily focusing on landing pages and conversion rate optimization. Follow him on Twitter for valuable insights: @OliGardner.

Uncovering Surprising Results Through Unexpected Tests

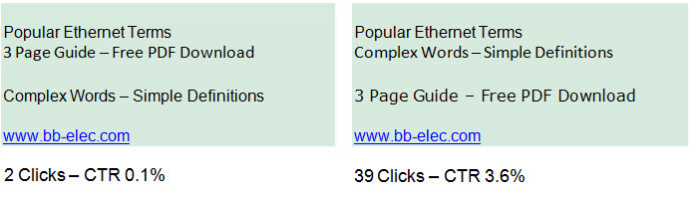

What surprised me most about A/B split testing was the realization that “unconventional” tactics could yield tenfold results. Take these Google Ads variations, for example:

The only difference lies in the reversal of lines 2 and 3.

While the reason behind the difference in performance remains a mystery, my theory is that the second ad effectively highlights the benefit before specifying the format in which it’s delivered.

Here’s another intriguing example:

How to Write a Book, Fast 14 Days from Start to Finish Unique, Step By Step Program Write-A-Book-Faster.com 4.40% CTR

How to Write a Book Fast 14 Days from Start to Finish Unique, Step By Step Program Write-A-Book-Faster.com 4.12% CTR

Did you notice the difference? These two ads demonstrate the tangible impact that even commas can have on your bottom line. The seemingly insignificant difference in punctuation resulted in an 8% performance gap, translating to an approximate $500 annual difference in this specific case.

The takeaway? These nuances matter. Eliminate guesswork by embracing testing.

Perry Marshall’s Chicago-based company, Perry S. Marshall & Associates, assists both online and offline businesses in generating sales leads, driving web traffic, and optimizing advertising efforts. As a highly sought-after marketing consultant, his work has been featured in numerous influential marketing books. His extensive body of work includes thousands of articles on sales, marketing, and technology, in addition to books such as The Ultimate Guide to Google AdWords (Entrepreneur Press, 3rd Edition 2012), a comprehensive guide to Google advertising, and The Ultimate Guide to Facebook Advertising (Entrepreneur Press, 2011). Our interview with Perry Marshall can be found here.

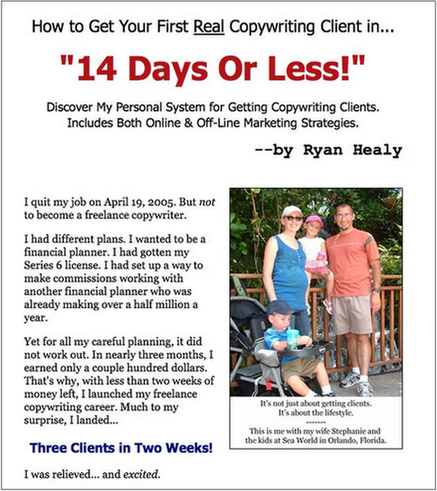

The Influence of Salutations on Ad Copy Performance

Having frequently heard about the potential impact of salutations on sales message conversions, I decided to conduct my own A/B test on one of my sales pages. The test was simple: one version included the salutation “Dear Friend,” while the other did not.

While I anticipated a difference in performance, the magnitude of the result was unexpected. The version without the “Dear Friend” salutation outperformed the original by a significant 28.2%.

Ryan Healy specializes in direct response copywriting. Since 2002, he has collaborated with a diverse clientele, including Alex Mandossian, Terry Dean, and Pulte Homes. His popular blog covers insights on copywriting, business growth, and product creation.

Leveraging A/B Testing for Conversion Rate Optimization

My most surprising yet simultaneously underwhelming A/B testing experience occurred while working on conversion rate optimization. We developed numerous PPC-specific landing pages, each featuring distinct layouts, fresh images, embedded video content, captivating headlines, and varying font colors—you name it. Despite our efforts, the control page consistently outperformed every single variation. New and engaging content was consistently overshadowed by the basic page we were desperately trying to replace. It was a classic case of “If it ain’t broke, don’t fix it.” The page, while lacking excitement, was undeniably reliable, much like a slice of white bread. I eagerly await the day I discover a winning variation, allowing me to update this anecdote with tales of an exciting asiago-focaccia-style landing page.

Sean Quadlin is an Account Manager at Hanapin Marketing and contributes to PPCHero.com. He currently manages a six-figure monthly ad spend, primarily focused on lead generation for consumer-facing businesses. Connect with him on Twitter @SeanQuadlin or find him enjoying his downtime with his beloved TiVo.

Optimizing Landing Page Conversions Through Button Size A/B Testing

One of my most astonishing A/B test results involved a landing page test for a marketplace site focused on new member sign-ups as the primary conversion event. The PPC landing pages were custom-coded, serving as a gateway to the sign-up process, highlighting key benefits, showcasing relevant visuals, and featuring a prominent “Get Started” button that linked to the registration page. After testing various offers, wording, and layout colors with minimal impact, we decided to experiment with button size. Increasing the button size yielded a significant improvement, prompting us to experiment further. We jokingly remarked that, at this rate, the landing page would eventually consist solely of a giant sign-up button!

This experience highlighted the often-overlooked influence of page layout on conversions. While we had meticulously tested numerous elements with little success, simply making the next step in the conversion process incredibly obvious yielded remarkable results. Since then, I’ve incorporated this lesson into all my landing page testing endeavors, ensuring that the path to conversion is crystal clear—even more so than you might initially deem necessary.

Shawn Livengood is the Online Marketing Manager for BuildASign.com and the author behind the PPC blog PPC Without Pity. In his role, Shawn spearheads the development and execution of the online marketing strategy for BuildASign.com’s diverse brands and microsites, overseeing PPC, SEO, social media, email marketing, and affiliate marketing channels. He also manages and enhances all web analytics platforms.

The Impact of Dynamic Keyword Insertion in Branded Ads

One particular PPC account I managed included a campaign targeting hundreds of brands within the merchant’s vertical. The goal was to drive lead generation sign-ups. The initial ad headline read:

50% Off Widgets.

However, I was restricted from incorporating brand names in the ad copy.

The campaign’s performance was abysmal, barely registering on my radar. We decided to test the impact of including brand names in the headline.

Given the sheer volume of brands involved, I opted for a quick proof-of-concept test, modifying the headline across hundreds of ad groups to:

50% Off {KeyWord:Brand}.

At the time, the campaign budget was flexible as long as specific CPA targets were met.

The results were astonishing. Overnight, this previously dormant campaign experienced an explosion in conversions, transforming into the account’s top performer. It outpaced other campaigns by a factor of 4-5x, generating so much volume that I initially suspected a technical glitch within Google Ads.

Ultimately, this experience demonstrated that even without granular ad headline customization, impressive results were achievable! 🙂

Todd Mintz, a valued member of the PPC Associates team since March 2011, possesses over 10 years of experience in search marketing, utilizing Google Ads since its inception. He is an active participant in the SEM social media sphere, curating and contributing to MarketingLand. As a founding member of SEMpdx (Portland’s Search Engine Marketing Group) and a current board member, Todd regularly shares his insights on their blog.

Achieving Remarkable Results Through Minor A/B Test Adjustments

In my experience, significant structural changes tend to yield the most substantial improvements. So, it’s always surprising when a seemingly minor tweak leads to a dramatic conversion lift, especially when it outperforms a more substantial variation.

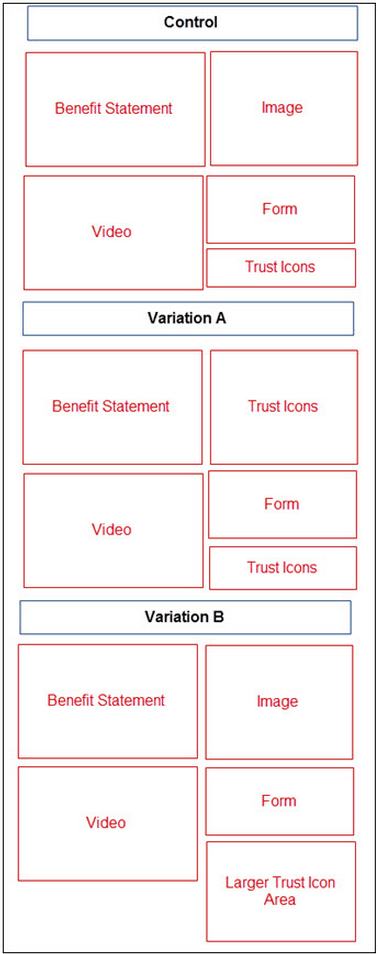

Below are simplified representations of an A/B test we conducted. The initial control page, designed by the client, featured trust icons (e.g., logos, associations) beneath the form. We believed that incorporating additional trust symbols would enhance the credibility of their relatively new and unknown offering, potentially boosting conversions.

Variation A introduced these extra trust symbols above the fold, replacing the existing “hero shot” which seemed ineffective.

Surprisingly, **Variation A performed slightly worse** than the original landing page.

Undeterred, we created Variation B, retaining the hero shot above the fold and instead integrating the new trust icons into an expanded trust section below the form. We also reorganized the icons, giving more prominence to the newly added, more impactful symbols.

Variation B, which simply incorporated a few new icons into an existing section, achieved a staggering 367% improvement! This unexpected outcome, coupled with the marginal underperformance of the more substantial Variation A, highlighted the profound impact that subtle adjustments can have.

Tom Demers is the co-founder and managing partner of Measured SEM search engine marketing consulting, a boutique search marketing firm. To connect with Tom directly, reach out via email at tom at measuredsem.com or by following him on Twitter.

Your Turn: What’s your most surprising A/B test?

What are your thoughts on these A/B testing experiences? Which one resonated with you the most? We invite you to share your own remarkable A/B testing stories in the comments below!
